Terms like neural networks come up often in discussions of artificial intelligence (AI) and deep learning (DL). These systems power facial recognition on phones. They also suggest shows you might like. For many people, though, the idea remains unclear. It brings to mind complex math that only data scientists understand.

This article offers a clear guide to neural networks. It explains these powerful AI tools. It breaks down their main parts. It shows how they learn. It covers their impact on the modern world. You may be a tech fan. You may want to grasp AI’s uses. Or you may just want to know about future technology. Either way, this guide is for you. By the end, you will understand what neural networks are, why they matter, and how they help build the smart systems we use daily.

What a Neural Network Is

Imagine teaching a child to find a cat. You show pictures of cats. These show many types of cats from different angles. Each time, you say, ‘That is a cat,’ or ‘That is not a cat.’ The child starts to see patterns, like whiskers or pointy ears. Then, the child can find a cat they have not seen before.

A neural network works in a similar way, but it uses algorithms. A neural network is a computer model. It takes ideas from the networks of neurons in the human brain. It finds patterns. It makes choices. It solves difficult problems. These tasks are hard for traditional computer programs that follow fixed rules.

The Brain as a Model

The human brain has billions of neurons, or nerve cells. These connect to each other. They send and receive electrical signals. Each neuron processes data. Then it sends data to thousands of other neurons. This network helps us learn. It helps us remember, see things, and make sense of the world.

Artificial neural networks, or neural networks, try to copy this brain process. They use artificial nodes, or perceptrons. They use connections with weights. These weights show how strong a connection is. Data enters the network. It moves through layers of linked nodes. Each node processes data based on its weights and bias. Then it makes an output. The network changes its internal weights and biases during training. This makes its outputs more accurate. It is like a child learning from practice.

Think of it as a smart sorting machine. You give it data, like animal pictures. It learns to sort them into types, such as ‘cat,’ ‘dog,’ or ‘bird.’ It finds the sorting rules itself. You do not program them in. This ability to learn from data makes neural networks powerful. It makes them a core part of modern artificial intelligence.

Neural Network Parts

To understand how a neural network works, we must look at its main parts. These parts are simple on their own.

Neurons, or Nodes

A neural network has neurons. People also call them nodes or perceptrons. These are the main parts that process data. Each neuron does four main jobs:

- **Gets Inputs:** A neuron gets inputs from other neurons. These inputs come with a weight. The weight shows how much that input affects the neuron’s choice.

- **Does a Calculation:** The neuron multiplies each input by its weight and adds up the results. It also adds a bias value. The bias shifts the total up or down. This makes the neuron easier or harder to activate.

- **Uses an Activation Function:** The neuron sends the result through an activation function. This function decides if the neuron should become active. It also decides what value it should send. This function helps the network learn complex patterns.

- **Sends Output:** The output of the activation function becomes input for the next neurons.
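
The four jobs above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation. The input values, weights, and bias are made up, and sigmoid is assumed as the activation function (one option among several).

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs, plus the bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values, chosen only for illustration.
inputs = [0.5, 0.8]
weights = [0.4, -0.2]   # the second input pushes against activation
bias = 0.1

print(neuron_output(inputs, weights, bias))
```

The value printed here would become one of the inputs to neurons in the next layer.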

Connections, or Synapses

Neurons in a neural network connect to each other. This is like how synapses link brain neurons. These connections let data flow from one neuron to the next. Each connection has a weight. This weight is a number. It shows the connection’s strength. A large positive weight means the input helps activate the next neuron strongly. A large negative weight means it stops activation strongly. The network learns these weights during training.

Each neuron also usually has a bias value. The bias is like an extra input that is always on. It lets the neuron activate more or less easily, regardless of its inputs. Like the weights, the bias is adjusted during training. This helps the model fit data better.

Layers: Network Setup

Neural networks have layers of neurons. There are three types of layers:

- **Input Layer:** This is the network’s first layer. Each neuron here takes one feature of the input data. For example, if you feed an image with 28×28 pixels, the input layer might have 784 neurons. Each neuron shows the light level of one pixel. This layer only gets and sends raw data. It does not do complex calculations.

- **Hidden Layers:** These layers sit between the input and output layers. A network can have one or many hidden layers. Deep learning happens here. More hidden layers mean a deeper network. Each neuron in a hidden layer gets inputs from the layer before it. It does calculations. Then it sends its output to the next layer. These layers find harder patterns in the data. For example, in image tasks, the first hidden layer might find edges. The next might combine edges to make shapes. Later layers might find abstract features like eyes.

- **Output Layer:** This is the network’s final layer. Its neurons make the network’s prediction. The number of neurons here depends on the problem. For a yes/no problem, there might be one output neuron. For many types, there might be one output neuron for each type. The output often shows the chance of a certain result.
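
To get a feel for layer sizes, the sketch below counts the weights and biases in a small fully connected network. The 784 inputs match the 28×28 image example above. The hidden layer of 128 neurons and the 10 output neurons are assumptions made only for illustration.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between the two layers
        total += n_out         # one bias per neuron in the receiving layer
    return total

# Input layer, one hidden layer, output layer (hidden and output sizes are hypothetical).
layer_sizes = [784, 128, 10]
print(count_parameters(layer_sizes))  # 101770
```

Even this small network has over a hundred thousand numbers to learn. Most of them sit between the input layer and the first hidden layer.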

Activation Functions: Neuron Choosers

A neuron sums its weighted inputs and adds the bias. Then it sends this result through an activation function. This function adds non-linearity to the network. Without it, stacking layers would gain nothing. The whole network would behave like a single linear model. It could not learn complex patterns.

Here are some common activation functions explained simply:

- **Sigmoid Function:** This function squashes the input value to a number between 0 and 1. It was common in earlier networks. It is still used in output layers where a probability is needed. It acts like a smooth on/off switch.

- **ReLU (Rectified Linear Unit):** This is a popular activation function today. It is simple. If the input is positive, it outputs the input value. If the input is negative, it outputs zero. This simplicity makes networks train faster. It also eases the vanishing gradient problem, where updates get too small for the network to keep learning. It acts like a gate that passes positive values and blocks negative ones.

- **Tanh (Hyperbolic Tangent):** This is like sigmoid. It changes the input to a range between -1 and 1. People often choose it over sigmoid in hidden layers. Its output centers around zero, which can help training.

The choice of activation function changes how well a network learns. It lets the network model complex, non-linear links in data. This makes it far more powerful than a purely linear model.
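
The three activation functions described above are short enough to write out directly. This is a plain Python sketch using only the standard `math` module. The sample inputs are arbitrary.

```python
import math

def sigmoid(z):
    """Output between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive values through, output zero otherwise."""
    return max(0.0, z)

def tanh(z):
    """Output between -1 and 1, centered on zero."""
    return math.tanh(z)

# Compare the three functions on a negative, a zero, and a positive input.
for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 3), relu(z), round(tanh(z), 3))
```

Note how sigmoid and tanh flatten out for large inputs, while ReLU keeps growing for positive ones.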

How a Neural Network Learns

Neural networks learn from data. This is their most interesting part. You do not write rules for every case. A neural network finds the rules itself. This learning process repeats. It involves several key steps.

Data: Learning Fuel

A child learns best with many examples. Neural networks need large amounts of data to learn well. This data splits into two sets:

- **Training Data:** This is the large data set the network uses to learn. It has input and output pairs. Examples are cat pictures labeled ‘cat,’ or customer data with buying choices. The network sees this data. It tries to predict. Then it adjusts based on how correct its prediction was.

- **Testing Data:** After training, the network is checked on new data. This testing data helps see how well the network uses its learning on new examples.

The quality and amount of training data are very important. Bad or too little data can make a network work poorly. It can also lead to biased predictions.
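
A simple way to picture the two sets is a shuffled split. The sketch below is one possible approach, assuming an 80/20 split and a toy list of labeled examples. The function name `train_test_split` here is our own helper, not a library call.

```python
import random

def train_test_split(examples, test_fraction=0.2, seed=0):
    """Shuffle the examples, then hold out a fraction for testing."""
    rng = random.Random(seed)   # fixed seed so the split is repeatable
    shuffled = list(examples)   # copy, so the original list is untouched
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Toy data: (input, label) pairs, made up for illustration.
examples = [(i, i % 2) for i in range(10)]
train, test = train_test_split(examples)
print(len(train), len(test))  # 8 2
```

The key point is that no example appears in both sets. The network never sees the testing data while it learns.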

Forward Propagation: Making a Prediction

Forward propagation sends input data through the network. It goes from the input layer, through hidden layers, and to the output layer. This process makes a prediction. Here is how it works:

- **Input:** Raw data, like image pixel values, enters the input layer.

- **Weighted Sum:** Each neuron in the first hidden layer gets the inputs. It multiplies them by their weights. It sums them up. Then it adds its bias.

- **Activation:** This sum then goes through the neuron’s activation function.

- **Repeat:** This neuron’s output becomes an input for neurons in the next hidden layer. The process repeats until data reaches the output layer.

- **Prediction:** The output layer makes the network’s final prediction. For example, it says, ‘This is 95% a cat,’ or ‘The stock price will be $150.’

At the start of training, weights and biases are random. The network’s predictions will likely be very wrong. Learning begins here.
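
Here is a small sketch of forward propagation in plain Python. It assumes a tiny network with 3 inputs, 4 hidden neurons, and 1 output, with sigmoid used everywhere. The sizes, the input values, and the random starting weights are all illustrative assumptions.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum, adds its bias, then activates."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        outputs.append(sigmoid(z))
    return outputs

def forward(inputs, layers):
    """Feed the outputs of each layer into the next one."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

def random_layer(n_in, n_out, rng):
    """Random starting weights and biases, as at the beginning of training."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases

rng = random.Random(0)
layers = [random_layer(3, 4, rng), random_layer(4, 1, rng)]
prediction = forward([0.2, 0.7, 0.1], layers)
print(prediction)  # a single value between 0 and 1, meaningless until trained
```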

Loss Function: Measuring the Error

The network makes a prediction. Then it needs to know how wrong its prediction was. The loss function does this. It calculates the difference between the network’s prediction and the actual correct answer from the training data. A higher loss number means the network’s prediction was worse.

For example:

- The network predicts 0.8 for a cat image. The actual label is 1.0. The loss function will calculate a small error.

- The network predicts 0.1 for a cat image. The loss function will calculate a large error.

Training aims to make this loss function as small as possible. The goal is to make network predictions very close to the correct answers.
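
The two cat examples above can be checked with a few lines of code. The article does not name a specific loss function, so this sketch assumes squared error, which is one common choice.

```python
def squared_error(prediction, target):
    """Squared difference between the prediction and the correct answer."""
    return (prediction - target) ** 2

# The label for a cat image is 1.0 in both cases.
close_guess = squared_error(0.8, 1.0)
poor_guess = squared_error(0.1, 1.0)

print(round(close_guess, 2))  # 0.04
print(round(poor_guess, 2))   # 0.81
```

The poor guess gets a loss about twenty times larger. That larger number is the signal the network uses to correct itself.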

Backpropagation: Learning from Mistakes

Backpropagation is the main algorithm that lets a neural network learn. After calculating the loss, backpropagation works backward through the network. It goes from the output layer to the input layer. It finds out how much each weight and bias helped cause the total error.

Imagine you bake a cake. It does not taste right. You try to find out what ingredient was wrong. Backpropagation does something similar for the network. It finds the slope of the error for each weight and bias. This slope is called the gradient. It shows in which direction, and how strongly, each weight and bias should change to reduce the loss. The math behind it is the chain rule from calculus. But its idea is to send the error backward through the layers. Each connection then knows how to change its weight. This makes the output more correct next time.
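
For a single neuron, the backward step is short enough to write by hand. The sketch below assumes one input, a sigmoid activation, and a squared error loss. All values are made up. It also compares the result with a brute-force estimate, as a sanity check.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_for(w, b, x, target):
    prediction = sigmoid(w * x + b)
    return (prediction - target) ** 2

def gradients(w, b, x, target):
    """Chain rule: dLoss/dw = dLoss/dpred * dpred/dz * dz/dw."""
    prediction = sigmoid(w * x + b)
    dloss_dpred = 2.0 * (prediction - target)
    dpred_dz = prediction * (1.0 - prediction)  # derivative of sigmoid
    dz_dw = x
    dz_db = 1.0
    return dloss_dpred * dpred_dz * dz_dw, dloss_dpred * dpred_dz * dz_db

# Hypothetical starting point.
w, b, x, target = 0.5, 0.0, 2.0, 1.0
grad_w, grad_b = gradients(w, b, x, target)

# Sanity check: nudge the weight a tiny bit and measure how the loss changes.
eps = 1e-6
numeric_w = (loss_for(w + eps, b, x, target) - loss_for(w - eps, b, x, target)) / (2 * eps)
print(grad_w, numeric_w)  # the two numbers should nearly match
```

Here the slope for the weight is negative. That means increasing the weight would lower the loss. In a full network, the same chain of multiplications continues backward through every layer.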

Optimization (Gradient Descent): Getting Better

Backpropagation finds how much each weight and bias needs to change to reduce error. Then an optimizer algorithm makes those changes. Gradient Descent is the most common optimizer. It works in steps:

- **Calculate Gradients:** Backpropagation shows the error slope for each weight and bias.

- **Take a Step:** Gradient Descent moves a small step in the opposite direction of the slope. The slope points uphill. We want to go downhill to lessen the loss. A learning rate sets the step size.

- **Repeat:** This whole process repeats many times for the entire training data set. Each cycle through the data set is an epoch.

Over many epochs, the network keeps changing its weights and biases. It slowly moves down the error slope. It settles on weights and biases that make the loss small, though not always the smallest possible. At this point, the network has learned patterns in the training data. If training went well, it can make good predictions on new, unseen data.
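
The full loop of predict, measure, and adjust can be sketched for one neuron. This is a toy example under several assumptions: four made-up data points, a sigmoid neuron, squared error loss, a learning rate of 0.5, and 200 epochs. Real training uses far more data and parameters.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy data: the label is 1 when the input is positive (hypothetical).
data = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]

def mean_loss(w, b):
    return sum((sigmoid(w * x + b) - y) ** 2 for x, y in data) / len(data)

w, b = 0.0, 0.0          # starting point before any learning
learning_rate = 0.5      # step size
history = []

for epoch in range(200):
    grad_w = grad_b = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)                   # forward pass
        common = 2.0 * (p - y) * p * (1.0 - p)   # chain rule, shared part
        grad_w += common * x / len(data)
        grad_b += common / len(data)
    w -= learning_rate * grad_w                  # step against the slope
    b -= learning_rate * grad_b
    history.append(mean_loss(w, b))

print(history[0], history[-1])  # the loss is much lower after training
```

A learning rate that is too large can overshoot the low point. One that is too small makes learning very slow.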

This repeating process of prediction, error measurement, error tracing, and adjustment is how neural networks learn.

Neural Network Types

All neural networks have main parts. These include neurons, layers, weights, and activation functions. But they come in many forms. Each form is for different tasks.

Feedforward Neural Networks (FNNs): The Basic Kind

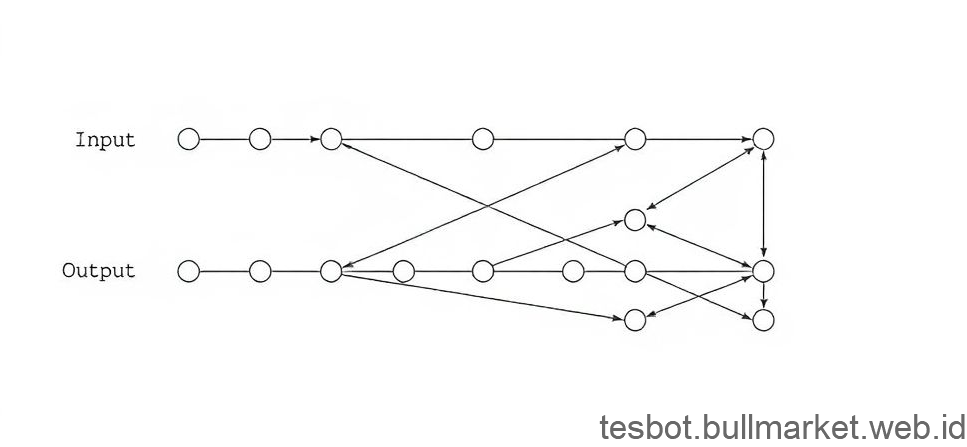

People also call them Multi-Layer Perceptrons (MLPs). FNNs are the simplest and first type of artificial neural networks. In an FNN, data moves in only one direction. It goes from the input layer, through hidden layers, and out to the output layer. The network has no loops.

- **Use:** Good for classifying data, like detecting spam or predicting customer churn. They are also good for predicting numbers, like house prices. They are basic. People often use them as building blocks for more complex networks. They fit data that has no special layout or time order.

- **Example:** A simple assembly line. Products move one way, step-by-step, until done.

Convolutional Neural Networks (CNNs): Seeing Images

CNNs are a special neural network type. They are mainly for data with a grid shape, like images. They are very good at image recognition and finding objects. They also handle other computer vision tasks.

- **Key Ideas:**

  - **Convolutional Layers:** Neurons do not connect to every neuron in the previous layer. Instead, CNNs use filters. These filters scan small parts of the image. Each filter learns to find features like edges or shapes.

  - **Pooling Layers:** These layers reduce data size. They simplify the data. This makes the network more robust to small shifts or changes in the input.

  - **Fully Connected Layers:** After some convolutional and pooling layers, the data is flattened. It then goes into normal feedforward layers for final classification.

- **Use:** Changed computer vision. They work in facial recognition and self-driving cars. They help in medical image analysis, like finding tumors. They also tag images. They can learn features automatically from raw image data.

- **Example:** A detective looks for clues in parts of a crime scene. Then the detective puts clues together to find the culprit.
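
The convolutional and pooling layers described above can be sketched in plain Python. The 6×6 image and the vertical-edge filter below are hand-written for illustration. In a real CNN, the filter values are learned during training. Like most deep learning libraries, this sketch slides the filter without flipping it.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    k = len(kernel)
    out_rows = len(image) - k + 1
    out_cols = len(image[0]) - k + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

def max_pool(feature_map, size=2):
    """Keep only the largest value in each size-by-size window."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[r + i][c + j] for i in range(size) for j in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled

# Dark on the left (0), bright on the right (1): a vertical edge in the middle.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hypothetical filter that responds where brightness rises from left to right.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, kernel)
pooled = max_pool(feature_map)
print(feature_map)  # [[0, 3, 3, 0], ...] high values mark the edge
print(pooled)       # [[3, 3], [3, 3]]
```

The feature map is zero where the image is flat and high where the edge sits. Pooling then shrinks the 4×4 map to 2×2 while keeping the strongest responses.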

Recurrent Neural Networks (RNNs): Remembering Sequences

RNNs process sequential data. The order of data matters here. Unlike FNNs, RNNs have memory. They can use past data to affect the current output. Internal loops make this possible. They let information persist from one step to the next.

- **Key Idea:**

  - **Hidden State (Memory):** At each step, a neuron in an RNN gets the current input. It also gets its own output from the previous step. This lets the network remember past data in the sequence.

- **Limits:** Simple RNNs struggle to remember data from much earlier in a sequence. This led to better RNNs, like Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs).

- **Use:** Good for natural language processing (NLP). They work in speech recognition, machine translation, and time-series prediction, like stock prices.

- **Example:** Reading a sentence word by word. To know the current word, you need to remember past words.
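
The hidden state idea can be shown with a single recurrent neuron. This is a minimal sketch, assuming scalar inputs, a tanh activation, and fixed made-up weights. Real RNNs use vectors and learned weights.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """New hidden state from the current input and the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence one step at a time, carrying the hidden state along."""
    h = 0.0  # empty memory at the start
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# A signal appears only at the first step, then the input goes quiet.
print(run_rnn([1.0, 0.0, 0.0]))
# With no signal at all, the hidden state stays at zero.
print(run_rnn([0.0, 0.0, 0.0]))
```

In the first run, the hidden state stays above zero after the signal ends, so the neuron still remembers it. The value also shrinks at each step. This fading is a small picture of why simple RNNs struggle with long sequences.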

Transformers: New Language Models

RNNs were good for sequences. But Transformers have replaced them in many NLP tasks since 2017. Transformers use an attention mechanism. This lets them weigh the importance of different input parts when predicting. They do not process data step by step as RNNs do. This allows more parallel processing. It also helps them handle long sequences.

- **Key Idea:**

  - **Attention Mechanism:** This lets the network focus on specific input parts. These are the parts most relevant to the task, wherever they appear in the input. For example, when translating a sentence, it can focus on the most important words in the source sentence for each word it makes.

- **Use:** Powers large language models, like GPT-3 and BERT. They are very good at machine translation and text generation. They also summarize text and answer questions. Their ability to capture long-range links between words is a major advance.

- **Example:** Instead of reading a book word by word, a Transformer quickly scans many parts of the book. It focuses on the most important sentences for a question, no matter where they appear.
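
The attention idea can be sketched with small vectors. The code below assumes scaled dot-product attention, the form commonly used in Transformers, for a single query. The keys, values, and query are made-up numbers. Real models learn them from data and work with many queries at once.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return output, weights

# Three input positions, each with a key and a value (hypothetical numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

output, weights = attention(query, keys, values)
print(weights)  # positions whose keys match the query get more weight
print(output)   # a blend of the values, weighted by attention
```

Every position is scored in the same pass. Nothing here depends on reading the positions in order, which is what allows the parallel processing mentioned above.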

Here is a quick look at some neural network types:

| Feature | Feedforward NN (FNN/MLP) | Convolutional NN (CNN) | Recurrent NN (RNN) | Transformer |
|---|---|---|---|---|
| Data Type | Table data, general numbers | Images, videos, grid data | Sequence data (text, time-series, audio) | Sequence data (mainly text, long sequences) |
| Data Flow | One way, no memory | One way, local receptive fields | Step by step, internal memory of past steps | Not step by step, parallel work with attention |
| Main Method | Layers of linked neurons | Convolutional filters, pooling | Recurrent links, hidden state | Self-attention mechanism |
| Strength | Simple classification/prediction, basic | Image recognition, object detection, computer vision | Natural Language Processing (older), time-series analysis | Top Natural Language Processing, large language models, long-range links |
| Common Use | Spam detection, simple predictions | Facial recognition, medical images, self-driving cars | Machine translation (older), speech recognition | Chatbots, content generation, translation, summaries |

Knowing these different forms helps show how useful and specialized neural networks are. They solve many AI problems.

Neural Networks Today: Real Uses

Neural networks are no longer only in research labs. They are now part of our daily lives. They often work unseen. They make our experiences better. They solve difficult problems. Here are some real-world uses:

Image Recognition and Computer Vision

This area shows some of the best results for neural networks, especially CNNs.

- **Facial Recognition:** Used in phone unlock systems. It works in security cameras and social media for tagging friends.

- **Object Detection:** Helps self-driving cars find pedestrians, other cars, road signs, and obstacles. It also works in stores for tracking items and for surveillance.

- **Medical Imaging:** Helps doctors find illnesses. Examples are cancer, tumors, and eye problems. Networks find these from X-rays, MRIs, and CT scans. In some tasks they do this faster than humans.

- **Content Moderation:** It finds and flags harmful content on social media.

Natural Language Processing (NLP)

Neural networks, especially RNNs, LSTMs, and now Transformers, have changed how machines understand human language.

- **Voice Assistants:** Siri, Alexa, and Google Assistant all use neural networks. They recognize speech and understand natural language.

- **Machine Translation:** Google Translate and similar tools use neural networks. They give better translations between languages.

- **Chatbots and Virtual Agents:** Customer service chatbots and AI helpers use neural networks. They understand user questions. They make clear answers.

- **Sentiment Analysis:** Companies use neural networks. They check public opinion about products from social media posts and reviews.

- **Text Generation:** They create human-like text for articles, ads, stories, and code.

Recommendation Systems

Have you wondered how Netflix knows what movie you will like next? Or how Amazon suggests things you might buy? Neural networks are the main part of these systems.

- **Online Sales:** They make product suggestions personal. They use browsing history, past buys, and actions of similar users.

- **Streaming Services:** They suggest movies, TV shows, and music that match your taste.

- **Social Media:** They set up your feed. It shows content most important and interesting to you.

Predictive Analysis

Neural networks are good at finding patterns in large data sets. They use these patterns to make future predictions.

- **Financial Trading:** They are used to forecast stock prices and market changes. They also detect fraudulent transactions.

- **Weather Forecasting:** They make weather models more correct. They do this by checking complex air data.

- **Health Care:** They predict illness outbreaks. They find at-risk patients. They also make treatment plans better.

- **Energy Management:** They predict energy needs. They also help power grids run more efficiently.

Autonomous Vehicles

Self-driving cars are one of the most complex uses for neural networks. They need many AI methods working together.

- **Seeing:** They find lane lines, traffic lights, pedestrians, and other cars. They use cameras, lidar, and radar.

- **Deciding:** They predict what other road users will do. They plan safe paths.

- **Control:** They make driving moves smooth.

Health Care and Drug Discovery

Beyond medical imaging, neural networks make progress in other health areas:

- **Drug Discovery:** They speed up the search for new drug compounds. They predict how well these compounds will work.

- **Personal Health Care:** They check patient data. This helps tailor treatments. They predict how each person will respond to medicine.

- **Illness Diagnosis:** They help in early and correct diagnosis of various problems. They do this by checking symptoms and medical records.

These examples are only a small part of neural networks’ many uses. Their ability to learn from data and find complex patterns makes them very useful tools in almost every area. They drive new ideas and make things work better.

Neural Network Limits and Problems

Neural networks have great abilities. But they are not perfect. They have their own problems. Researchers are working to fix these.

Data Dependence

- **Need for Data:** Neural networks, especially deep ones, need a lot of data. They need huge amounts of labeled data to train well. Getting, cleaning, and labeling this data costs a lot. It takes much time and many resources.

- **Bad In, Bad Out:** If the training data is poor, incomplete, or has biases, the network will learn those flaws. It will then make wrong or biased outputs.

Computer Resources

- **High Need:** Training large and deep neural networks needs much computer power. This often means special hardware like GPUs. This can be a big cost for many groups.

- **Energy Use:** The energy for training and running these large models creates a big carbon footprint. This raises environmental worries.

Black Box Problem (Understanding)

- **No Clarity:** A big problem is that deep neural networks are often like black boxes. They can be very correct. But it is often hard to know why they made a certain choice. It is hard to follow the exact logic through millions of linked weights.

- **Trust and Responsibility:** This lack of understanding is a problem in important uses. These include health care (diagnosing illnesses) or legal systems (predicting repeat offenses). Knowing why is key for trust, responsibility, and fixing errors.

Bias and Fairness Issues

- **Learned Bias:** Training data might show existing biases in society. Examples are past unfair treatment or too few people from certain groups. The neural network will learn these biases. It will keep them going. This can lead to unfair results. For instance, facial recognition might work poorly on some groups. Loan systems might favor certain groups.

- **Ethics:** Fixing bias and making AI systems fair is both an ethical and a technical problem. It needs careful data selection, bias checks, and strong model testing.

Generalization Problems (Overfitting)

- **Overfitting:** A common problem. A network learns the training data too well. It memorizes the noise and specific quirks of that data. It does not learn the real patterns. When it sees new data, an overfit model works poorly. It has not learned to generalize.

- **Underfitting:** This happens when a model is too simple. Or it has not been trained enough. It fails to capture the data’s complex parts. Both lead to poor work on real tasks. People use methods like regularization and cross-validation to help with these issues.
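
Regularization, mentioned above, can take several forms. The sketch below assumes one common form, an L2 penalty, which adds the sum of squared weights to the loss. The penalty strength of 0.01 and the weight values are made up for illustration.

```python
def l2_penalty(weights, strength):
    """Penalty that grows with the size of the weights."""
    return strength * sum(w * w for w in weights)

def regularized_loss(data_loss, weights, strength=0.01):
    """Total loss: how wrong the predictions are, plus the weight penalty."""
    return data_loss + l2_penalty(weights, strength)

# Two hypothetical models with the same error on the training data.
small_weights = regularized_loss(0.10, [0.5, -0.3, 0.2])
large_weights = regularized_loss(0.10, [5.0, -3.0, 2.0])

print(round(small_weights, 4))  # 0.1038
print(round(large_weights, 4))  # 0.48
```

Both models fit the training data equally well, but the one with large weights gets a worse total score. This nudges training toward simpler models, which tend to overfit less.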

Adversarial Attacks

- **Easy to Fool:** Neural networks can be open to adversarial attacks. An attacker adds small changes to input data, such as an image. Humans often cannot see these changes. Yet they can make the network guess wrong. A self-driving car’s vision system could be tricked. A stop sign might be read as a speed limit sign due to small, carefully placed noise. This is a big security worry for important uses.

These limits show that neural networks are strong, but not perfect. Current research works to make them stronger, clearer, fairer, and work better.

Neural Networks: What Comes Next?

The field of neural networks changes very fast. New discoveries happen often. They push what AI can do. Here are some main trends and future paths:

Explainable AI (XAI)

Neural networks are used more and more in important areas. So, people want more clarity and understanding. Explainable AI (XAI) is a new field. It builds ways to help humans understand AI model choices. It helps humans trust AI outputs.

- **Goal:** To move past the black box problem. It wants to show *why* a neural network made a choice, not just *what* it chose.

- **Effect:** Very important for use in areas like health care, money, and law. It also helps find and fix biases.

Edge AI (AI on Devices)

Many strong AI models now run in big data centers. Edge AI focuses on running AI models directly on local devices. These include phones, smart cameras, and IoT devices. Data does not go to the cloud for processing.

- **Benefits:** Better privacy, because data stays on the device. Faster responses. Less network use. Better reliability, because it relies less on network links.

- **Problem:** Making strong neural networks work well on devices with limited computing and battery power.

- **Effect:** Makes AI experiences smarter, faster, and more private in daily gadgets.

Generative AI (Creative AI)

Generative AI means neural networks that can make new, original content. They do not just check or sort old data. This is a very exciting and fast-growing area.

- **Examples:**

  - **Text Generation:** Creating human-like articles, stories, code, and dialogue.

  - **Image Generation:** Creating realistic or artistic images from text prompts.

  - **Music Generation:** Composing new music.

  - **Video Generation:** Creating realistic video clips.

- **Effect:** Changing creative work and content creation. It also affects scientific research, like designing new protein structures.

Hybrid Models and Neuro-Symbolic AI

Neural networks are good at finding patterns. But they often have trouble with logic and common sense. Humans have these skills. Hybrid models aim to mix neural network strengths with classic symbolic AI methods. These include rule-based systems.

- **Goal:** To make AI systems that learn from data. They also reason, understand context, and explain choices in a more human way.

- **Effect:** Could lead to stronger, clearer, and more general AI systems. They can bridge the gap between pattern finding and logic.

Neuromorphic Computing

Neuromorphic computing takes ideas directly from the human brain. It is a new field. It designs computer chips and systems. These copy the structure of brain neural networks more closely than old computer designs.

- **Goal:** To build very energy-efficient AI hardware. This hardware can process many signals in parallel. It can work based on events, like the brain.

- **Effect:** May open up new levels of AI work. This is true for fast, low-power uses.

The future of neural networks promises smarter, more useful, and smoothly linked AI systems. They will bring us personal helpers that truly understand our needs. They will also help solve big human problems. These networks will stay at the front of new technology. Knowing about these changes will help you be ready for the AI world of tomorrow.

Conclusion

We explored neural networks. We broke down their inner workings into clear ideas. We learned that neural networks are computer models. They get ideas from the human brain. They find patterns from large amounts of data. Layers of linked neurons build them. These neurons process data. They adjust weights and biases. They do this through processes like forward propagation, backpropagation, and optimization. They do this to reduce prediction errors.

We saw foundational Feedforward Neural Networks. We looked at Convolutional Neural Networks for images. We discussed Recurrent Neural Networks that remember sequences. And we covered the new Attention-based Transformers. Each network type fits specific tasks. These strong tools are not just ideas. They are active in shaping our world. They power facial recognition on phones. They make suggestions on streaming services. They help with medical checks. They guide self-driving cars.

We also saw their limits. These include needing much data. They need much computer power. There is the black box problem, and the issue of bias. But the future of neural networks looks very good. Ongoing research works toward clearer, faster, more creative, and stronger AI systems.

Understanding neural networks is not just for computer scientists anymore. It is becoming key for anyone curious about what shapes our future. The more we grasp these basic ideas, the better we can use and check the AI systems that are more and more a part of our lives.

Start by trying an AI tool today. See how it works. Think about the neural networks working beneath its surface. Your journey into understanding AI has just started!
