The idea of artificial beings is old. Long before computers existed, people imagined automatons and thinking machines. Ancient stories speak of statues coming to life, and myths featured mechanical servants. These tales show a long-standing human desire to create intelligence, and they set the stage for later AI work.

Contents

- 0.1 Early Automatons and Mythological Concepts

- 0.2 Early Logical Reasoning and Mathematical Foundations

- 1 Birth of a Field: The Mid-20th Century Foundations

- 2 Hype, Hope, and AI Winters: Challenges and Retrenchment

- 3 The Resurgence: Machine Learning and Data-Driven AI

- 4 The Deep Learning Revolution: Modern AI’s Breakthroughs

- 5 AI in the Modern World: Integration and Impact

- 6 The Future Horizon: Opportunities and Ethical Considerations

- 7 Conclusion

Early Automatons and Mythological Concepts

Ancient Greek myths tell of Talos, a giant bronze automaton who guarded the island of Crete and defended its people. He moved and acted on his own. As a manufactured protector, he reflects early ideas of artificial life.

The Greek inventor Hero of Alexandria built many machines. He created automatic doors and figures that moved. These were not truly intelligent. Still, they were early examples of mechanical automation. They sparked thoughts about non-living things performing tasks.

In later centuries, craftsmen built complex clocks and moving figures for entertainment. These machines mimicked human actions: they danced, played music, or wrote words. They showed technical skill. They also hinted that a machine could imitate behavior.

Early Logical Reasoning and Mathematical Foundations

Logic and mathematics also played a role. Thinkers developed rules for clear thought. Aristotle’s syllogisms are an example. These provided a system for reasoning. They gave a structure for conclusions.

Later, mathematicians explored computation. Gottfried Wilhelm Leibniz imagined a calculus ratiocinator, a method for settling arguments by calculation. He also envisioned a universal language for reasoning. Both ideas showed a desire to automate thought. In the 19th century, George Boole developed Boolean logic, a system that works with true/false values. It became a basis for computer operations. These steps laid abstract groundwork for AI’s logical side.
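To make the idea of reasoning with true/false values concrete, here is a minimal Python sketch. It checks two logical laws by trying every combination of truth values. The helper name `implies` and the variable names are chosen for illustration and do not come from any historical system.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material implication: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q

# De Morgan's law: not (p and q) equals (not p) or (not q) for every truth assignment.
de_morgan_holds = all(
    (not (p and q)) == ((not p) or (not q))
    for p, q in product([False, True], repeat=2)
)

# A syllogism-style rule: if p implies q, and q implies r, then p implies r.
syllogism_holds = all(
    implies(implies(p, q) and implies(q, r), implies(p, r))
    for p, q, r in product([False, True], repeat=3)
)

print(de_morgan_holds, syllogism_holds)
```

Because there are only finitely many true/false combinations, a machine can verify such rules by exhaustive checking. That is the sense in which logic became something a computer could operate on.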

Birth of a Field: The Mid-20th Century Foundations

The mid-20th century saw AI come to life. New ideas and machines made this possible. Electronic computers began to appear. These machines could process information fast. Thinkers started to consider machine intelligence seriously.

Alan Turing and the Dawn of Computing

Alan Turing was a British mathematician. In his 1950 paper “Computing Machinery and Intelligence,” he asked a big question: can machines think? He proposed the Imitation Game, which we now call the Turing Test. A human judge holds a text conversation with a hidden computer and a hidden human. If the judge cannot reliably tell which is the computer, the machine passes. This test offered a way to assess machine intelligence and gave AI researchers a goal. Turing’s earlier work on computation also shaped early computers, and his ideas remain central to computer science.

The Dartmouth Workshop and the Coining of AI

The summer of 1956 was a turning point. John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon organized a workshop at Dartmouth College. Leading researchers gathered to discuss thinking machines. McCarthy had introduced a new term in the 1955 proposal for the workshop: artificial intelligence. The workshop is widely seen as AI’s birth. It gave the field its name and set its early goals. Researchers aimed to make machines that could learn, solve problems, and understand language.

Early AI Programs and Symbolic AI

Around the time of Dartmouth, early AI programs emerged. These programs took a symbolic approach: they used symbols and rules to represent knowledge, and they mimicked human reasoning steps. The Logic Theorist was one such program. Allen Newell, Herbert Simon, and Cliff Shaw built it in 1956. It proved theorems from Whitehead and Russell’s Principia Mathematica. It showed that machines could perform complex logical tasks.

Another notable program was ELIZA, a chatbot that Joseph Weizenbaum created in 1966. It simulated human conversation using simple pattern matching, reflecting user input back as questions. Many users felt that ELIZA truly understood them. This showed the power of simple rules. It also revealed how easily humans project understanding onto machines.
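The pattern-matching idea behind ELIZA can be sketched in a few lines of Python. This is an illustration only: the rules, the `REFLECTIONS` table, and the function names are hypothetical and are not taken from Weizenbaum's original script.

```python
import re

# Hypothetical rules for illustration: a pattern to look for and a reply template.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones so the echoed phrase reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}

def reflect(phrase: str) -> str:
    """Replace each first-person word in the phrase with its second-person form."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in phrase.split())

def respond(user_input: str) -> str:
    """Return the reply for the first matching rule, or a generic prompt."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."

print(respond("I feel sad about my job."))  # Why do you feel sad about your job?
```

The program has no model of what "sad" or "job" means. It only rearranges the user's own words, which is why its apparent understanding is an illusion.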

The General Problem Solver (GPS) was another early effort, also built by Newell, Simon, and Shaw. GPS aimed to be a general-purpose solver for problems that could be stated formally. It used a method called means-ends analysis: find the difference between the current state and the goal state, then choose an action that reduces it. These early programs showed promise. They laid foundations for later AI work.
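Means-ends analysis can be sketched as a small recursive planner. The toy domain, the `Operator` type, and the `achieve` function below are assumptions made for illustration. They are not the original GPS code, and the sketch ignores cases where achieving one subgoal undoes another.

```python
from typing import NamedTuple

class Operator(NamedTuple):
    name: str
    preconditions: frozenset  # facts that must hold before the action
    adds: frozenset           # facts the action makes true
    deletes: frozenset        # facts the action makes false

# Hypothetical toy domain: getting to a shop when the car is broken.
OPERATORS = [
    Operator("drive to shop", frozenset({"car works"}),
             frozenset({"at shop"}), frozenset({"at home"})),
    Operator("repair car", frozenset({"have money"}),
             frozenset({"car works"}), frozenset({"have money"})),
]

def achieve(state: frozenset, goal: str, depth: int = 0):
    """Return (new_state, steps) in which `goal` holds, or None if no plan is found."""
    if goal in state:
        return state, []              # no difference left to reduce
    if depth > 10:
        return None                   # guard against endless recursion
    for op in OPERATORS:
        if goal not in op.adds:
            continue                  # this operator does not reduce the difference
        current, steps = state, []
        for pre in sorted(op.preconditions):
            result = achieve(current, pre, depth + 1)  # subgoal: make the operator applicable
            if result is None:
                break
            current, sub_steps = result
            steps += sub_steps
        else:
            if op.preconditions <= current:
                return (current - op.deletes) | op.adds, steps + [op.name]
    return None

start = frozenset({"at home", "have money"})
final_state, plan = achieve(start, "at shop")
print(plan)  # ['repair car', 'drive to shop']
```

The planner works backward from the goal: the difference "not at shop" selects the driving operator, whose unmet precondition then becomes a new subgoal.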

Hype, Hope, and AI Winters: Challenges and Retrenchment

AI’s early success led to high hopes. Many people expected rapid progress. But the reality was harder than expected. AI faced major technical limits. This led to periods of reduced funding and interest. These are known as “AI winters.”

Overpromising and Underdelivery

Early AI researchers made bold claims. They predicted machines would soon act like humans. Some even thought intelligent machines would appear within a decade. These predictions did not come true. Computers lacked the processing power needed. Data was scarce. Researchers also did not fully understand human intelligence. These gaps led to disappointment. Expectations became too high. The actual progress seemed slow in comparison.

Expert Systems and Their Limitations

In the 1970s and 1980s, expert systems became a major focus. These programs captured human expert knowledge as rules and solved problems in specific areas. MYCIN was an early example. It was designed to help doctors identify blood infections and to recommend treatments. Such systems performed well in narrow domains, including medicine, geology, and finance. However, they had limits. Human experts had to supply every rule. The systems did not learn new rules on their own and could not adapt outside their specific field. They were also expensive to build and did not scale well.
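A rule-based system of this kind can be sketched as a loop that keeps applying if-then rules until nothing new can be concluded, a strategy known as forward chaining. The rules below are a hypothetical car-troubleshooting toy. They are not MYCIN's rules, and MYCIN's own inference method was more elaborate than this sketch.

```python
# Each rule pairs a set of required facts with one conclusion.
# Hypothetical rules for illustration only.
RULES = [
    ({"engine will not start", "lights are dim"}, "battery is weak"),
    ({"battery is weak"}, "recommend: charge or replace battery"),
    ({"engine will not start", "fuel gauge empty"}, "recommend: refuel"),
]

def forward_chain(facts):
    """Apply the rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

result = forward_chain({"engine will not start", "lights are dim"})
print(sorted(result))
```

The sketch also shows the limits described above. Every rule is written by hand, and an input that no rule anticipates produces no conclusion at all.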

Funding Cuts and Public Disillusionment

The bold promises of earlier years went unmet, and this caused a loss of trust. Funding for AI research fell sharply in the mid-1970s, a period now called the first AI winter. The limitations of expert systems became clear in the late 1980s, and a second AI winter followed. In both periods, governments and companies reduced their investments. Researchers had fewer resources, and progress slowed. The public also lost interest. AI seemed stuck. It had not delivered on its grand visions.

The Resurgence: Machine Learning and Data-Driven AI

AI saw a new dawn in the late 1990s and early 2000s. A shift occurred. Researchers moved from symbolic AI to data-driven methods. Machine learning became central. This new focus brought new progress. Three factors drove this change: more data, faster computers, and new algorithms.

Probabilistic Approaches and Neural Network Revival

Earlier AI relied on rigid rules. New methods embraced uncertainty by using probability. Bayesian networks became popular. These networks model how uncertain variables depend on one another, and they update probabilities as evidence arrives. This helped AI systems make decisions with incomplete information. Neural networks also saw a revival. These networks are loosely inspired by the brain’s structure. They had existed for decades, but now better training methods and more computing power made them practical. Researchers like Geoffrey Hinton made advances. They showed neural networks could learn complex patterns. This set the stage for later breakthroughs.
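The core calculation in probabilistic reasoning is Bayes' rule. The sketch below applies it to the smallest possible network, one hypothesis with one piece of evidence. The function name and all the numbers are made up for illustration.

```python
def posterior(prior: float, likelihood_if_true: float, likelihood_if_false: float) -> float:
    """Return P(H | E) by Bayes' rule for a two-node network H -> E."""
    # Total probability of seeing the evidence, whether or not H is true.
    evidence = likelihood_if_true * prior + likelihood_if_false * (1.0 - prior)
    return likelihood_if_true * prior / evidence

# Hypothetical numbers: 20% of messages are spam, a given word appears
# in 60% of spam messages and in 5% of legitimate ones.
p_spam_given_word = posterior(prior=0.2, likelihood_if_true=0.6, likelihood_if_false=0.05)
print(round(p_spam_given_word, 2))  # 0.75
```

Seeing the word raises the estimated probability of spam from 20% to 75% under these assumed numbers. The system never needs a hard rule such as "this word always means spam."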

The Rise of Big Data and Increased Computational Power

The internet brought a flood of data. Companies gathered vast amounts of information. This “big data” included text, images, and user actions. Machine learning needs a lot of data, and more data meant better learning. Computers also became much faster. Graphics Processing Units (GPUs) grew powerful. GPUs can perform many calculations at once, which sped up neural network training. These two factors together changed the game. They gave machine learning the fuel it needed.

Significant Advances in Traditional Machine Learning

Beyond neural networks, other machine learning methods improved. Support Vector Machines (SVMs) became popular. They work well for classification tasks. Decision trees and random forests also saw use. These methods found patterns in data. They helped with many tasks. Spam filters use these ideas. Recommendation systems also apply them. AI began to offer practical benefits. It moved beyond labs into everyday software.

| Year | Event/Development | Significance |
|---|---|---|
| 1950 | Turing Test Proposed | Defined a benchmark for machine intelligence. |
| 1956 | Dartmouth Workshop | Coined the term Artificial Intelligence, marking the field’s birth. |
| 1966 | ELIZA Chatbot | One of the first chatbots, simulating human conversation. |
| 1997 | Deep Blue defeats Garry Kasparov | Demonstrated computers could beat human champions in complex games. |
| 2012 | ImageNet Challenge (AlexNet) | Catalyzed the deep learning revolution in image recognition. |
| 2016 | AlphaGo defeats Lee Sedol | Showcased deep reinforcement learning’s power in complex strategy games. |
| 2017 | Transformer Architecture | Introduced a new model for natural language processing, changing the field. |
| 2022 | ChatGPT Release | Brought advanced conversational AI to a wide public audience. |

The Deep Learning Revolution: Modern AI’s Breakthroughs

The early 2010s marked a major shift. Deep learning took center stage. Deep learning uses very large neural networks. These networks have many layers. They can find complex patterns in vast datasets. This led to breakthroughs in many areas. Computers began to see, hear, and understand better.

Convolutional Neural Networks (CNNs) and Image Recognition

Image recognition saw huge progress, and Convolutional Neural Networks (CNNs) were key. Yann LeCun developed early CNNs in the late 1980s. In 2012, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton developed AlexNet. AlexNet won the ImageNet Large Scale Visual Recognition Challenge with a much lower error rate than its competitors. This event showed deep learning’s power. CNNs became the standard for image tasks. They power facial recognition systems. They also help self-driving cars “see” the road. They changed how computers interpret visuals.
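The basic operation inside a CNN is sliding a small grid of weights, called a kernel, across an image. The sketch below shows that operation in plain Python under simplifying assumptions: one channel, no padding, and a stride of 1. The tiny image and the hand-written edge-detecting kernel are made up for illustration. In a real CNN the kernel values are learned from data.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A tiny image whose right half is bright (1) and left half is dark (0).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A kernel that responds where brightness increases from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The output is non-zero only in the column where the dark region meets the bright one, so this kernel acts as a vertical-edge detector. Stacking many such learned filters in layers is what lets a CNN build up from edges to shapes to objects.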

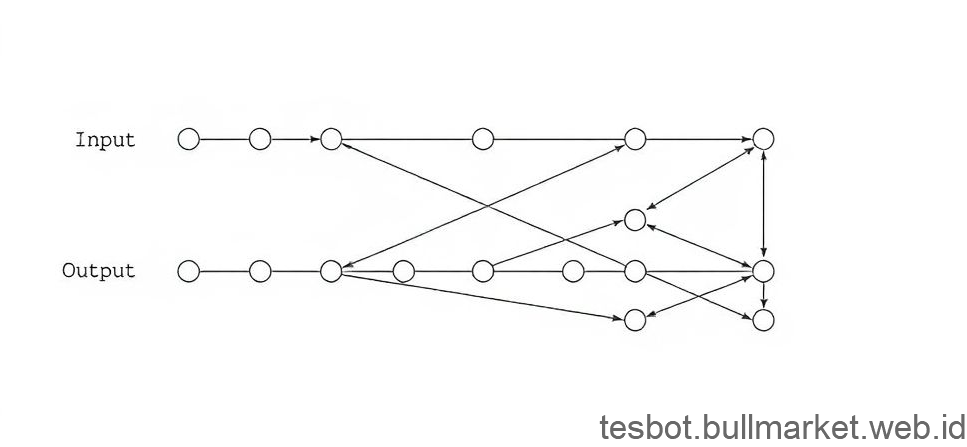

Recurrent Neural Networks (RNNs) and Natural Language Processing (NLP)

Understanding human language also improved. Recurrent Neural Networks (RNNs) played a role here. RNNs process sequences of data one step at a time, so they work well with text and speech. They keep a memory of past inputs, which helps them understand context. They found use in speech recognition and machine translation. RNNs allowed computers to process whole sentences and begin to grasp the flow of language. This opened doors for better human-computer interaction.
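The memory of an RNN comes from a hidden state that is updated at every step and fed back in at the next one. The sketch below uses a single scalar hidden unit so that the mechanism is easy to follow. The weight values are arbitrary assumptions for illustration, whereas a real network learns them.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit recurrent network over a sequence and return every hidden state."""
    h = 0.0  # hidden state: starts empty and carries information forward
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# A single "1" followed by zeros: the later states stay non-zero,
# showing that the network still remembers the first input.
states = rnn_forward([1.0, 0.0, 0.0])
print(states)
```

The influence of the first input shrinks at each step. That fading memory is one reason simple RNNs struggle with long-range context.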

Generative AI and Transformer Models

A new wave of AI gathered pace after 2017: generative AI, which creates new content such as text, images, or audio. Transformer models are at its heart. These models use a mechanism called attention to handle long-range dependencies in data. Researchers from Google introduced the Transformer in the 2017 paper “Attention Is All You Need.”
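The attention operation that gives the Transformer paper its title can be sketched without any libraries. The version below is a simplified illustration with a single head, no learned projections, and no masking. The function names and the toy vectors are assumptions, not code from the paper.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each query returns a weighted mix of the values."""
    d_k = len(keys[0])
    output = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        output.append([
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return output

# Toy example: the query matches the first key, so the output leans toward the first value.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
mixed = attention([[1.0, 0.0]], keys, values)
print(mixed)
```

Every position can look at every other position in one step. This is how the model relates words that are far apart without passing information along a chain, as an RNN must.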

This architecture improved natural language processing greatly. Models like Generative Pre-trained Transformers (GPT) use this design. OpenAI built GPT models. They can write text. They answer questions. They summarize documents. DALL-E is another example. It creates images from text descriptions. Midjourney and Stable Diffusion also create images. These tools show AI’s creative side. They allow new forms of human-AI collaboration. They are changing content creation.

AI in the Modern World: Integration and Impact

AI is no longer just a research topic. It is part of daily life, and it supports many industries. We sometimes use AI without even knowing it. It helps us find information and make choices. It also helps systems run better.

Everyday Applications

Voice assistants are common. Siri, Alexa, and Google Assistant use AI. They understand speech. They answer questions. They control smart devices. Recommendation systems are also AI-driven. Netflix suggests movies. Amazon suggests products. These systems learn preferences. They provide personalized choices. Spam filters use AI. They stop unwanted emails. Navigation apps use AI to find routes. They predict traffic. Many phones use AI for better photos. AI cleans up images. It makes faces look sharper. These tools make life easier.

AI in Industry

AI helps many sectors. In healthcare, AI assists with diagnosis. It analyzes medical images. It helps find diseases early. It aids in drug discovery. Financial institutions use AI for fraud detection. AI spots unusual transactions. It protects money. It also helps with stock trading. Autonomous vehicles use AI to drive. They sense their surroundings. They make driving decisions. In manufacturing, AI optimizes production. It predicts machine failures. It improves efficiency. AI helps agriculture too. It monitors crop health. It suggests watering schedules. AI is a tool for progress in many fields.

The Future Horizon: Opportunities and Ethical Considerations

AI keeps growing fast. What comes next? Researchers aim for more advanced AI. We also need to think about how AI impacts people. Responsible use is very important.

Exploring Artificial General Intelligence (AGI) and Beyond

Current AI performs specific tasks. It is narrow AI. For example, one AI plays chess. Another recognizes faces. Artificial General Intelligence (AGI) is a different goal. AGI would perform any intellectual task a human can. It would learn across many domains. It would adapt to new situations. This is a long-term goal. Achieving AGI would open new possibilities. It would change how we live and work. Some researchers also talk about Artificial Superintelligence. This would be AI far smarter than any human. These ideas are far off. They spark deep thought about the limits of intelligence.

The Societal Implications and Need for Responsible AI

AI’s growing power raises questions. We must consider its effects on jobs. We must think about privacy. How does AI use our data? We need to prevent bias in AI systems. AI models can reflect biases in their training data, and this can lead to unfair results. For example, a facial recognition system might work less well for certain groups. Developers must build AI fairly. Governments must make rules for AI use. Ethics should guide AI development. We must build AI that helps all people. We must ensure it respects human values. This is a big challenge. It is also a big opportunity. What do we want AI to do for us?

We want AI to serve humanity. We want it to solve hard problems. We want it to improve quality of life. Thinking about ethics helps us guide AI. It helps us make better choices. This thinking ensures AI helps society. It supports positive future outcomes.
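One simple way to check for the kind of bias described above is to measure a system's accuracy separately for each group. The sketch below does that on made-up records. The group labels, the data, and the function name are all hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Given (group, predicted, actual) records, return the accuracy for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

# Made-up evaluation records for two groups, A and B.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]
report = accuracy_by_group(records)
print(report)  # {'A': 1.0, 'B': 0.5}
```

An overall accuracy of 75% would hide the gap in this toy data. Breaking the result down by group is what reveals that the system serves one group much worse than the other.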

Conclusion

AI has come a long way. It started as old ideas about thinking machines. It grew into a complex field. From logical reasoning to deep learning, AI’s story is one of constant change. Each era brought new methods and challenges. From early chatbots to powerful image generators, AI has changed much. It impacts our daily lives. It drives innovation in many industries.

The journey continues. Researchers push the limits of what AI can do. They also consider its societal impact. Understanding AI’s past helps prepare us for its future. Explore the history further. See how deep learning works. Consider the ethical choices AI raises. Learn about responsible AI development. Stay informed about these changes.
