The idea of artificial beings is very old. Ancient myths describe mechanical beings that could think and act. Greek mythology tells of Talos, a bronze giant who guarded the island of Crete. Such stories reveal a long-standing human wish to create intelligent life, and that wish formed AI’s first root.

Philosophers also laid important groundwork. Aristotle studied logic and developed the syllogism, a pattern of reasoning that draws a conclusion from premises: all men are mortal; Socrates is a man; therefore Socrates is mortal. Syllogisms offered a formal, rule-governed way to think, an early step toward automated reasoning.

Much later, Gottfried Wilhelm Leibniz imagined a “calculus ratiocinator,” a method for carrying out reasoning by calculation. Combined with a universal formal language, it would let people settle disputes by computing the answer, processing thoughts the way arithmetic processes numbers. Leibniz’s vision of a mechanical system for logic foreshadowed computer science and AI.

Early mechanical automata also hinted at AI’s potential. Heron of Alexandria designed automatic temple doors and moving figures that operated without a human hand. In the 18th century, Jacques de Vaucanson built celebrated automatons, including a flute player and a mechanical duck that appeared to eat and digest food. These intricate machines mimicked the actions of living things. They were not truly intelligent, yet they suggested what machines might one day achieve.

Contents

- 1 The Dawn of AI: Post-War Visions and Pioneering Algorithms

- 2 The First AI Winter and Resurgence: Limitations and Expert Systems

- 3 The Second AI Winter and the Quiet Rise of Machine Learning

- 4 The Deep Learning Revolution: Big Data and Computational Power Unleashed

- 5 AI in the Modern Era: Widespread Applications and Societal Transformation

- 6 The Future of AI: Ethical Considerations, Challenges, and Emerging Frontiers

- 7 Conclusion

The Dawn of AI: Post-War Visions and Pioneering Algorithms

The mid-20th century brought electronic computers, machines that processed information at unprecedented speed and made new ideas possible. Alan Turing was a key figure. In his 1950 paper “Computing Machinery and Intelligence,” he proposed what is now called the Turing Test: a machine passes if a human judge, conversing with it through text, cannot reliably tell it apart from a person. The test gave AI a goal and a way to measure success.

The term “artificial intelligence” dates from this era. John McCarthy coined it in the proposal for the 1956 Dartmouth workshop, which he organized with Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The researchers gathered to explore how machines might simulate learning and other features of intelligence. The Dartmouth meeting is widely regarded as AI’s official birth as a field.

Early AI programs soon appeared. In 1956, Allen Newell and Herbert A. Simon, working with Cliff Shaw, created the Logic Theorist, which proved theorems from Whitehead and Russell’s Principia Mathematica using search guided by human-like rules of thumb. This was a major step. In the mid-1960s, Joseph Weizenbaum developed ELIZA, a program whose best-known script mimicked a psychotherapist. ELIZA matched keywords in the user’s typed input and turned them into scripted replies. It did not understand meaning, but it showed how people might converse with machines. These programs were basic, yet they set the stage for much future work.
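ELIZA’s apparent conversation came from pattern matching rather than understanding. The Python sketch below imitates that idea under simple assumptions. The regular-expression patterns, the pronoun-swapping table, and the fallback reply are all hypothetical. None of them is taken from Weizenbaum’s original DOCTOR script.

```python
import re
from typing import List, Tuple

# Hypothetical keyword rules in the spirit of ELIZA; not the original DOCTOR script.
# Each entry pairs a regex with a reply template; {0} receives the captured text.
PATTERNS: List[Tuple[re.Pattern, str]] = [
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmother\b", re.IGNORECASE), "Tell me more about your family."),
]

# Swap first- and second-person words so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

# Reply used when no keyword matches.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Strip trailing punctuation and swap pronouns word by word."""
    words = fragment.rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in words)


def respond(text: str) -> str:
    """Return the reply for the first matching pattern, else the fallback."""
    for pattern, template in PATTERNS:
        match = pattern.search(text)
        if match:
            # Reflect each captured fragment before inserting it into the reply.
            fragments = [reflect(group) for group in match.groups()]
            return template.format(*fragments)
    return FALLBACK


if __name__ == "__main__":
    print(respond("I feel sad about my job."))
```

For example, `respond("I feel sad about my job.")` returns "Why do you feel sad about your job?". The program only echoes transformed fragments of the input. That is how it could seem attentive without grasping meaning.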

Researchers in this period were optimistic. They predicted rapid progress and believed intelligent machines were close at hand. Many early projects received generous funding. This period of excitement laid the foundation for decades of AI research.

The First AI Winter and Resurgence: Limitations and Expert Systems

Optimism soon met reality. The bold predictions of the early years went unfulfilled, as researchers found AI problems far harder than expected. Programs that handled simple tasks well failed when problems grew complex. Governments and companies saw few practical results and cut back their investment, so funding for AI research fell. This period, lasting from the mid-1970s to the early 1980s, became known as the first AI winter.

AI researchers learned from these setbacks. They shifted focus away from general intelligence and began building rule-based expert systems. Each system captured human expertise in a specific knowledge area as a collection of if-then rules and applied those rules to make decisions. Rather than mimicking broad intelligence, these systems solved narrow problems and worked well within their defined limits.
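The if-then idea can be made concrete with a minimal forward-chaining sketch in Python. The rules and fact names (`has_feathers`, `is_penguin`, and so on) are hypothetical toy examples and do not come from any real system. Historical expert systems were far larger. MYCIN in particular reasoned backward from goals and attached certainty factors to its conclusions, and this sketch omits both features.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule pairs its required facts (the "if" part) with one conclusion (the "then" part).
Rule = Tuple[FrozenSet[str], str]

# Hypothetical toy rules for illustration; not drawn from any real expert system.
RULES: List[Rule] = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "cannot_fly", "swims"}), "is_penguin"),
    (frozenset({"gives_milk"}), "is_mammal"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Repeatedly fire rules whose conditions are all known until nothing new is derived."""
    known = set(facts)  # copy so the caller's set is not modified
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire the rule only if every condition holds and the conclusion is new.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    print(sorted(forward_chain({"has_feathers", "cannot_fly", "swims"}, RULES)))
    # → ['cannot_fly', 'has_feathers', 'is_bird', 'is_penguin', 'swims']
```

Facts derived by one rule can trigger others. Here `is_bird` enables the penguin rule. The engine keeps looping until a full pass adds nothing new.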

Expert systems brought AI back into the spotlight. MYCIN, an early example developed at Stanford in the 1970s, diagnosed bacterial blood infections and recommended antibiotic treatments. Another system, DENDRAL, inferred the structures of chemical compounds from mass spectrometry data. These systems proved useful in specific fields and showed real-world value. Companies invested heavily. Digital Equipment Corporation, for instance, used an expert system called XCON to configure orders for its VAX computers. Expert systems saw commercial success in the 1980s. They helped AI regain its footing and proved that it could offer practical tools.

Expert systems had limitations. They were hard to update, lacked common sense, and performed poorly outside their narrow domains. Yet they kept AI research alive and demonstrated AI’s potential for specific applications.

The Second AI Winter and the Quiet Rise of Machine Learning

The limitations of expert systems led to another downturn. The systems were costly to develop and maintain, struggled with complex real-world data, and could not easily learn new rules. Enthusiasm waned, and funding for AI research declined once more. This second AI winter lasted from the late 1980s into the early 1990s. Many AI companies closed, and public interest faded.

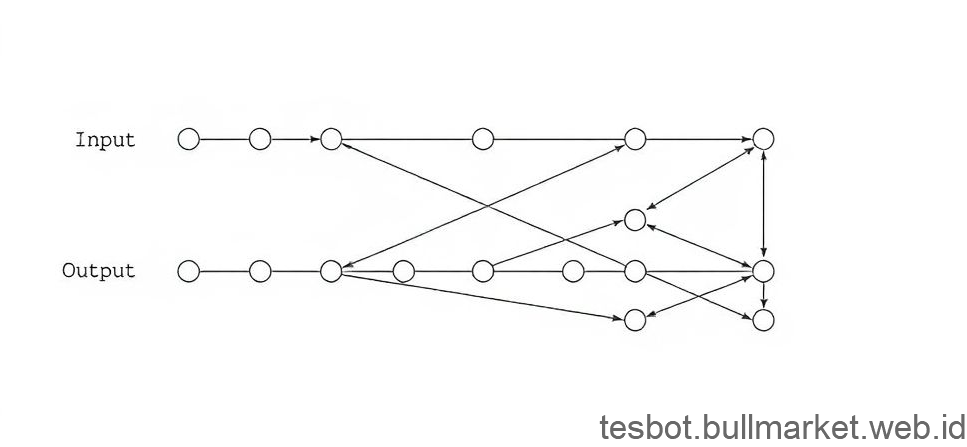

Despite the overall decline, some areas of AI progressed quietly as researchers explored new paths. Machine learning began to grow. It teaches computers to learn from data rather than follow explicitly programmed rules. Neural networks also saw renewed interest. These computing systems are loosely modeled on the brain and learn patterns from data. John Hopfield and David Rumelhart made important contributions. Hopfield introduced an influential recurrent network model, and Rumelhart helped popularize the backpropagation training algorithm. Probabilistic models also became important because they handled uncertainty and supported decisions based on likelihoods. None of these areas generated public hype, but together they laid critical groundwork.
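The core of such probabilistic reasoning is Bayes’ rule, which updates a belief in light of new evidence. The Python sketch below applies it to a single hypothetical diagnostic test. The function name and all probabilities are invented for illustration. Real probabilistic models combine many variables rather than performing one update.

```python
def bayes_posterior(prior: float, p_evidence_if_true: float,
                    p_evidence_if_false: float) -> float:
    """Return P(hypothesis | evidence) using Bayes' rule."""
    # Total probability of observing the evidence, whether or not the hypothesis holds.
    p_evidence = p_evidence_if_true * prior + p_evidence_if_false * (1.0 - prior)
    # Bayes' rule: P(H | E) = P(E | H) * P(H) / P(E).
    return p_evidence_if_true * prior / p_evidence


if __name__ == "__main__":
    # Hypothetical numbers: the condition occurs in 1% of cases, a test detects it
    # 90% of the time, and the test also fires falsely in 5% of cases without it.
    print(round(bayes_posterior(0.01, 0.90, 0.05), 3))  # → 0.154
```

The test is fairly accurate, yet the result is only about 15% because the condition is rare. Weighing evidence against base rates this way, instead of firing a fixed if-then rule, is what let probabilistic models cope with uncertainty.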

This quiet period was crucial. Researchers built stronger theoretical foundations, developed new algorithms, and improved methods for processing data. These advances were less visible and did not immediately produce breakthrough products. Still, they deepened the understanding of learning and made systems more adaptable. This steady progress eventually paid off and set the stage for a major revolution in AI.

The Deep Learning Revolution: Big Data and Computational Power Unleashed

The late 2000s and early 2010s brought a turning point for AI, as three factors converged to fuel a revolution. First, massive datasets became available. The internet generated vast amounts of images, text, and video, so computers could now train on huge collections of data.

Second, computing power increased greatly. Graphics Processing Units (GPUs) were originally designed to render video game graphics, but they became usable for general-purpose computation. GPUs perform many calculations in parallel, which made them well suited to training neural networks. This processing power allowed much larger networks to learn from far more data.

Third, deep learning algorithms were refined. Deep learning uses neural networks with many layers that learn complex patterns automatically. Geoffrey Hinton and others developed better training methods. These improvements let deep networks overcome problems that had limited earlier attempts.

These three elements led to unprecedented breakthroughs. Deep learning excelled at image recognition, where systems could identify objects in photos and recognize faces. Natural Language Processing (NLP) also improved. Computers could process human language more effectively, translating text and answering questions. These advances strengthened voice assistants such as Siri and Alexa. Strategic game playing saw major wins as well. In 2016, AlphaGo, developed by Google’s DeepMind, defeated Lee Sedol, one of the world’s strongest players, four games to one at Go, a game of enormous complexity. Achievements like these captured public attention and brought AI back into the mainstream.

The deep learning revolution transformed AI and moved it from academic labs into commercial products. Many industries adopted deep learning methods. These methods set new performance benchmarks and opened new areas of research.

AI in the Modern Era: Widespread Applications and Societal Transformation

Today, AI is everywhere. It is no longer a future concept but a daily reality. Virtual assistants such as Google Assistant and Amazon Alexa use AI to understand spoken commands and answer questions. Recommendation engines suggest products in online stores, movies on streaming services, and music to listen to. These systems learn from user preferences to offer personalized content.

Autonomous vehicles rely heavily on AI. Self-driving cars use it to perceive their surroundings and to decide how to navigate the road. In medicine, AI helps analyze X-rays and MRI scans and can spot patterns doctors might miss. This can support earlier detection and more accurate diagnoses. In finance, AI-driven algorithms detect fraud, manage investments, and make trading decisions, automating complex tasks and identifying risks and opportunities.

AI’s impact reaches many industries. It is transforming healthcare and transportation and reshaping entertainment and finance. It improves efficiency in factories, helps personalize education, and assists in scientific research. Integrated into daily routines, AI streamlines tasks, provides convenience, and creates new possibilities. As AI continues to evolve, its applications grow more diverse each year. This widespread use shows the technology’s maturity and marks its move into the mainstream.

The Future of AI: Ethical Considerations, Challenges, and Emerging Frontiers

AI’s rapid growth raises new questions, and ethical considerations are a major topic. Algorithmic bias is one concern. AI systems can reflect biases in their training data, which can lead to unfair outcomes. For example, some facial recognition systems have shown higher error rates for women and for people with darker skin. People debate how to make AI fair and transparent. Regulatory frameworks are being developed to govern AI’s use, prevent harm, and promote responsible development.

Potential job displacement is another challenge. As AI automates more tasks, job markets could change. Some jobs may become less common while new ones emerge. Society needs to prepare for these shifts, for example through training programs that help workers adapt. Discussions of a universal basic income also continue.

Researchers also pursue Artificial General Intelligence (AGI), a system with human-level intelligence that could learn any intellectual task a person can and adapt to new situations. AGI is still a distant goal with many open challenges. Beyond it lies the concept of superintelligence, an AI far smarter than humans. This idea raises questions about control and concerns about human welfare.

Emerging frontiers are also taking shape. Explainable AI (XAI) aims to make AI decisions understandable, which helps build trust and debug systems. AI is also used in scientific discovery, where it helps design new materials and aids drug discovery. Quantum computing could affect AI by unlocking new levels of processing power, which might lead to even more advanced systems. The future of AI holds great promise, but it also presents complex challenges. We must approach it with thought and care.

Conclusion

AI has come a long way. It began as an abstract idea and now powers much of the modern world. Its journey spans centuries, from ancient myths to today’s powerful algorithms. Along the way there were periods of great hope and times of disappointment. Yet research continued, and progress happened quietly. Eventually, deep learning changed everything, and AI became a pervasive, indispensable reality.

AI will continue to change. We should follow its ongoing development and engage critically with its societal effects. Consider how AI might shape your own future endeavors. AI’s path is still unfolding. What part will you play in its next chapter?