Artificial intelligence (AI) is already part of daily life, not a futuristic idea. It shapes recommendations on streaming services and runs the voice assistants on phones. A key technology behind this shift is the neural network. Neural networks sound complex. What are they? Are they small digital brains? Do you need a computer science degree to understand them? No. This article explains neural networks in plain language. We will look at what they are, how they learn, and why they work well. By the end, you will understand the technology behind modern AI.

Article Topics

- What a neural network is, using a brain comparison.

- The main parts: neurons, layers, and connections.

- How neural networks learn by trying, failing, and changing.

- Types of neural networks and their specific uses.

- Real-world uses where neural networks have a large effect.

- Common misunderstandings about them.

What Is a Neural Network?

Imagine teaching a child to find a cat. You show them many pictures of cats. You point out whiskers, pointy ears, and a tail. You correct them if they call a dog a cat. The child learns to recognize a cat over time. A neural network works like this. It is a computer model. It takes ideas from the human brain. It finds patterns and makes choices from data. It does not copy the brain directly. It learns from experience like a brain.

Brain Inspiration

Brains have billions of tiny cells called neurons. Neurons connect to form a large network. When you learn, the connections between neurons change, and many of them get stronger. This builds memories and helps you gain skills.

Nodes from Neurons

An artificial neural network loosely imitates this process. It uses artificial neurons called nodes, which are arranged into layers. Nodes share information over connections, much like biological synapses. A node fires when the signals it receives are strong enough, and it then passes its own signal to the next nodes. Think of nodes as linked decision-makers. Each node gets inputs and does a simple calculation. It then decides whether to send a signal and how strong that signal should be. By linking many of these simple decision-makers, neural networks can do complex jobs.

Basic Parts: Neurons, Layers, and Connections

To know how a neural network works, look at its main parts. Each part is simple on its own. Put together, they form powerful computational structures.

Artificial Neurons (Nodes)

An artificial neuron, or node, is the smallest work unit of a neural network. It acts like a tiny processor.

- Inputs: Each node takes one or more inputs. These come from other nodes or from the raw input data.

- Weights: Each input connection has a weight. A weight shows how important an input is. A higher weight means that input strongly affects the node’s output. The network changes these weights when it learns.

- Bias: A bias is an extra, adjustable number added to the node’s sum. One way to picture it is as an extra input that is always fixed at 1 and has its own weight. A bias lets the neuron activate even if all inputs are zero. It also shifts the point at which the neuron starts to activate. This helps the network fit the data well.

- Summation: The node multiplies each input by its weight and adds up the results. Then it adds the bias. This sum is the total signal the neuron receives.

- Activation Function: The node sends its sum through an activation function. This function decides if the neuron should fire and how strong the signal to the next layer should be. Common functions include ReLU, Sigmoid, and Tanh. Each suits different situations. They add non-linearity. Without it, a stack of layers could only compute a straight-line (linear) relationship, no matter how many layers it had. Non-linearity is what lets the network solve hard problems.

- Output: The activation function gives an output. This output then goes to nodes in the next layer.
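
The steps in the list above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular library builds a neuron. The function names (`relu`, `sigmoid`, `neuron_output`) and the input, weight, and bias values are made up for the example.

```python
import math

def relu(z):
    """Pass positive values through unchanged; clamp negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus the bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Illustrative values only (not taken from any real model).
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.5]
bias = 0.1

# The same weighted sum gives a different output under each activation.
print(neuron_output(inputs, weights, bias, relu))
print(neuron_output(inputs, weights, bias, sigmoid))
print(neuron_output(inputs, weights, bias, math.tanh))
```

Here the weighted sum is negative, so ReLU outputs zero (the neuron stays quiet), while Sigmoid and Tanh still pass on a weak signal.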

Neural Network Layers

Neural networks arrange artificial neurons into layers. Information moves through these layers. It goes from input to output. It usually moves in one direction.

- Input Layer: This is the first layer. Each node here holds one feature of the data. For example, a node might hold one pixel of an image of a handwritten digit. The input layer takes the raw data and passes it on.

- Hidden Layers: These layers sit between the input and output layers. Most neural networks have one or more hidden layers. Complex calculations happen here. Each hidden layer finds specific features or patterns in data. It takes data from the layer before it. It then sends new representations to the next layer. More hidden layers mean a deeper network. This leads to the term Deep Learning.

- Output Layer: This is the last layer. Its nodes show the network’s final guess or choice. For example, if the network classifies animal images, one node might stand for a cat and another for a dog. The node with the strongest activation shows the network’s best guess.
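
To make the output layer concrete, here is a small sketch of how raw output values can be turned into a best guess. It assumes a softmax step, which is one common choice but is not the only one. The class labels and scores are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw output-node scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["cat", "dog", "bird"]   # hypothetical classes
raw_scores = [2.0, 1.0, 0.1]      # hypothetical output-layer values

probabilities = softmax(raw_scores)

# The node with the highest probability is the network's best guess.
best_index = max(range(len(labels)), key=lambda i: probabilities[i])
print(labels[best_index], round(probabilities[best_index], 3))
```

The probabilities also show how sure the network is, which a bare yes-or-no answer would hide.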

Connections and Weights (Synapses)

The connections between neurons in different layers are sometimes called synapses, by analogy with the brain. These connections carry signals from one neuron to the next. Each connection has a weight, a number that shows the connection’s strength. Weights are central to how a network learns. The network changes them during training. If an input helps produce a right guess, its weight tends to get stronger. If an input is not helpful, its weight may get weaker. A weight can even be negative, which means the input pushes the node away from firing. A neural network learns complex patterns by adjusting weights across millions or billions of connections. This adjustment is what we call learning.

How Neural Networks Learn (Training Process)

Neural networks do not just know things. They learn through a repeating process.

Learning Analogy: Trial and Error

Think of a student studying for an exam. They study the material. They try practice questions. They check their answers against the correct ones and find their errors. Then they review those areas to improve. They repeat this until they get the answers right. A neural network learns in a similar way.

Forward Propagation: Making a Guess

Learning begins with forward propagation. Data enters the neural network. It moves from the input layer. It goes through hidden layers. It finally reaches the output layer.

- Data Input: You give the network data. This could be an image of a handwritten number. It could be text. It could be sensor readings.

- Weighted Sums and Activation: This data goes through the input layer’s nodes. Each node in later layers takes its inputs. It multiplies them by their weights. It adds the bias. Then it sends the result through its activation function.

- Output Generation: This continues layer by layer. The data reaches the output layer. The output layer then makes a guess or choice. At first, weights are random. The network’s guess is likely wrong.
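
The three steps above can be sketched as a tiny network in plain Python. This is an illustration under stated assumptions: the layer sizes, the sigmoid activation, the helper names (`make_layer`, `forward`), and the input values are all chosen for the example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def make_layer(n_inputs, n_nodes, rng):
    """Create one layer: a weight list and a bias for each node, set at random."""
    return [
        {"weights": [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)],
         "bias": rng.uniform(-1.0, 1.0)}
        for _ in range(n_nodes)
    ]

def forward(network, inputs):
    """Pass the inputs through every layer in order and return the final outputs."""
    activations = inputs
    for layer in network:
        activations = [
            sigmoid(sum(a * w for a, w in zip(activations, node["weights"])) + node["bias"])
            for node in layer
        ]
    return activations

rng = random.Random(0)  # fixed seed so the run is repeatable
network = [make_layer(4, 3, rng), make_layer(3, 2, rng)]  # 4 inputs -> 3 hidden -> 2 outputs

outputs = forward(network, [0.2, 0.9, 0.4, 0.7])
print(outputs)  # two values between 0 and 1; arbitrary, because nothing has been trained
```

Because the weights are random, the two output values carry no meaning yet. Training is what turns them into useful guesses.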

Cost Function: How Wrong Was the Guess?

Once the network makes a guess, it needs to know how good that guess was. This is the job of the cost function, also called a loss function or error function. The cost function measures the error by comparing the network’s guess with the right answer, which you supply during training. For example, suppose the network guessed 6 but the image was a 9. The cost function gives a high error. If it guessed 9 and the image was a 9, the error is low. The goal of training is to make this cost as small as possible.
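
The 6-versus-9 example can be written as a short calculation. This sketch assumes a cross-entropy cost, which is one common choice for classification. The probability values are invented for the illustration.

```python
import math

def cross_entropy(probabilities, true_label):
    """Error is small when the network gives the right answer a high probability."""
    return -math.log(probabilities[true_label])

# Hypothetical network outputs: one probability per digit 0-9.
confident_wrong = [0.01] * 10
confident_wrong[6] = 0.91          # the network mostly believes the digit is 6
confident_right = [0.01] * 10
confident_right[9] = 0.91          # the network mostly believes the digit is 9

true_label = 9
print(cross_entropy(confident_wrong, true_label))  # large error
print(cross_entropy(confident_right, true_label))  # small error
```

The wrong guess is penalized far more heavily than the right one, which gives training a clear signal about what to fix.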

Backpropagation: Fixing Mistakes

Backpropagation is the main way neural networks learn. It is an algorithm that uses the error from the cost function to work out how to adjust the network’s weights and biases. Think of it as tracing the error backward through the network. Backpropagation finds how much each weight and bias contributed to the error. If a weight contributed a lot, it gets a big change. If it had little effect, it changes little. An optimization algorithm then applies these changes. Gradient Descent is one such algorithm. Imagine standing on a mountain and wanting to reach the lowest point. Gradient Descent shows you which way is downhill. A setting called the learning rate controls how big each step is. By taking many small steps downhill, the network finds better weights over time. This makes the error small.
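
The mountain picture can be tried out on a toy problem with a single weight. This is only a sketch of the downhill idea. The bowl-shaped cost, the starting point, and the learning rate are assumptions chosen so the result is easy to check. A real network has many weights and gets its slopes from backpropagation.

```python
def cost(w):
    """A toy bowl-shaped cost with its lowest point at w = 3."""
    return (w - 3.0) ** 2

def gradient(w):
    """Slope of the cost at w (the derivative of (w - 3)^2)."""
    return 2.0 * (w - 3.0)

w = 0.0              # arbitrary starting point on the "mountain"
learning_rate = 0.1  # step size; an assumed value chosen for illustration

for step in range(50):
    w -= learning_rate * gradient(w)  # move against the slope, i.e. downhill

print(round(w, 4), round(cost(w), 8))
```

After 50 steps the weight sits very close to 3, the bottom of the bowl. A learning rate that is too large would overshoot the bottom, and one that is too small would need many more steps.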

Iteration and Optimization: Getting Better

One training step covers the whole process: forward propagation, cost calculation, and weight adjustment. Neural networks repeat this step thousands or millions of times over a large set of examples. Weights and biases improve slightly with each step, so the network’s guesses become more accurate. Training continues until the network’s error rate is low enough. At that point the network has learned patterns in the data. If training went well, it can also make right guesses on new data it has not seen before.
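
Here is the full loop on the smallest possible case: a single sigmoid neuron that learns the logical OR of two inputs. It is a hedged sketch, not a general training routine. The data, the learning rate, and the number of passes are assumptions. The error term `guess - target` is the slope you get for a sigmoid neuron with a cross-entropy cost.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Training data for logical OR: (inputs, right answer).
examples = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights = [0.0, 0.0]   # starting values; real networks usually start from small random numbers
bias = 0.0
learning_rate = 0.5    # assumed step size

for epoch in range(2000):                     # one epoch = one pass over all examples
    for inputs, target in examples:
        # Forward propagation: make a guess.
        guess = sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)
        # Error signal for a sigmoid neuron trained with a cross-entropy cost.
        error = guess - target
        # Backward step: each weight moves in proportion to its share of the error.
        weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
        bias -= learning_rate * error

predictions = [
    round(sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias))
    for inputs, _ in examples
]
print(predictions)
```

The rounded predictions match the right answers for all four examples. Nothing in the code states the OR rule directly. The neuron picks it up from repeated small corrections.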

Types of Neural Networks

All neural networks use the same basic parts, but they come in many forms. Each form works well for different tasks.

Feedforward Neural Networks (FNNs): Simplest Form

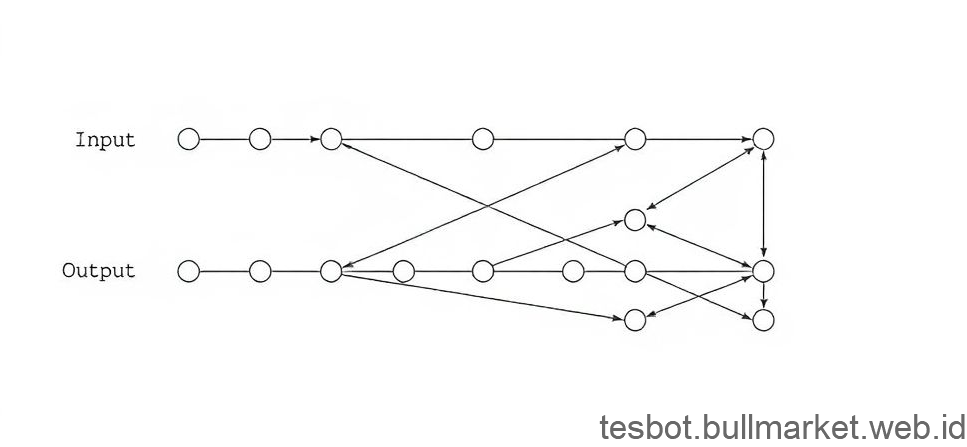

- Description: These are the most basic types. They are also the oldest. Information flows in one direction only. It goes from the input layer to hidden layers. It then goes to the output layer. There are no loops.

- Uses: They handle simple classification, such as spam detection. They also solve regression problems, such as predicting house prices. They learn fairly simple patterns. Most of the examples so far in this article describe FNNs.

Convolutional Neural Networks (CNNs): Seeing the World

- Description: CNNs are for grid-like data, such as images. They use layers that slide small filters across the input. These layers find features such as edges, textures, or shapes in an image. They are good at capturing how parts of the data relate in space.

- Uses:

  - Image Recognition: Finding objects, faces, and scenes in pictures.

  - Computer Vision: Self-driving cars use CNNs to find people and road signs. Doctors use them on medical images to help find diseases in X-rays or MRIs.

  - Video Analysis: Recognizing actions and supporting surveillance.
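
The idea of a filter finding an edge can be shown on a tiny image. This sketch is for illustration only. The helper `convolve2d`, the 4x4 image, and the hand-picked filter are assumptions. In a trained CNN the filter values are learned, not chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and record one weighted sum per position."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows) for j in range(k_cols))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# A tiny 4x4 "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)
```

The result is nonzero only in the middle column, where dark meets bright. Flat regions give zero, so the filter has located the edge. As in most deep learning code, the kernel is applied without flipping it.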

Recurrent Neural Networks (RNNs): Remembering Sequences

- Description: RNNs differ from FNNs. They have connections that loop back. This lets information persist from one step to the next. This memory helps them process sequences, where the order of information matters.

- Uses:

  - Natural Language Processing (NLP): RNNs have been used for machine translation, speech recognition, sentiment analysis, and text generation. Services such as Google Translate, Siri, and Alexa are familiar examples of these tasks, although many newer systems rely on Transformers instead.

  - Time Series Prediction: Forecasting stock prices and weather.

  - Speech Generation: Making speech that sounds human.
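
The looping connection can be sketched with a single number as the memory. This is a deliberately simplified picture. Real RNNs use vectors and learn their weights. The names `rnn_step` and `run` and the fixed weights are hypothetical.

```python
import math

def rnn_step(x, hidden, w_input, w_hidden, bias):
    """Combine the current input with the previous hidden state (the 'memory')."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)

# Hypothetical fixed weights; a real RNN would learn them.
w_input, w_hidden, bias = 0.5, 0.8, 0.0

def run(sequence):
    hidden = 0.0                      # empty memory before the first step
    for x in sequence:
        hidden = rnn_step(x, hidden, w_input, w_hidden, bias)
    return hidden

# Same values, different order: the final state differs, so order matters.
print(run([1.0, 0.0, 0.0]))
print(run([0.0, 0.0, 1.0]))
```

Both sequences contain the same numbers, yet the final memory value differs. When the 1.0 comes first, its effect fades over the next two steps. When it comes last, it is still fresh.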

Other Networks (GANs, Transformers)

The neural network field grows fast. New designs appear for harder problems.

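- Generative Adversarial Networks (GANs): These have two competing networks, a generator and a discriminator. The generator makes new data, and the discriminator tries to tell it apart from real data. Through this contest, the generator learns to make data that looks real. Examples are fake images or videos (deepfakes).

- Transformers: This is a newer design. It is very strong in NLP. It can process all parts of a sequence at once rather than one step at a time. It also captures long-range links between distant parts of the input. Models like OpenAI’s GPT series (which powers ChatGPT) and Google’s BERT use them.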
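
Transformers handle a whole sequence at once through a mechanism called attention, which the list above does not name. The sketch below shows the core calculation in plain Python. It is a simplification under stated assumptions: the token vectors are made up, and they are used directly as queries, keys, and values, whereas a real Transformer first passes them through learned projections.

```python
import math

def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """For each position, mix the values of all positions, weighted by query-key similarity."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs

# Three positions, each a 2-number vector (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Simplification: use the tokens as queries, keys, and values directly.
mixed = attention(tokens, tokens, tokens)
print(mixed)
```

Every position looks at every other position in a single step, so a link between the first and last items costs no more than a link between neighbors.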

Each network type shows the strength of the neural network idea. They are built to do well with specific data types and problems.

Why Neural Networks Matter: Real-World Uses

Neural networks are not just ideas. They drive many AI tools we use daily. They learn complex patterns from large datasets. This makes them vital in many industries.

Image Recognition and Computer Vision

Computer vision is one of the most visible uses of neural networks, especially CNNs.

- Facial Recognition: Used to unlock phones. Used in security systems. Used to find people in crowds.

- Self-Driving Cars: Neural networks read camera feeds and sensor data. They find other cars, people, lane lines, and road signs. This allows cars to drive themselves.

- Medical Imaging: They help doctors find issues such as tumors in X-rays, MRIs, and CT scans. For some tasks, they can make checks faster and more accurate than human review alone.

- Quality Control: Automated systems in factories use neural networks. They find flaws in products on assembly lines.

Natural Language Processing (NLP)

RNNs and Transformers changed how computers understand and make human language.

- Machine Translation: Services like Google Translate use neural networks. They translate text and speech between languages almost instantly.

- Chatbots and Virtual Assistants: Neural networks power tools like Siri, Alexa, and customer service chatbots. They help these tools interpret your questions and give useful answers.

- Sentiment Analysis: This checks text for emotional tone. It finds positive, negative, or neutral feelings. This helps with market research and customer feedback.

- Text Generation: They create human-like articles, stories, code, and poems. Models such as ChatGPT show this.

Predictive Analytics and Recommendation Systems

Neural networks are good at finding hidden patterns in data. They use these to make guesses.

- Financial Forecasting: Forecasting stock prices and market trends. Detecting fraudulent transactions.

- Personalized Recommendations: Neural networks power recommendation systems. Netflix, Amazon, and Spotify use them. They suggest movies, products, or music based on your past activity.

- Customer Churn Prediction: Finding customers who are likely to leave a service. This lets companies act before they go.

Healthcare and Medical Diagnosis

Neural networks are changing many parts of healthcare. Their role goes beyond image analysis.

- Drug Discovery: They make finding drug candidates faster. They predict how well drugs are likely to work.

- Disease Diagnosis: They check patient data. This includes symptoms, lab results, and genetic data. They help find diseases. Examples are diabetes, heart problems, or rare genetic disorders.

- Personalized Medicine: They help tailor treatments to each person. This uses a person’s unique genes and health details.

Other Uses

Neural networks reach beyond these examples. They touch areas like:

- Robotics: Helping robots see their surroundings, learn tasks, and interact with the world.

- Gaming: Making AI opponents smarter. Making game worlds more realistic.

- Scientific Research: Building models of complex physical systems. Analyzing astronomical data.

These uses show how powerful neural networks are. They are not just tools for machines. They are strong aids to discovery and innovation.

Common Misunderstandings About Neural Networks

Many people use neural networks without fully understanding them. This leads to inflated claims or needless fears. We will clear up some common wrong ideas.

They Are Not Conscious

The biggest wrong idea is that neural networks think like humans, or that they are conscious. Neural networks can do complex tasks. They can even learn to create art or write code. But they do not have thoughts, self-awareness, or feelings. They do not understand things the way people do. They are complex machines that find patterns and work by mathematical rules. The brain comparison helps explain their structure and how they work. It does not mean they should be treated like humans.

They Are Not Magic (Just Complex Math)

Neural networks can seem like magic. Their inner workings are not always clear. This is called the black box problem. No magic is involved. Everything they do comes from complex math. It uses statistics. It uses repeated improvements. Their smart behavior comes from millions of simple calculations working together. It does not come from some strange new awareness.

They Need Much Data and Computer Power

Simple neural networks run on regular computers. But modern deep learning models need much more. This is especially true of the models that get the best results, such as large language models and advanced image recognition systems. They require:

- Huge Datasets: They need millions or billions of examples to learn well. For many tasks, humans must label this data carefully.

- Substantial Computing Resources: Training these models needs powerful graphics processors (GPUs) or specialized AI chips such as TPUs. It uses a lot of electricity. Training often runs on cloud platforms. The cost is a big barrier for many people who want to get started.

The Black Box Problem

Complex neural networks, especially deep ones, have a problem known as their black box nature. Humans often find it hard to know why a network made a choice or how it arrived at its output. With many hidden layers and millions of connections, following the logic step by step is almost impossible. As a result, we often cannot fully explain a network’s choices. This matters most in high-stakes uses such as healthcare or self-driving cars, where knowing why a choice was made is essential. Researchers are working on ways to make neural networks more explainable. This active research area is called Explainable AI (XAI).

Knowing these points helps you see neural networks realistically. It helps you value their power without accepting extreme claims.

A Quick Guide: Neural Network Parts

This table helps you review neural network parts. It lists the key components and gives a simple comparison for each.

| Component | Description | Simple Comparison |
|---|---|---|
| Node / Artificial Neuron | The smallest work unit. It gets inputs, calculates, and gives an output. | A single brain cell; a small decision-maker. |
| Layer | A group of nodes working together. It does one stage of processing (Input, Hidden, Output). | A processing stage or department. |
| Weight | A number on a connection. It shows the strength of an input signal. The network changes it when learning. | The strength of a link or importance of information. |
| Bias | An extra setting for a neuron. It helps activate it more or less easily. This works regardless of inputs. | A baseline tendency; an initial lean. |
| Activation Function | A math rule. It decides if a neuron fires. It also sets the output signal strength. | A switch or a threshold. |
| Forward Propagation | Sending data through the network. It goes from input to output to get a guess. | Making an initial guess. |
| Cost Function | A math rule. It measures the error between the network’s guess and the right answer. | Measuring how wrong your guess was. |
| Backpropagation | A rule that uses the error. It adjusts weights and biases in the network. | Finding mistakes to correct them. |
| Training Data | Labeled examples (inputs and right outputs). These teach the network. | Practice questions with answers. |
| Epoch | One full pass of all training data through the network. This includes forward and backpropagation. | One full round of studying and testing. |

This table gives you a useful mental map for neural networks.

Conclusion

Neural networks are not mysterious or too complex to grasp. They are computer systems that take ideas from the brain. We saw how simple artificial neurons build these networks. Layers organize them. Adjustable weights connect them. Their intelligence is not built in. They learn through trial, error, and careful changes. Backpropagation and gradient descent drive this process.

Neural networks are behind many recent advances in AI. They recognize faces. They understand your voice. They help drive cars. They are changing medicine. They are strong tools that find complex patterns in large amounts of data. But remember, they are not conscious. They do not work by magic. They are complex math models. People keep making them better and use them to solve harder problems.

Understanding neural networks is no longer just for experts. It is a basic skill for anyone using modern technology. You have taken a big step. You now understand the power behind today’s artificial intelligence.

Notice how neural networks affect your daily life. Look at the recommendations you receive. Try out voice assistants. Keep learning about this changing technology. The more you explore, the more you will appreciate it.
