Neural networks are computer systems. They are named after the networks of neurons in the human brain. These systems learn from data. They find patterns. This helps them make predictions or decisions.

Think of a child learning to recognize animals. The child sees many pictures of cats. Each picture shows a cat in a different pose or color. The child’s brain learns what features make something a cat. Neural networks learn in a similar way. They process many examples. Then they find common traits.

Artificial intelligence uses neural networks. They power many tools we use daily. These systems recognize speech. They identify images. They suggest products you might like. Neural networks are key to modern AI.

Their strength comes from their structure. They have many connected parts. Each part does a small job. The parts work together. This allows them to handle complex tasks. They can solve problems that traditional, rule-based programs find hard.

A simple network might identify a handwritten number. A more complex one could help drive a car. They perform tasks without being explicitly programmed for every rule. They learn the rules from examples.

We will explore how these systems work. We will break down their parts. You will see how they learn. This guide makes neural networks easy to grasp.

Contents

- 1 How Do Neural Networks Work?

- 2 The Anatomy of a Neural Network

- 3 The Learning Process: Training Neural Networks

- 4 Types of Neural Networks Explained Simply

- 5 Where Do We See Neural Networks in Action?

- 6 The Benefits and Limitations of Neural Networks

- 7 Getting Started: Learning More About Neural Networks

- 8 The Future of Neural Networks and Artificial Intelligence

- 9 Conclusion

How Do Neural Networks Work?

A neural network has layers. Each layer contains artificial neurons. Think of these neurons as small processing units. They are like brain cells. They receive information. They process it. Then they pass it along.

Information enters the network through an input layer. Each neuron in this layer takes a piece of data. For example, a picture of a cat may enter. Each pixel’s brightness becomes a data piece. The input layer sends this data to the next layer.
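Here is a small sketch of how a picture could become input values. The 3x3 image and the helper name `to_input_values` are made up for illustration. Scaling each brightness to the 0 to 1 range is a common choice, assumed here rather than required.

```python
# A tiny 3x3 grayscale "image": each number is one pixel's brightness (0-255).
image = [
    [0, 128, 255],
    [64, 192, 32],
    [255, 0, 16],
]

def to_input_values(pixels):
    """Flatten the rows into one list and scale each value to the 0-1 range."""
    return [value / 255 for row in pixels for value in row]

# One value per pixel; each value would feed one input neuron.
inputs = to_input_values(image)
print(len(inputs))
print(inputs[:3])
```

A real photo works the same way. It just has many more pixels, so the input layer is much larger.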

Hidden layers sit between the input and output. There can be one hidden layer. There can be many. Neurons in hidden layers perform calculations. They apply weights to the incoming data. They also add a bias. These values help the network learn.

A neuron’s output depends on its calculation. An activation function then transforms the result. Loosely speaking, this function decides how strongly the neuron “fires.” It determines how much of the signal moves forward. A strong signal passes to the next layer. A weak one is reduced or blocked.

The network continues this process. Data flows from layer to layer. Each connection has a weight. The weight shows how important a connection is. The network adjusts these weights during training.

The final layer is the output layer. It gives the network’s answer. This could be “cat” or “dog.” It might be a number for a prediction. The network’s work is a series of simple math steps. These steps happen many times.

During training, the system constantly adjusts. It tries to get the correct answer. This adjustment process makes the network smarter. It is much like learning from mistakes.

The Anatomy of a Neural Network

Understanding a neural network means knowing its main parts. Each component plays a specific role. They all work together for the network’s function.

Inputs and Outputs

Every neural network starts with an input. This input is the data you feed it. For image recognition, the input might be pixels from a picture. For speech, it could be sound waves. Each piece of input data becomes a value. These values enter the first layer.

The network ends with an output. This is the result or prediction. If the network identifies animals, the output might be “cat” or “dog.” If it predicts house prices, the output is a price number. The output layer gives the final answer.
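The output layer usually produces numbers, not words. One common way to turn those numbers into an answer like “cat” is the softmax function. Softmax is an assumption here, since the text above does not name a method. The scores and labels below are invented for the example.

```python
import math

def softmax(scores):
    """Turn raw output scores into probabilities that add up to 1."""
    highest = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["cat", "dog"]      # hypothetical classes
raw_scores = [2.0, 0.5]      # hypothetical output-layer values

probabilities = softmax(raw_scores)
predicted = labels[probabilities.index(max(probabilities))]
print(predicted, probabilities)
```

The label with the highest probability becomes the network’s answer. For a price prediction, the raw output number would be used directly instead.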

Hidden Layers

Hidden layers are the network’s core. They do the heavy lifting. These layers process the input data. They find patterns within it. A network can have one hidden layer. Some have hundreds. More hidden layers mean a deeper network. Deeper networks can learn more complex patterns.

Each neuron in a hidden layer receives data. This data comes from neurons in the previous layer. Each connection has a weight. The neuron multiplies each input by the weight of its connection. It sums these weighted inputs. Then it adds a bias value.
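The calculation inside one neuron can be sketched in a few lines. The input values, weights, and bias below are arbitrary numbers chosen for illustration.

```python
def weighted_sum(inputs, weights, bias):
    """Multiply each input by its connection weight, add them up, then add the bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

inputs = [0.5, 0.2, 0.9]     # values arriving from the previous layer
weights = [0.4, -0.6, 0.1]   # one weight per incoming connection
bias = 0.05

# 0.5*0.4 + 0.2*(-0.6) + 0.9*0.1 + 0.05, which is about 0.22
z = weighted_sum(inputs, weights, bias)
print(z)
```

This single number is what the neuron hands to its activation function.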

Weights and Biases

Weights show the strength of a connection. A large weight, positive or negative, means that input strongly affects the result. A weight near zero means the input matters little. The network learns by changing these weights. Biases are extra values. They help the network shift its output. This allows for more precise learning. Weights and biases are the network’s memory. They store what it has learned.

Activation Functions

After summing weighted inputs and biases, a neuron uses an activation function. This function decides if the neuron should activate. It also shapes the neuron’s output. Common functions include ReLU, Sigmoid, and Tanh. They introduce non-linearity. This lets the network learn complex relationships. Without them, a network could only learn linear patterns.
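The three functions named above are short enough to write by hand. This is a plain-Python sketch. Libraries such as TensorFlow and PyTorch ship their own versions.

```python
import math

def relu(z):
    """Pass positive values through unchanged; replace negative values with 0."""
    return max(0.0, z)

def sigmoid(z):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squash any number into the range -1 to 1."""
    return math.tanh(z)

# Compare how each function reshapes the same neuron sums.
for z in [-2.0, 0.0, 3.0]:
    print(z, relu(z), sigmoid(z), tanh(z))
```

None of these curves is a straight line. That bend is the non-linearity that lets stacked layers learn more than linear patterns.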

Imagine a dimmer switch. The activation function is like that switch. It determines if enough signal exists to turn the light on. It then controls how brightly the light shines. This step makes the network powerful. It moves beyond simple on or off decisions.

The full system is a flow. Inputs go through weighted connections. Calculations happen in hidden layers. Activation functions decide output. This repeats until the final answer appears.

The Learning Process: Training Neural Networks

Neural networks do not know things at first. They must learn. This learning happens during a training phase. They need a lot of data for this. This data includes examples and their correct answers.

Training Data

First, we give the network training data. This data has two parts. There are input examples. There are also correct outputs. For example, images of cats are inputs. The label “cat” is the correct output. The network uses this to learn. It sees many pairs of inputs and outputs.

Forward Pass

The network processes an input. It calculates an output. This is a “forward pass.” The data moves through all layers. It generates a prediction. At first, this prediction will likely be wrong. The network is just starting to learn.
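A forward pass can be sketched as a loop over layers. The layer sizes and weight values below are arbitrary, untrained numbers, so the prediction means nothing yet. The sigmoid activation is one choice among several, assumed here for simplicity.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Compute one layer. `weights` holds one row of connection weights per neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def network_forward(inputs, layers):
    """Feed the values through each layer in order and return the last layer's output."""
    values = inputs
    for weights, biases in layers:
        values = layer_forward(values, weights, biases)
    return values

layers = [
    # hidden layer: 3 neurons, each with 2 incoming weights
    ([[0.5, -0.3], [0.8, 0.2], [-0.4, 0.9]], [0.0, 0.1, -0.1]),
    # output layer: 1 neuron with 3 incoming weights
    ([[0.3, -0.7, 0.5]], [0.05]),
]

prediction = network_forward([1.0, 0.5], layers)
print(prediction)
```

Training changes only the numbers inside `layers`. The forward pass itself stays the same.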

Error Calculation

Next, the system compares its prediction to the correct answer. This difference is the “error.” A large error means the network made a big mistake. A small error means it was closer. This error shows how well the network is doing.
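One common way to measure the error is the mean squared error. It is used here as an assumption, since other error measures exist. The predictions and targets are invented for the example.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and correct answers."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

targets = [1.0, 0.0]        # the correct answers
close_guess = [0.9, 0.2]    # a prediction that is nearly right
poor_guess = [0.1, 0.8]     # a prediction that is mostly wrong

small_error = mean_squared_error(close_guess, targets)
large_error = mean_squared_error(poor_guess, targets)
print(small_error, large_error)
```

Squaring keeps every difference positive. It also punishes big mistakes more than small ones.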

Backpropagation

Now, the network adjusts. It sends the error backwards through its layers. This is “backpropagation.” The process works out how much each weight and bias contributed to the error. The network uses this information to adjust them. Weights that caused more error change more. This process helps the network correct itself.
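Backpropagation can be sketched on the smallest possible network: one hidden neuron feeding one output neuron. The sigmoid activation, the squared error, and all starting values are assumptions made for this example. The function returns how much each weight and bias contributed to the error.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    """Forward pass: input -> hidden neuron -> output neuron."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = sigmoid(w2 * h + b2)
    return h, y

def loss(x, target, params):
    """Squared error between the prediction and the correct answer."""
    _, y = forward(x, params)
    return (y - target) ** 2

def backward(x, target, params):
    """Send the error backwards and return one gradient per parameter."""
    w1, b1, w2, b2 = params
    h, y = forward(x, params)
    # Error signal at the output neuron (chain rule through the sigmoid).
    delta_out = 2 * (y - target) * y * (1 - y)
    grad_w2 = delta_out * h
    grad_b2 = delta_out
    # Pass the signal back through w2 to the hidden neuron.
    delta_hidden = delta_out * w2 * h * (1 - h)
    grad_w1 = delta_hidden * x
    grad_b1 = delta_hidden
    return [grad_w1, grad_b1, grad_w2, grad_b2]

params = [0.5, -0.2, 0.8, 0.1]   # w1, b1, w2, b2 (arbitrary starting values)
grads = backward(1.5, 1.0, params)
print(grads)
```

A larger gradient means that parameter had more influence on the error. Real networks repeat the same chain-rule step across many neurons and layers.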

Optimization

The adjustments happen in small steps. This is part of “optimization.” The goal is to reduce the error over time. The network runs through the training data many times. Each time, it refines its weights and biases. It slowly gets better at its task. It makes fewer errors. The network aims for the smallest possible error.

This cycle repeats. The network sees data. It predicts. It measures error. It adjusts. It does this until it performs well. It becomes very good at its job. It can then make accurate predictions on new data. This new data was not part of its training.
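The whole cycle fits in a short loop. This sketch trains a single neuron to learn the logical OR of two inputs. The task, the learning rate, the number of passes, and the squared-error measure are all assumptions picked to keep the example tiny.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(inputs, weights, bias):
    """Forward pass for one neuron."""
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)

def mean_error(data, weights, bias):
    """Average squared error over all training examples."""
    return sum((predict(x, weights, bias) - t) ** 2 for x, t in data) / len(data)

def train(data, epochs=2000, learning_rate=0.5):
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):                    # run through the data many times
        for inputs, target in data:
            output = predict(inputs, weights, bias)                  # predict
            delta = 2 * (output - target) * output * (1 - output)    # measure error
            # adjust: take a small step against the error signal
            weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias

# Input pairs and their correct answers (logical OR).
training_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

error_before = mean_error(training_data, [0.0, 0.0], 0.0)
weights, bias = train(training_data)
error_after = mean_error(training_data, weights, bias)
print(error_before, error_after)
```

This toy task has only four possible inputs, so there is no new data to test on. Real projects hold some examples back to check the trained network.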

Training can take a long time. It needs powerful computers. But the results are often worth it. A trained network can solve complex problems fast.

Types of Neural Networks Explained Simply

Many kinds of neural networks exist. Each type excels at different tasks. Here are some basic types.

Feedforward Neural Networks (FFNNs)

These are the simplest networks. Data moves in one direction. It goes from input to output. It never loops back. FFNNs are good for basic tasks. They can classify simple data. They recognize patterns. They work well for problems where inputs lead directly to outputs. They are often the first type someone learns about.

Convolutional Neural Networks (CNNs)

CNNs are designed for image tasks. They see images as grids of pixels. They use “convolutional layers.” These layers scan small parts of an image. They look for features. These features include edges or shapes. They then combine these features. This helps the network recognize objects. CNNs power facial recognition systems. They also help self-driving cars “see.”

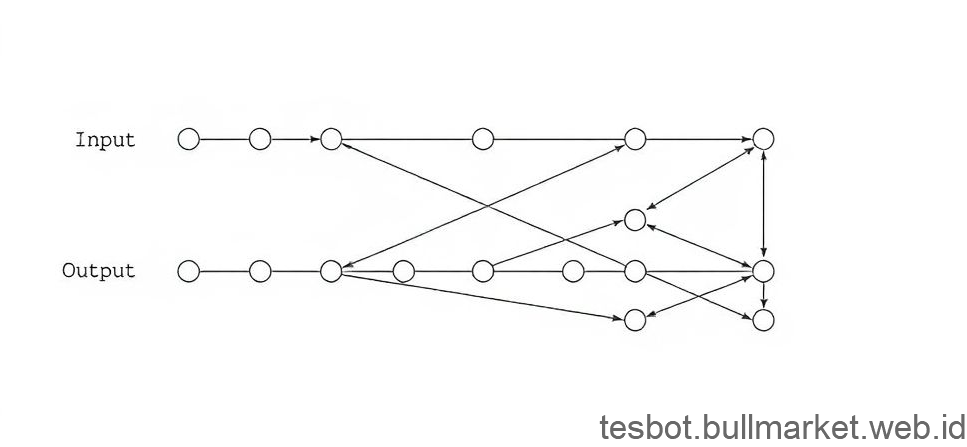

The diagram above shows the data flow. An input image enters. It passes through multiple layers. Each layer extracts more complex features. The final layer identifies the object. This is a basic illustration of how a CNN works with visual information.
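The scanning step of a convolutional layer can be sketched with plain lists. The image and the edge-detecting filter below are hand-made for illustration. A real CNN learns its filter values during training.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image and record one sum per position."""
    k = len(kernel)
    out_height = len(image) - k + 1
    out_width = len(image[0]) - k + 1
    output = []
    for i in range(out_height):
        row = []
        for j in range(out_width):
            total = 0
            for di in range(k):
                for dj in range(k):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Dark on the left (0), bright on the right (1).
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

# A filter that responds where brightness rises from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 3, 3], [0, 3, 3]]
```

The zeros mark the flat dark area. The threes mark the places where the filter overlaps the dark-to-bright edge.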

Recurrent Neural Networks (RNNs)

RNNs handle sequences of data. This includes text, speech, and time series. They have a memory component. They use information from previous steps. This helps them understand context. For example, in a sentence, the meaning of a word depends on past words. RNNs consider this. They are useful for language translation. They also help with speech recognition. They predict the next word in a sentence.
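The memory of an RNN can be sketched with a single number that is carried from step to step. The weights below are arbitrary, and the tanh activation is an assumed choice. Real RNNs use many such values and learn their weights.

```python
import math

def rnn_step(x, hidden, w_input, w_hidden, bias):
    """Combine the current input with the memory from the previous step."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)

def run_rnn(sequence, w_input=0.5, w_hidden=0.8, bias=0.0):
    """Process a sequence one item at a time, carrying the hidden state along."""
    hidden = 0.0
    states = []
    for x in sequence:
        hidden = rnn_step(x, hidden, w_input, w_hidden, bias)
        states.append(hidden)
    return states

# The two sequences differ only in their first item.
states_with_signal = run_rnn([1.0, 0.0, 0.0])
states_without = run_rnn([0.0, 0.0, 0.0])
print(states_with_signal)
print(states_without)
```

The first sequence still has a non-zero state at the last step. The early input leaves a trace, which is how the network keeps context.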

Other Types

Many other networks exist. Long Short-Term Memory networks (LSTMs) are an RNN variation. They are better at remembering information across long sequences. Generative Adversarial Networks (GANs) create new data. They can make realistic images or sounds. Each type has a unique design. This design helps it solve specific kinds of problems. The choice of network depends on the task at hand.

The field grows quickly. New network types appear often. They solve more problems. They get better results. But the core ideas remain similar across all types. They all involve learning from data. They adjust weights and biases. They try to find patterns.

Where Do We See Neural Networks in Action?

Neural networks are all around us. They power many tools and services. You use them even if you do not know it. They have changed many parts of our lives.

Image Recognition

Your smartphone uses neural networks. They unlock your phone with your face. They organize your photos by people or places. Security cameras use them. They identify suspicious activity. This technology helps us categorize visual data fast.

Speech Recognition and Voice Assistants

Voice assistants like Siri or Alexa rely on them. They turn your spoken words into text. They interpret your commands. Call centers use them to route calls. These networks help computers understand human language. This has made interactions easier.

Natural Language Processing (NLP)

Neural networks help computers understand text. They power spam filters. They translate languages. They summarize long documents. They also write content. These systems allow computers to read and write. They process vast amounts of human language data.

Recommender Systems

Online stores use them. Streaming services also use them. They suggest products you might buy. They recommend movies you might watch. They learn your preferences from your past actions. This creates a personalized experience. It helps you discover new items.

Healthcare

Doctors use neural networks. They help detect diseases early. They analyze medical images like X-rays or MRIs. They predict patient risks. They can also help discover new drugs. This technology helps save lives and improve care.

Autonomous Vehicles

Self-driving cars use neural networks. They process sensor data. They see roads, signs, and other cars. They make driving decisions. They help cars navigate safely. This technology aims to make transport safer and more efficient.

Financial Services

Banks use them to detect fraud. They analyze transactions. They spot unusual patterns. This helps protect money. They also predict market trends. This helps people make better financial decisions. Neural networks are vital for security and planning.

These examples show their wide use. Neural networks handle complex data. They make smart predictions. They change how we interact with technology. They make our daily lives easier. They perform tasks quickly and accurately. This helps businesses and people.

The Benefits and Limitations of Neural Networks

Neural networks bring many advantages. They also have certain limits. Knowing both helps us use them wisely.

Benefits

Neural networks learn complex patterns. They can find relationships in data. These patterns are often too subtle for humans to see. They do not need explicit programming for every rule. They adapt to new data. This makes them versatile. They can handle many different tasks. They often cope well with noisy or incomplete data. They can still give good results.

They offer high accuracy for many problems. Image recognition and speech recognition are good examples. They can process large amounts of data quickly. This makes them ideal for big data challenges. They usually improve as they get more data. This makes them more effective over time. They can work across various fields. They help solve problems from science to business.

Limitations

Neural networks need vast amounts of data. Training them requires many examples. Small datasets may not produce good results. This data must also be high quality. Errors in data lead to errors in learning.

They need significant computing power. Training large networks can take days or weeks. This uses a lot of energy. It can be expensive. Running them after training also needs good hardware.

One major limit is their “black box” nature. It is often hard to understand why a network makes a certain decision. We know the input and output. The internal steps are complex. This makes it hard to trust them in critical areas. For example, in healthcare, we want to know the “why.” This lack of transparency is a challenge.

They can also be biased. If the training data contains biases, the network learns them. This can lead to unfair or incorrect results. For example, a network trained on mostly male faces may perform worse on female faces. Careful data curation is important.

Finally, they are not a magic solution. They solve specific problems. They do not understand the world like humans do. They lack common sense reasoning. They excel at pattern matching. They do not think like us. We must use them for appropriate tasks. We must also manage our expectations. They are powerful tools, but they have boundaries.

Getting Started: Learning More About Neural Networks

You have learned the basics of neural networks. You know how they work. You understand their parts. You have seen their uses. This knowledge helps you understand AI better. Many resources exist if you want to learn more.

Online courses provide structured learning. Websites like Coursera and edX offer classes. These courses often include exercises. They let you build simple networks. This practical experience helps solidify your understanding.

Books on artificial intelligence are useful. Look for books on machine learning or deep learning. Start with beginner-friendly titles. They explain concepts with more detail. They also offer deeper insights into theory. Reading different authors can give varied perspectives.

Simple programming projects help. Python is a common language for AI. Libraries like TensorFlow and PyTorch exist. They make building neural networks easier. Try to create a small network. Make it identify numbers or simple images. Hands-on work helps deepen understanding.

Join online communities. Forums and groups discuss AI and neural networks. You can ask questions there. You can learn from others’ experiences. These groups offer support and new ideas. They help keep you updated on new findings.

Follow reputable AI news sources. This field changes fast. New discoveries appear often. Reading articles keeps your knowledge current. Look for trusted publications. They provide accurate information.

Start with one area that interests you. Is it image recognition? Or language processing? Focus on that area first. Then expand your knowledge. Learning is a process. It takes time and effort. But the rewards are great. You will understand the AI world better.

The Future of Neural Networks and Artificial Intelligence

Neural networks drive the future of artificial intelligence. Their capabilities grow rapidly. We will see them in more places. They will solve harder problems. They will affect nearly every industry.

Networks will become even more powerful. Researchers build larger models. These models handle more data. They learn more complex patterns. This leads to higher accuracy. They will perform tasks that seem impossible today.

We may see more specialized networks. Some will focus on very specific medical diagnoses. Others will predict climate changes. They will become more tailored to individual problems. This specialization will make them more effective in narrow fields.

Efforts will focus on making them fairer. Researchers work on reducing bias in training data. They develop methods to explain network decisions. This transparency is important. It builds trust in AI systems. It helps us understand why a decision was made.

Access to these tools will grow. More people will use neural networks. Simple tools will allow non-experts to build AI applications. This will spread AI benefits widely. It will democratize complex technology. Many people will create new uses for AI.

Neural networks will also work with other technologies. They will combine with robotics. This creates smarter robots. They will pair with quantum computing. This could unlock new levels of processing power. The combinations will open new frontiers.

Ethical discussions will continue. We must consider how to use these powerful tools responsibly. We must ensure fairness. We must protect privacy. These conversations shape the future. They guide how AI serves society.

The field of AI is young. Neural networks are a core part of its growth. They promise exciting changes. They will help us solve global challenges. They will make our world smarter. They will bring about new discoveries. This journey has just begun.

Conclusion

Neural networks are powerful tools. They are loosely modeled on how the brain learns. They process data in layers. They adjust connections through training. This lets them find patterns and make smart decisions. They power many daily technologies. They recognize faces and understand speech. They help with medicine and self-driving cars.

They offer high accuracy. They learn complex relationships. They do need much data. They also need powerful computers. Understanding their internal steps is hard. They can reflect biases from their training data. We must address these challenges. We must use them wisely. We must develop them responsibly.

Your understanding of these systems has grown. You can now recognize their role. You see their impact on AI. Observe how they shape technology around you. Learn more about specific applications. Explore the ongoing research. Neural networks are a core part of our future.