AI is everywhere today. Terms like artificial intelligence, machine learning, and deep learning appear in headlines, products, and everyday conversation. What powers many recent AI breakthroughs? Very often, the answer is neural networks.

Neural networks can sound complex, like something from a sci-fi movie. But their core idea is simple, and it is inspired by the human brain. This article explains neural networks by breaking down their basic ideas.

You do not need to be a data scientist or a mathematician to understand these powerful systems. Are you a curious tech enthusiast? A student wondering about AI? Someone who wants to understand the technology shaping our future? This guide is for you.

Understanding neural networks gives you more than technical knowledge. It shows how intelligent systems learn and how they make decisions. This guide covers:

- What neural networks are and how they relate to biology.

- The basic building block of a neural network: the neuron.

- How these networks learn.

- The main types of neural networks and the tasks each does best.

- Where neural networks are used in the real world, and their impact.

- The challenges and limitations of this technology.

By the end, you will understand neural networks better and be able to follow the AI advances that are changing our world.


What Are Neural Networks? The Brain Idea

Imagine teaching a child to recognize a cat. You would not give them a math formula for “cat-ness.” Instead, you would show them many pictures of cats and point out features like whiskers and pointed ears. You would also show them pictures of dogs, birds, or cars. Over time, the child learns to identify cats, even ones they have never seen before.

Neural networks learn in a similar, though much simpler, computer-based way. They are computational models loosely inspired by the brain’s network of connected neurons. Given large amounts of data, they find patterns, classify information, and make predictions. Instead of following step-by-step rules written by a programmer, they learn the rules themselves from examples.

Biological Idea: The Neuron and Synapses

To understand neural networks, it helps to look at their biological basis. Our brains contain billions of small cells called neurons. Each neuron receives signals from other neurons through connections called synapses. If the combined signals are strong enough, the neuron fires and sends its own signal onward. This vast web of firing neurons lets us think, learn, remember, and perceive the world.

Artificial neural networks loosely copy this structure. Instead of biological neurons, they use artificial neurons, often called nodes or units. The nodes are linked by connections, and each connection has a weight that represents its strength.

The Basic Part: The Artificial Neuron (Node)

An artificial neuron is a mathematical function. It takes inputs, performs a simple calculation, and produces an output. Its parts are:

- Inputs: The numbers fed into the neuron. In the cat example, these could be the pixel values of an image.

- Weights: Each input connection has a weight that sets how much that input matters. The larger a weight’s magnitude, the more influence its input has on the neuron’s output.

- Summation: The neuron multiplies each input by its weight and adds up the results. It then adds a bias term, which shifts the sum up or down regardless of the inputs.

- Activation Function: The sum then passes through an activation function. This function determines how strongly the neuron activates and what it passes on to the next layer. It adds non-linearity, which lets the network learn complex patterns. Without activation functions, stacking layers gains nothing: the whole network can only represent linear relationships. Common activation functions include ReLU, Sigmoid, and Tanh.

- Output: The result of the activation function is the neuron’s output. It becomes an input for neurons in the next layer.
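The steps above fit in a few lines of Python. This is a minimal illustration, not how real frameworks implement neurons. The function names (`neuron`, `relu`, `sigmoid`, `tanh`) and the example weights and pixel values are made up for illustration.

```python
import math

# The three activation functions named in the text.
def relu(z: float) -> float:
    return max(0.0, z)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z: float) -> float:
    return math.tanh(z)

def neuron(inputs, weights, bias, activation=relu):
    """Multiply each input by its weight, sum, add the bias, then apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Hypothetical example: three pixel intensities scaled to the range 0..1.
pixels = [0.2, 0.8, 0.5]
weights = [0.4, -0.6, 0.9]
bias = 0.1
for act in (relu, sigmoid, tanh):
    print(act.__name__, neuron(pixels, weights, bias, act))
```

Swapping the activation function changes only the last step. The weighted sum and bias stay the same.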

This simple process repeats across many neurons and layers, allowing neural networks to build up complex representations of data.
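The classic XOR problem shows why the non-linear activation matters. The goal is to output 1 when exactly one of two inputs is 1. No single weighted sum followed by a threshold can separate these cases, but a hidden layer of two ReLU neurons can. The weights below are picked by hand for illustration rather than learned, and `xor_network` is a hypothetical name.

```python
def relu(z: float) -> float:
    return max(0.0, z)

def xor_network(x1: float, x2: float) -> float:
    # Hidden layer: two ReLU neurons with hand-picked (not trained) weights and biases.
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output neuron: a plain weighted sum of the hidden activations.
    return 1.0 * h1 - 2.0 * h2

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

Try deleting the two `relu` calls. The output then simplifies to 2 - x1 - x2, a purely linear function, and the network returns 2, 1, 1, 0 instead of 0, 1, 1, 0.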

How Do Neural Networks Learn? The Training Process

Learning is the defining feature of neural networks. Traditional programs follow rules written by people; neural networks learn from data. This learning process is iterative and has several steps.

Layers of Learning: Input, Hidden, and Output

Neural networks have layers:

- Input Layer: Receives the raw data, such as image pixels or words. Each node represents one feature of the data.

- Hidden Layers: Sit between the input and output layers and perform most of the computation. They learn useful features from the input data. A network with many hidden layers is called a deep neural network, which is where the term deep learning comes from.

- Output Layer: Produces the final result. If the network classifies images, the output layer typically has one node per class, and the node with the highest value is the prediction. If the network predicts a single number, such as a price, it has one output node.

Forward Propagation: Making a Prediction

Learning starts with forward propagation. Data enters the input layer and moves through the hidden layers, with each neuron performing its calculation, until the output layer produces a result. This result is the network’s prediction. Because weights and biases usually start with random values, early predictions are mostly wrong. That is where learning comes in.
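Here is a sketch of forward propagation through a tiny network. It assumes 4 input features, one hidden layer of 5 ReLU neurons, and 3 output nodes (one per class). The layer sizes, the uniform random initialization, and the helper names `dense_layer` and `random_layer` are illustrative assumptions. With untrained random weights, the prediction is essentially arbitrary.

```python
import random

def relu(z: float) -> float:
    return max(0.0, z)

def dense_layer(inputs, weights, biases, activation=None):
    """Fully connected layer: row k of `weights` holds the weights of neuron k."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs

def random_layer(n_inputs, n_neurons, rng):
    """Random starting weights and biases (a simple, assumed initialization)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_neurons)]
    biases = [rng.uniform(-0.5, 0.5) for _ in range(n_neurons)]
    return weights, biases

rng = random.Random(0)  # fixed seed so runs are reproducible
w_hidden, b_hidden = random_layer(4, 5, rng)  # input (4) -> hidden (5)
w_out, b_out = random_layer(5, 3, rng)        # hidden (5) -> output (3 classes)

x = [0.1, 0.7, 0.3, 0.9]                           # hypothetical input features
hidden = dense_layer(x, w_hidden, b_hidden, relu)  # forward through the hidden layer
scores = dense_layer(hidden, w_out, b_out)         # raw output-layer values
prediction = max(range(len(scores)), key=lambda k: scores[k])  # highest node wins
print("scores:", scores)
print("predicted class:", prediction)
```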

Backpropagation: Learning from Mistakes (The Core of Learning)

After making a prediction, the network needs to check its work. Learning happens through backpropagation.

- Loss Function (Error Measurement): First, the network compares its prediction with the correct answer. A loss function measures the error: a high loss means a bad prediction, and a low loss means a good one. Mean Squared Error and Cross-Entropy are common loss functions.

- Gradient Descent (Weight Adjustment): The network’s goal is to make the loss as small as possible. Backpropagation works backward from the output layer and calculates how much each weight and bias contributed to the error (its gradient). An optimization algorithm, typically gradient descent, then nudges each weight and bias in the direction that reduces the error. Think of a golfer who misses a putt and adjusts their aim for the next one: the network adjusts its weights the same way, step by step, to lower the error.

- Iteration: This process repeats thousands or millions of times, usually on small batches of training data. One full pass through the entire training dataset is called an epoch. With each pass, the network’s weights and biases improve and its predictions become more accurate.
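Both loss functions named above are short formulas. Here is a plain-Python sketch. The example numbers are hypothetical, and real libraries add batching and more numerical safeguards than the small epsilon used here.

```python
import math

def mean_squared_error(predictions, targets):
    """Average of squared differences; a common choice for regression."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def cross_entropy(probabilities, true_class):
    """Negative log of the probability the model assigned to the correct class."""
    eps = 1e-12  # avoids log(0) if the correct class got probability exactly 0
    return -math.log(max(probabilities[true_class], eps))

# Regression: predicted vs. actual house prices (hypothetical, in $100k units).
print(mean_squared_error([2.5, 3.0], [3.0, 3.0]))  # 0.125

# Classification over [cat, dog, bird]; the true class is "cat" (index 0).
print(cross_entropy([0.9, 0.05, 0.05], 0))  # confident and right: low loss
print(cross_entropy([0.1, 0.8, 0.1], 0))    # confident and wrong: high loss
```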

By repeatedly adjusting its weights, the network learns to find complex patterns in data, extract useful features, and make decisions without being explicitly programmed for them.
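The full loop (forward pass, loss, backpropagation, gradient descent) can be shown end to end on the smallest possible "network": one neuron with no activation, fitting a straight line. This sketch makes several simplifying assumptions:

- The training data is synthetic, generated from y = 2x + 1.
- Every epoch uses the whole dataset as a single batch, so one epoch equals one update.
- The learning rate of 0.05 and the 500 epochs were chosen by hand.
- The function name `train_linear_neuron` is hypothetical.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=500):
    """Fit y = w*x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # zeros here for simplicity; real networks usually start random
    n = len(xs)
    for epoch in range(epochs):
        # Forward pass: a prediction for every training example.
        preds = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        # Backward pass: the chain rule gives dLoss/dw and dLoss/db.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Gradient descent: step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        if epoch % 100 == 0:
            print(f"epoch {epoch:3d}  loss {loss:.6f}")
    return w, b

# Hypothetical training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = train_linear_neuron(xs, ys)
print(round(w, 3), round(b, 3))
```

The printed loss shrinks epoch by epoch as `w` and `b` approach 2 and 1. A real network runs the same loop over millions of weights, with backpropagation computing every gradient automatically.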

Different Types of Neural Networks

Neurons, layers, and backpropagation are the core ideas, but neural networks come in various architectures. Each works best for certain kinds of data and tasks, and knowing them shows the range of the technology.

Feedforward Neural Networks (FNNs)

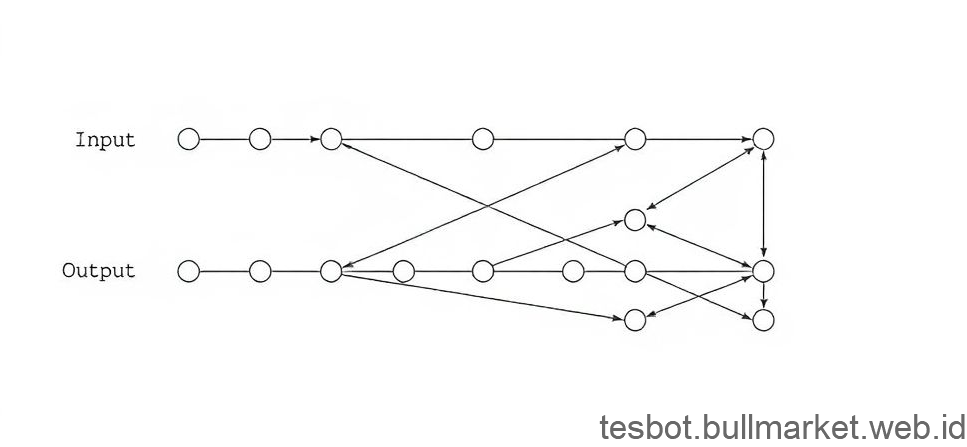

Feedforward neural networks are the simplest type. Information flows in one direction only: from the input layer, through the hidden layers, to the output layer, with no loops. A feedforward network built from fully connected layers is often called a multilayer perceptron (MLP).

- Strengths: They are simple to build and use. They work well on structured, tabular data such as spreadsheets, for classification and regression tasks where the order of the inputs does not matter.

- Applications: Basic image classification (such as recognizing handwritten digits), spam detection, and house price prediction.

Convolutional Neural Networks (CNNs)

CNNs are specialized for grid-like data, such as images (2D grids of pixels) or time series (1D sequences). Their name comes from a mathematical operation called convolution.

- Key Idea: CNNs use small filters that slide over the input data to detect features such as edges or shapes, wherever they appear in an image. Later layers combine these simple features into larger, more complex patterns. CNNs also use pooling layers, which shrink the data. This makes the network faster and more robust to small changes in the input.

- Strengths: They process images and video well, find patterns in spatial data, and tolerate small shifts or distortions.

- Applications: Image recognition (including faces and objects), medical image analysis, self-driving cars, and content moderation.
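The filter-and-pool idea can be sketched in plain Python. Here a hand-made vertical-edge filter slides over a tiny synthetic image. In a real CNN the filter values are learned during training, and libraries use much faster implementations. Like most deep learning libraries, this sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """'Valid' 2D convolution as used in CNNs (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Slide the filter: multiply the overlapping patch element-wise and sum.
            total = sum(image[i + di][j + dj] * kernel[di][dj]
                        for di in range(kh) for dj in range(kw))
            row.append(total)
        output.append(row)
    return output

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
         for j in range(0, len(feature_map[0]) - size + 1, size)]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# A tiny 5x6 synthetic "image": dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
# A hand-made vertical-edge filter; a real CNN would learn these values.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]
features = convolve2d(image, vertical_edge)
print(features)
print(max_pool2d(features))
```

The feature map responds strongly (value 3) only where dark pixels meet bright ones. Max pooling then shrinks the map while keeping those strong responses.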

Recurrent Neural Networks (RNNs)

RNNs process data in sequence, where the order of information matters. They have a form of memory: the hidden layer’s output at one step is fed back in as input at the next step, so past information affects the current output.

- Key Idea: RNNs use loops to retain information. Basic RNNs suffer from vanishing and exploding gradients on long sequences, which makes long-range patterns hard to learn. Variants such as LSTMs (Long Short-Term Memory networks) and GRUs (Gated Recurrent Units) ease these problems and remember long-term dependencies better.

- Strengths: They work well on sequences where context is key, such as natural language or time series.

- Applications: NLP tasks such as machine translation, speech recognition, sentiment analysis, and text generation; stock price forecasting; and video analysis.
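A single recurrent unit can be sketched in a few lines. The weights `w_x`, `w_h`, and `b` are arbitrary values chosen for illustration. A real RNN learns them and works with vectors and matrices rather than single numbers. Feeding the same numbers in two different orders shows that the final state depends on order.

```python
import math

def rnn_forward(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Run a one-unit vanilla RNN over a sequence and return every hidden state."""
    h = 0.0  # the network's "memory" before it has seen anything
    states = []
    for x in sequence:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

forward = rnn_forward([1.0, 0.0, -1.0])
backward = rnn_forward([-1.0, 0.0, 1.0])
print(forward[-1], backward[-1])  # same inputs, different order, different final state
```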

Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow and colleagues in 2014, are an intriguing type of neural network. They consist of two competing networks: a Generator and a Discriminator.

- Generator: Creates new data that resembles real data, for example, images of faces that do not exist.

- Discriminator: Acts as a critic. It tries to tell real data apart from the fake data produced by the Generator.

- The Game: The two networks train together in a zero-sum game. The Generator tries to fool the Discriminator, and the Discriminator tries to spot the fakes. As both improve, the Generator learns to produce increasingly convincing data.

- Strengths: They create realistic data, including images, audio, or text, that can be hard to distinguish from real data.

- Applications: Generating realistic human faces, creating art, increasing image resolution, generating synthetic data for data augmentation, and creating deepfakes.
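Goodfellow and colleagues framed this game as a single minimax objective, shown here as in their 2014 paper. The symbols are:

- $D(x)$: the Discriminator’s estimated probability that $x$ is real.
- $G(z)$: the sample the Generator produces from random noise $z$.
- $p_{\text{data}}$: the distribution of real data.
- $p_z$: the noise distribution.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The Discriminator pushes $V$ up by scoring real data near 1 and fakes near 0. The Generator pushes it down by making $D(G(z))$ close to 1. The same paper notes that, in practice, the Generator is often trained to maximize $\log D(G(z))$ instead, because that version gives stronger gradients early in training.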

Transformers

Transformers were introduced in 2017 in the paper “Attention Is All You Need.” They transformed NLP and are now used in computer vision as well. They address two weaknesses of RNNs. First, RNNs process sequences one step at a time, which makes their computation hard to parallelize. Second, they struggle with very long sequences.

- Key Idea: Transformers use self-attention, which lets the network weigh the importance of every part of the input when processing each element. The model can focus on the most relevant words anywhere in a sentence, so it captures long-range relationships well. Transformers also process whole sequences at once, which makes training on large datasets much faster.

- Strengths: They handle long sequences well, are highly parallelizable, and capture context and relationships in data effectively. They set new performance records on many NLP tasks.

- Applications: Machine translation (such as Google Translate), text summarization, question answering, and chatbots. They also power large language models such as GPT-3/4, Bard, and LLaMA.
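Scaled dot-product self-attention, the core operation described above, can be sketched in plain Python. To keep it short, the sketch reuses each token vector as its own query, key, and value. A real Transformer first multiplies tokens by learned weight matrices, uses many attention heads, and adds positional information. The token vectors are made up.

```python
import math

def softmax(values):
    """Turn scores into positive weights that sum to 1."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a sequence of vectors (lists of floats)."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this position against every position, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three hypothetical 2-d token vectors, reused as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of weights sums to 1 and shows how much one token attends to every token in the sequence. Because each row is computed independently of the others, all positions can be processed in parallel. This is the property that speeds up training compared with RNNs.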

These architectures show how quickly the field has progressed, and they continue to push the limits of AI.

Where Do We See Neural Networks in Action? (Real-World Uses)

Neural networks are not just theoretical ideas. They power many everyday technologies, often without our noticing. Because they learn from large amounts of data, they can solve hard problems across many industries.

Image Recognition and Computer Vision

Computer vision is one of the biggest success stories for neural networks, and CNNs are central to it.

- Facial Recognition: Unlocking your phone with your face, tagging friends in photos, and security checks at airports.

- Object Detection: Locating and identifying objects in images or video. Self-driving cars use it to see and avoid pedestrians, other vehicles, and traffic signs.

- Medical Imaging: Analyzing X-rays, MRIs, and CT scans to help detect diseases such as cancer, sometimes spotting problems a human eye might miss.

- Retail and Manufacturing: Analyzing customer behavior in stores, managing inventory, and checking product quality on factory lines.

Natural Language Processing (NLP)

Neural networks have changed how computers process human language. RNNs and Transformers are especially important here.

- Machine Translation: Tools such as Google Translate enable near-instant communication across languages.

- Speech Recognition: Assistants such as Siri, Alexa, and Google Assistant convert spoken words into text and commands.

- Sentiment Analysis: Determining the emotional tone of text. Businesses use it to gauge public opinion on social media.

- Text Generation: Autocomplete and predictive text on your phone suggest the next word, while large language models write articles, stories, and code.

Recommender Systems

Have you ever wondered how Netflix suggests movies, or how Amazon knows which products to recommend? Neural networks often power these systems.

- Personalized Content: The system analyzes your past viewing and purchases and recommends new content or products that match your tastes, improving the user experience.

- Music Streaming: Services suggest new artists or playlists based on your listening history.

Healthcare

Beyond imaging, neural networks have many other uses in healthcare.

- Drug Discovery: They help identify promising drug compounds faster and predict how effective they might be.

- Disease Prediction: They analyze patient data, such as symptoms and medical history, to predict disease risk.

- Personalized Medicine: They help tailor treatment plans to a person’s unique biology.

Autonomous Vehicles

Self-driving cars rely on neural networks to perceive their surroundings, make decisions, and navigate safely.

- Perception: Using cameras and other sensors, they recognize traffic signs, lane markings, other cars, pedestrians, and obstacles.

- Path Planning: They predict how other road users will move and plan safe routes.

- Decision Making: Using real-time data, they decide when to accelerate, brake, turn, or change lanes.

Financial Forecasting

Neural networks can find complex patterns in financial data that human analysts might miss.

- Stock Market Prediction: They attempt to forecast stock prices from historical data and market trends.

- Fraud Detection: They flag unusual transaction patterns to catch credit card or insurance fraud.

- Algorithmic Trading: They automate trading decisions based on market analysis.

More Uses

The list of uses keeps growing:

- Gaming: They create more realistic non-player characters (NPCs) and improve game environments.

- Robotics: They help robots learn new skills and adapt to unfamiliar environments.

- Environmental Monitoring: They analyze satellite images to track deforestation and ice melt, and they help predict natural disasters.

- Creative Arts: They compose music, generate images in different artistic styles, and write poetry.

These examples show how deeply neural networks are woven into daily life.

Building Blocks of a Neural Network

Let’s summarize the components of a neural network and how they work together to let it learn.

| Component | Description | Role in the Network |
|---|---|---|
| Neuron / Node | The basic processing unit, loosely modeled on a biological neuron. It takes inputs, computes a weighted sum, and produces an output. | Processes information; the network’s basic building block. |
| Weights | Numbers attached to each connection between neurons. They set how strongly an input influences the next neuron. | Store what the network has learned; adjusted during training. |
| Bias | A number added to the neuron’s weighted sum before the activation function. | Shifts the point at which the neuron activates, so it can produce output even when all inputs are zero. |
| Activation Function | A math function applied to the neuron’s weighted sum. It adds non-linearity. | Determines how strongly the neuron fires and what it passes on; lets the network learn complex patterns. |
| Layers | Groups of neurons: the input layer receives data, hidden layers compute, and the output layer produces results. | Organize the flow of information and help extract features. |
| Loss Function | A math function that measures error by comparing the network’s output with the correct output. | Measures performance; training aims to reduce this value. |
| Optimizer | An algorithm such as Gradient Descent or Adam that updates weights and biases using the gradients of the loss. | Adjusts network parameters to reduce loss and improve accuracy. |
| Training Data | Examples used to teach the network: inputs paired with correct outputs. | The source the network learns from; good training data helps it generalize to new data. |

Each component plays a distinct role, and their interaction is what lets these systems learn and perform their tasks.

Challenges and Limitations of Neural Networks

Neural networks have enormous potential and many successes, but they also have real limitations. Researchers are actively working on these challenges.

Data Dependency

Neural networks typically need large amounts of high-quality, labeled training data to perform well.

- Data Collection and Annotation: Collecting and labeling millions of examples is expensive and time-consuming. Some applications simply lack enough data.

- Bias in Data: Training data can be biased, over- or under-representing certain groups. The network learns these biases, which can lead to unfair outcomes in areas such as loan approvals or facial recognition.

Computational Resources

Training complex neural networks requires a lot of computing power, because deep networks have many layers and millions, or even billions, of parameters.

- Hardware: Specialized hardware such as GPUs or TPUs helps. These chips process data in parallel, which suits the math operations at the heart of neural networks.

- Energy Consumption: Training large models uses a lot of energy, which raises environmental concerns.

- Time: Even on powerful hardware, training can take days, weeks, or even months.

Explainability (The Black Box Problem)

A major challenge is understanding why a network makes a particular decision.

- Lack of Transparency: We can see the inputs and outputs, but inside are millions of weights and biases. This makes it very hard to trace how the network reached its answer.

- Trust and Accountability: In critical applications such as healthcare or autonomous driving, knowing why an AI made a decision is essential for building trust, correcting errors, and meeting legal obligations.

- Debugging: This opacity also makes debugging hard. When a network makes mistakes, finding the cause is difficult.

Overfitting

Overfitting is a common problem. It happens when a network learns the training data too well, including its noise and quirks, and then performs poorly on new data.

- Memorization vs. Learning: The network memorizes specific examples instead of learning general patterns, so its performance drops on data it has not seen.

- Mitigation: Techniques such as regularization, dropout layers, and early stopping help fight overfitting, but it remains a constant concern when building models.
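Early stopping is simple enough to sketch directly: track the loss on held-out validation data and stop once it stops improving. The `patience` value, the function name `early_stopping_epoch`, and the loss numbers below are hypothetical. In practice you would also keep a copy of the weights from the best epoch.

```python
def early_stopping_epoch(validation_losses, patience=3):
    """Return the best epoch under a simple early-stopping rule.

    Training stops once the validation loss has failed to improve for
    `patience` epochs in a row; the best epoch seen so far is returned.
    """
    best_epoch, best_loss = 0, float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss keeps rising: likely overfitting
    return best_epoch

# Hypothetical validation losses: improving at first, then rising as the
# network starts memorizing the training set.
val_losses = [0.90, 0.62, 0.48, 0.41, 0.43, 0.45, 0.50, 0.58]
print(early_stopping_epoch(val_losses))
```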

Adversarial Attacks

Neural networks can be surprisingly fragile: small, carefully crafted changes to the input data can fool them. Such inputs are called adversarial examples.

- Exploiting Vulnerabilities: An attacker adds tiny, carefully chosen noise to an image. A human eye would not notice the change, but the network misclassifies the image, for example reading a stop sign as a yield sign.

- Security Implications: This creates real security risks in applications such as self-driving cars and medical diagnosis.
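The idea can be shown on a toy linear classifier using the fast gradient sign method (FGSM), one well-known attack from the research literature. For a linear score w·x + b, the gradient with respect to the input is simply w. Shifting every input by a small epsilon against the sign of its weight therefore lowers the score as much as possible within that budget. The 100 weights, the input, epsilon, and the function names are all hypothetical.

```python
def sign(v: float) -> int:
    """Return +1, -1, or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)

def score(weights, bias, x):
    """Linear classifier: a positive score means class 1 (say, "stop sign")."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def fgsm_lower_score(weights, x, epsilon):
    """Fast-gradient-sign step that pushes a linear score down.

    The gradient of w.x + b with respect to x is w, so moving each input by
    -epsilon * sign(w_i) lowers the score as much as any change of at most
    epsilon per input can.
    """
    return [xi - epsilon * sign(w) for xi, w in zip(x, weights)]

# Hypothetical model and input: 100 "pixels", weights alternating +0.1 / -0.1.
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
bias = 0.0
x = [0.5 + 0.02 * sign(w) for w in weights]  # an input the model calls class 1
x_adv = fgsm_lower_score(weights, x, epsilon=0.03)

print("original score:   ", round(score(weights, bias, x), 3))
print("adversarial score:", round(score(weights, bias, x_adv), 3))
print("largest change to any input:", max(abs(a - b) for a, b in zip(x, x_adv)))
```

No input changes by more than 0.03. Yet because the tiny changes all push in the same direction, they add up across 100 inputs and flip the score from positive to negative. Real images have many thousands of pixels, which gives such attacks even more room.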

Clarity vs. Performance Trade-off

A model’s performance and its interpretability often conflict. Simpler models are easier to understand but usually less accurate, while complex networks perform well but are harder to interpret.

Researchers are actively working on all of these challenges. Advances in explainable AI (XAI), better training methods, and ethical AI development are making neural networks more reliable and transparent. Despite their limitations, neural networks keep growing in power and will continue to drive AI innovation.

Conclusion

We have explored neural networks and looked past their complex image. These systems are loosely inspired by the human brain. They learn by adjusting the connections between artificial neurons, using forward propagation and backpropagation.

Feedforward networks are foundational, CNNs excel with images, RNNs handle sequences, GANs generate new content, and Transformers master context. Each type has unique strengths suited to specific tasks.

The impact of neural networks is broad and clear. They touch nearly every part of modern life: they power recommendations and voice assistants, aid medical diagnosis, and help self-driving cars navigate. They are not just theory; they drive today’s AI revolution.

We also saw the key challenges. Neural networks need large amounts of data and computing power, their decisions are hard to explain, and they are vulnerable to overfitting and adversarial attacks. These are active research areas, and addressing them is essential for using AI responsibly and for good.

Keep exploring, and do not let the complexity stop you. Look at open-source AI projects, read about applications that interest you, and try online AI demos. The best way to understand neural networks is to see them in action. The world of AI changes fast, and understanding neural networks is your key to navigating that future and contributing to it.
