Artificial Intelligence (AI) shapes our world. Terms like neural networks often appear. What are these systems? How do they power today’s smart applications? This article explains neural networks simply. It shows how modern AI works inside.

Neural networks are not just terms. They build powerful AI tools. Your phone’s voice assistant uses them. Netflix recommendations come from them. Advanced medical scans and self-driving cars rely on them. Neural networks drive changes in many areas and in daily life. Understanding them helps you grasp technology’s future. This applies to curious beginners, students, or professionals. You will learn how AI learns. You will see how it makes choices. You will understand its steady growth.

This guide will teach you:

- What a neural network is.

- The parts of an artificial neuron and how they connect.

- How neural networks learn from data.

- Types of neural networks and their uses.

- Examples of neural networks working today.

- Their strengths and limits.

- Ways for beginners to explore neural networks.

- A look at their future.

Neural Networks Explained

Think of a human brain. It learns, sees patterns, and decides. Neural networks are computer models loosely inspired by how the brain works. They find patterns. They process data. They learn from experience. The resemblance is an analogy, not an exact copy of the brain.

A Brain Example

Your brain has billions of tiny cells called neurons. These neurons link through pathways called synapses. When you learn, these links get stronger or weaker. A neural network works much the same. It is a big group of connected artificial neurons. They process information together. Like brain neurons send signals, artificial neurons pass data.

The Artificial Neuron (Perceptron)

An artificial neuron is the network’s basic part. The earliest and simplest version is called a perceptron, and the name is still often used loosely for any artificial neuron. It is far simpler than a brain neuron. But it does a key job:

- Inputs: It takes in signals. This is like dendrites getting signals from other neurons.

- Weights: Each signal gets a weight. This weight shows its importance. A higher weight means more effect on the neuron’s output. Think of these as connection strengths.

- Sum: All weighted signals add up.

- Bias: A bias value adds to this sum. The bias acts like an adjustable threshold. It makes the neuron fire more easily or less easily.

- Activation Function: The sum and bias then pass through an activation function. This function decides if the neuron should fire. It sets the neuron’s output. It adds needed complexity. This lets the network learn patterns. Common functions are ReLU, Sigmoid, and Tanh.

- Output: The activation function’s result is the neuron’s output. This output can then feed into other neurons.
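
The parts listed above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation. The function names (`sigmoid`, `neuron_output`) and the input, weight, and bias values are assumptions chosen only for the example.

```python
import math

def sigmoid(z):
    """Activation function: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs, plus the bias, passed through the activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(weighted_sum + bias)

# Hypothetical example values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

output = neuron_output(inputs, weights, bias)
print(round(output, 3))
```

Raising a weight or the bias pushes the output closer to 1. Lowering them pushes it closer to 0.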

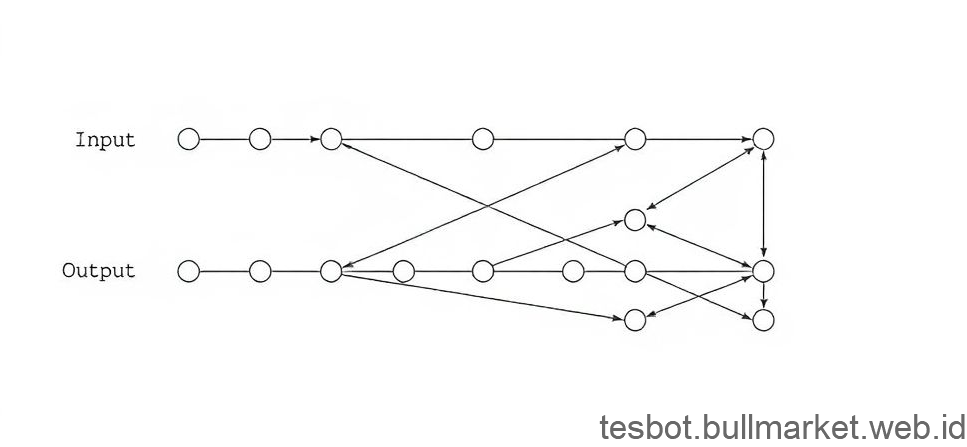

Layers of a Neural Network

Artificial neural networks are usually arranged in layers. Each layer holds connected neurons:

- Input Layer: Here, raw data enters the network. Each neuron in this layer represents one data item. For example, a pixel in an image, a word in a sentence, or a number.

- Hidden Layers: These layers sit between the input and output. One or many hidden layers can exist. They do the core work. They perform complex math. They pull out data features and patterns. A network with many hidden layers is a deep neural network. This gives us the term deep learning. More complex problems might need more hidden layers.

- Output Layer: This layer gives the network’s final result. The number of neurons here depends on the task. For example, a network classifying images into 10 types might have 10 output neurons. Each neuron shows one type.
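
For the 10-type example above, one common way to read the 10 output neurons is to turn their raw scores into probabilities. The sketch below uses the softmax function for this. Softmax is an assumption here, since the text does not name a specific method, and the scores are made up for illustration.

```python
import math

def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores from 10 output neurons (one per type).
scores = [1.2, 0.3, -0.8, 2.5, 0.0, -1.1, 0.7, 0.1, -0.4, 1.9]

probabilities = softmax(scores)
predicted_class = probabilities.index(max(probabilities))
print(predicted_class)
```

The neuron with the highest probability gives the network’s answer.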

Weights and Biases: The Network’s Memory

A neural network’s knowledge lives in its weights and biases. These numbers change during learning. When a network does a task well, its weights and biases are set right. This allows it to spot patterns or links in the data. Without correct weights and biases, the network would just give random results.

How Neural Networks Learn

Neural networks learn from data. This is their main strength. They are not programmed for every single case. Their learning process repeats. It works by finding and fixing mistakes.

The Learning Process: A Quick Look

Learning often uses much training data. This data includes inputs with their correct outputs. This is called supervised learning. The network learns through trial and error:

- It makes a guess based on its current weights and biases.

- It checks its guess against the correct answer.

- It changes its internal settings (weights and biases). This reduces the error for later guesses.

This cycle happens thousands or millions of times. The network gets good at its task.

Forward Propagation: Making a Guess

The first step in learning is forward propagation. Input data goes into the network. It moves through hidden layers. It then makes an output.

- Step 1: Input to First Hidden Layer: Input values multiply by their weights. They add up. They pass through an activation function in the first hidden layer’s neurons.

- Step 2: Hidden Layer to Hidden Layer (if many): The outputs from the first hidden layer become inputs for the next. This repeats the weighted sum and activation process.

- Step 3: Last Hidden Layer to Output Layer: The processed information reaches the output layer. This produces the network’s guess.

At this point, the network does not know if its guess is right. It just calculates an output from its current setup.
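
The three steps above can be sketched as a small forward pass. This is a simplified illustration in plain Python. The layer sizes, weights, and helper names (`layer_forward`, `forward`) are assumptions made for the example.

```python
import math

def relu(z):
    """ReLU activation: pass positive values, block negative ones."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid activation: squash values into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases, activation):
    """Compute one layer. Each row of `weights` belongs to one neuron."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        outputs.append(activation(z))
    return outputs

def forward(inputs, layers):
    """Pass the inputs through every layer in order."""
    values = inputs
    for weights, biases, activation in layers:
        values = layer_forward(values, weights, biases, activation)
    return values

# Hypothetical network: 2 inputs -> 3 hidden neurons (ReLU) -> 1 output (sigmoid).
hidden = ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu)
output = ([[0.7, -0.5, 0.2]], [0.05], sigmoid)

guess = forward([1.0, 2.0], [hidden, output])
print(guess)
```

The printed value is only a guess. Nothing in this code checks whether it is correct.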

The Cost Function: Measuring Errors

After a network makes a guess, it needs to check it. This is where the cost function comes in. It is also called a loss function or error function. The cost function measures the difference between the network’s guess and the correct answer. A higher cost means a bigger error, so the guess was far off. The goal of learning is to make this cost as small as possible. Common cost functions are Mean Squared Error for number predictions and Cross-Entropy for category predictions (classification).
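
The two cost functions named above can be written in a few lines. This is a minimal sketch. The predictions and targets are made-up values, and the cross-entropy version handles a single example with one correct class.

```python
import math

def mean_squared_error(predictions, targets):
    """Average of the squared differences. Used for number predictions."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def cross_entropy(probabilities, true_class):
    """Negative log of the probability given to the correct class."""
    return -math.log(probabilities[true_class])

# Hypothetical predictions, for illustration only.
mse = mean_squared_error([2.5, 0.0, 2.0], [3.0, -0.5, 2.0])
confident_right = cross_entropy([0.1, 0.8, 0.1], true_class=1)
confident_wrong = cross_entropy([0.8, 0.1, 0.1], true_class=1)

print(mse, confident_right, confident_wrong)
```

The confident wrong guess gets a much higher cost than the confident right one. That gap is what pushes the network to improve.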

Backpropagation: Changing Weights

This step is how neural networks learn from errors. The network pushes the error back through its layers, from the output layer toward the input layer. This is called backpropagation.

- Find Error: The cost function measures the error at the output.

- Spread Error: This error then moves back through the network. Each neuron in hidden layers gets a part of this error. The amount depends on its role in the mistake.

- Change Weights and Biases: Each neuron changes its weights and biases slightly. It bases this on the error it got. These changes aim to reduce the error, so the next forward pass with the new weights should be slightly better.

Think of it like a coach giving advice. The output is the team’s game score. If the score is bad, the coach tells each player. The coach explains how their plays added to the loss. Players then change for the next game.
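
In math terms, spreading the error back is the chain rule from calculus. The sketch below applies it to a tiny, hypothetical network with one hidden neuron and one output neuron. It assumes sigmoid activations and a squared-error cost. It then compares the result with a simple numerical estimate as a sanity check.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    """Tiny chain: input -> one hidden neuron -> one output neuron."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = sigmoid(w2 * h + b2)
    return h, y

def loss(x, target, params):
    """Squared error between the network's guess and the target."""
    _, y = forward(x, params)
    return (y - target) ** 2

def backprop(x, target, params):
    """Apply the chain rule from the output back toward the input."""
    w1, b1, w2, b2 = params
    h, y = forward(x, params)
    delta_out = 2 * (y - target) * y * (1 - y)    # error signal at the output neuron
    delta_hidden = delta_out * w2 * h * (1 - h)   # share of the error passed back
    # Gradients in the same order as params: w1, b1, w2, b2.
    return [delta_hidden * x, delta_hidden, delta_out * h, delta_out]

# Hypothetical starting values, chosen only for illustration.
params = [0.6, -0.1, -0.4, 0.2]
grads = backprop(1.5, 1.0, params)

# Sanity check: nudge each parameter and measure how the loss changes.
eps = 1e-6
numeric = []
for i in range(len(params)):
    bumped = list(params)
    bumped[i] += eps
    numeric.append((loss(1.5, 1.0, bumped) - loss(1.5, 1.0, params)) / eps)

print(grads)
print(numeric)
```

The two printed lists should agree closely. Each number says how the error would change if that weight or bias grew a little.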

Optimization Algorithms (Gradient Descent)

Backpropagation shows how much each weight or bias contributed to the error. Optimization algorithms like Gradient Descent use that information to decide how to update them.

- Gradient Descent: Imagine you are blindfolded on a mountain. You want to reach the lowest spot. Gradient Descent tells you to take small steps. You move in the direction that goes down fastest. In a neural network, it finds the slope of the cost function. This applies to each weight and bias. Then it updates weights and biases opposite to this slope. This moves towards less error.

- Learning Rate: The learning rate is a key setting in gradient descent. It sets the size of the steps down the error mountain. A too-large learning rate might overshoot the lowest spot, or even make the error grow. A too-small one might make learning very slow.

The network keeps doing forward passes. It calculates the cost. It pushes the error back. It uses an optimizer to change weights. This way, the neural network steadily tunes its settings. It gets better and better at its job.
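
The loop described above can be sketched with the simplest possible model: a single neuron with no activation, fitted to a straight line. The data set, the `train` function, and the three learning rates are assumptions chosen to show the effect of step size.

```python
def train(learning_rate, steps=200):
    """Fit y = w*x + b to a tiny data set with plain gradient descent."""
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2.0 * x + 1.0 for x in xs]  # hypothetical data from y = 2x + 1
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Forward pass: guess, then measure the error for each example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Slope of the mean squared error with respect to w and b.
        grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(2 * e for e in errors) / n
        # Step opposite to the slope, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    final_errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    final_loss = sum(e * e for e in final_errors) / n
    return w, b, final_loss

for rate in (0.0005, 0.05, 0.2):
    w, b, loss = train(rate)
    print(f"learning rate {rate}: w={w:.3g}, b={b:.3g}, loss={loss:.3g}")
```

On this data, the middle rate ends close to w = 2 and b = 1. The smallest rate is still far from the answer after 200 steps. The largest rate overshoots on every step, so the error grows instead of shrinking.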

Types of Neural Networks

The artificial neuron is the basic part. Neural networks come in many designs. Each design fits different data and tasks. Knowing these types helps you see their full range.

| Network Type | Short Name | Uses | Main Feature(s) |
|---|---|---|---|
| Feedforward Networks | FNN / MLP | Classify data, predict numbers, find patterns (general use) | Data moves one way, input to output, no loops. MLPs are a common type with hidden layers. Used as a starting point. |
| Convolutional Networks | CNN | Image recognition, computer vision, video analysis, face recognition | Processes grid-like data like images. Uses convolutional layers to learn spatial features. Includes pooling and fully connected layers. |
| Recurrent Networks | RNN | Natural Language Processing (NLP), speech recognition, time series prediction | Has memory. Uses past inputs to affect current output. Good for data where order matters. Faces problems over long sequences. |
| Long Short-Term Memory | LSTM | Better RNN for NLP, speech recognition, music creation | A specific RNN type. It greatly reduces the vanishing gradient problem. It learns long-term links in sequence data using gates. |
| Generative Adversarial Networks | GAN | Image creation (realistic photos), art, data augmentation | Two competing networks. A Generator creates fake data. A Discriminator tries to tell real from fake. They train against each other. This creates very real-looking data. |
| Transformers | Transformers | Advanced NLP (machine translation, text generation, summarizing), computer vision | Changed NLP. Uses attention. It weighs the importance of different parts of the input sequence. It processes sequences at the same time. This handles very long links. It powers models like GPT-3. |

Feedforward Neural Networks (MLPs)

These are the simplest type. Information moves in one direction only. It goes from the input layer. It passes through any hidden layers. It then reaches the output layer. No loops or cycles exist. When they have one or more hidden layers with non-linear activation functions, they are often called Multi-Layer Perceptrons (MLPs). MLPs work for general tasks. They classify data, like spam emails. They predict numbers, like house prices.

Convolutional Neural Networks (CNNs)

CNNs changed image and video work. Unlike MLPs, CNNs are built to use the grid layout of pixel data. They use convolutional layers. These layers slide small filters over the input. They learn a hierarchy of features, from edges to textures to whole objects. This makes them good at image recognition. They are good at object detection. They help with medical image analysis. Your phone’s face unlock feature likely uses a CNN.
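
To show what “applying a filter” means, here is a minimal sketch of one convolution in plain Python. The image and the vertical-edge filter are hand-made for the example. A real CNN learns its filter values during training. Like most deep learning libraries, this code slides the filter without flipping it.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Hypothetical 4x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-made filter that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The output is large only where the filter sits across the boundary between dark and bright. It is zero over the flat regions.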

Recurrent Neural Networks (RNNs)

RNNs specialize in sequential data. The order of information matters here. RNNs have loops. Information carries over from one step to the next. This gives them a type of memory. They work well for natural language, where word meaning depends on prior words. They also handle speech recognition and time series predictions. Basic RNNs find it hard to recall long-range links. This led to gated versions such as LSTMs and GRUs (Gated Recurrent Units).
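
The loop that gives an RNN its memory can be sketched with a single recurrent neuron. This is a simplified illustration. The weights (`w_x`, `w_h`), the tanh activation, and the input sequences are assumptions chosen for the example.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: mix the new input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the neuron, carrying the state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Two hypothetical sequences that differ only in their first item.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a)
print(states_b)
```

The first input still affects the state two steps later, which is the memory. It also fades at every step, which hints at why basic RNNs struggle with long sequences.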

Generative Adversarial Networks (GANs)

GANs are a unique neural network type. They have two networks working against each other: a generator and a discriminator. The generator creates new data. This might be real-looking images or sounds. The discriminator tries to tell if the data is real or false. They train against each other. The generator gets better. It makes data the discriminator cannot tell from real data. GANs create very real images, art, and data for training other models.

Transformers

Transformers improved Natural Language Processing (NLP) a lot. Unlike RNNs, they do not process data step by step. Instead, they use attention methods. They weigh the importance of different input parts. This allows them to process the whole sequence at once. This lets them handle very long links. They work fast for machine translation. They help with text summarizing. They create human-like text. Models like GPT-3 and GPT-4 use them. Their success also led to their use in computer vision.
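
The attention idea can be sketched as scaled dot-product attention in plain Python. This is a simplified illustration. It uses the same made-up token vectors as queries, keys, and values, while real Transformers first pass them through learned projections.

```python
import math

def softmax(scores):
    """Turn scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this position against every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is a weighted mix of all value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-number vectors standing in for three tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row shows how much one token attends to every token in the sequence. No row depends on an earlier row’s result, which is why the whole sequence can be processed at once.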

Where Neural Networks Work (Real-World Uses)

Neural networks are not just ideas. They power many AI applications. They work across different businesses. They learn from large data sets. This makes them very useful.

Image Recognition and Computer Vision

This is a well-known use. CNNs greatly help here:

- Face Recognition: Unlocking your phone, tagging friends on social media, or security.

- Object Detection: Finding objects in pictures and videos. This helps self-driving cars, security cameras, and factory quality checks.

- Medical Imaging: Finding diseases like cancer or tumors in X-rays and MRI scans. On some narrow, well-defined tasks, they have matched or beaten human specialists in studies.

- Retail: Studying shopper movement, shelf layouts, and finding product flaws.

Natural Language Processing (NLP)

Neural networks, like LSTMs and Transformers, changed how machines grasp human language:

- Machine Translation: Google Translate and other services change text between languages.

- Speech Recognition: Voice assistants like Siri or Alexa change spoken words into text.

- Sentiment Analysis: Finding the feeling in text. For example, customer reviews or social media posts. This helps gauge public mood.

- Chatbots and Virtual Assistants: These power AI conversations. They answer questions. They give support. They talk like humans.

- Text Summaries and Creation: They make summaries of long papers. They create new content, articles, and code.

Recommendation Systems

How does Netflix know what movies you might like? How does Amazon suggest products? Neural networks study your past actions. This includes movies watched or items bought. They also look at actions of similar users. Then they guess what you will enjoy next. This personal touch helps keep people using many platforms.

Healthcare and Medicine

Neural networks change healthcare beyond medical images:

- Drug Discovery: Finding new drug candidates. Predicting how well they will work.

- Disease Diagnosis: Looking at patient data. This includes symptoms, lab results, and genes. They help with early and correct diagnosis.

- Personalized Care: Adjusting treatments for each person. This uses their unique genes and health facts.

- Patient Outcome Guessing: Predicting if patients will need to return to the hospital. Guessing how they will respond to treatment.

Self-Driving Cars

Neural networks are very important for self-driving cars:

- Seeing: Recognizing other cars, people, road signs, and barriers. This comes from camera, lidar, and radar data.

- Route Planning: Finding the safest and best way to go.

- Decision Making: Deciding when to speed up, stop, turn, or change lanes. This uses live road conditions.

Money and Fraud Detection

The money industry uses neural networks for safety and clarity:

- Fraud Detection: Finding odd patterns in credit card buys or insurance claims. This may show fraud.

- Automated Trading: Predicting stock market moves. Making trades automatically.

- Credit Scores: Checking credit standing. This uses a wide range of money data.

New Uses

Their uses keep growing:

- Robotics: Robots can sense their surroundings. They can move. They can do complex jobs.

- Weather Modeling: Predicting weather. Understanding climate change effects.

- Science Research: From particle physics to materials science. Neural networks speed up discoveries. They look at huge data sets. They copy complex systems.

Neural Network Strengths and Limits

Neural networks are powerful. But they are not perfect for everything. Knowing their good and bad points helps set clear expectations. It helps use them well.

Strengths

Neural networks give several benefits over old programming methods:

- Good Pattern Finding: Their main strength is finding patterns. They find hard, hidden patterns. They work with large and varied data sets. Humans or old algorithms often miss these.

- Change and Learning from Data: They learn from examples. They do not need programs for every case. This makes them good at adapting to new data. They improve over time. They perform better with more information.

- Handles Noise: They often guess correctly. This happens even with noisy, missing, or slightly wrong data.

- High Performance on Hard Tasks: For image work, speech work, and language understanding, neural networks often beat older machine learning tools. On some specific benchmarks, they match or exceed human performance.

- Works in Parallel: A neural network’s design helps with parallel math. This allows faster training and use. This is true with special hardware like GPUs.

- Automatic Feature Extraction: Deep neural networks learn useful features by themselves, straight from raw data. This reduces the need for manual feature engineering. That work is often slow and requires much domain knowledge in older machine learning.

Challenges

Neural networks have impressive powers. They also have limits:

- Needs Data: Neural networks, especially deep ones, need lots of data. They need much good, labeled training data to work well. Getting and labeling this data can cost much. It takes time. It can be hard.

- Cost of Computing: Training big neural networks takes much computing power. This needs GPUs or TPUs and energy. This can block people or groups with few resources.

- Black Box Problem (Explainability): It is often hard to know why a neural network makes a choice. Many linked neurons and math changes make it hard to trace how it thinks. This lack of clarity is a big worry in areas like healthcare or money. There, being clear is very important.

- Overfitting: A network can overfit. This can happen if it is trained too long or on too little data. It memorizes the training data, including its noise. But it performs poorly on new, unseen data. Methods like regularization and early stopping help fight this.

- Sensitive to Input Changes (Adversarial Attacks): Neural networks can be surprisingly fragile. Small changes to input data, too slight for a person to notice, can make a network mislabel an image. Or it can make a wrong guess with high confidence. This brings safety risks in sensitive uses.

- Hyperparameter Tuning: Building a neural network means choosing many settings. Examples include layer count, neurons per layer, learning rate, and activation types. Finding the best settings often needs much testing and skill.

- Generalization Issues: They can learn well within their training data. But neural networks often struggle to work well on data that is very different from what they learned.
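
The overfitting item above mentions early stopping. The idea can be sketched in a few lines: watch the error on held-out validation data and stop once it no longer improves. The function name, the `patience` setting, and the loss values below are assumptions made for illustration.

```python
def early_stopping_epoch(validation_losses, patience=2):
    """Return the index of the epoch whose weights should be kept."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation error keeps rising: stop training
    return best_epoch

# Hypothetical validation losses, one per training epoch.
losses = [0.90, 0.60, 0.45, 0.40, 0.42, 0.47, 0.55]

stop_at = early_stopping_epoch(losses)
print(stop_at)
```

Here the validation loss is lowest at epoch 3 and then rises. The rise suggests the network has started to memorize the training data.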

Getting Started with Neural Networks

Are you ready to learn more? Do you want to try neural networks? Here is a plan for beginners.

Learning Tools

- Online Courses: Sites like Coursera, edX, and Khan Academy have good courses on machine learning and the math behind it. Look for the “Deep Learning Specialization” by Andrew Ng. Its first course is “Neural Networks and Deep Learning.”

- Books: “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville is a main book, but it is advanced. For beginners, books like “Grokking Deep Learning” by Andrew Trask or “Neural Networks and Deep Learning” by Michael Nielsen (a free online book) are good.

- YouTube Channels: Channels like 3Blue1Brown give clear explanations of backpropagation. StatQuest with Josh Starmer and many university lectures show good content.

- Blogs and Guides: Websites like Towards Data Science and Machine Learning Mastery have practical guides and code examples.

Main Programming Languages

- Python: This is the top language for machine learning. It is simple. It has many libraries. It has strong community support. This makes it the right choice.

Popular Tools

Once you know Python, learn a deep learning tool. These tools hide much of the complex math. You can build and train neural networks with few lines of code.

- TensorFlow: Google built TensorFlow. It is a full open-source platform for machine learning. It is sturdy and widely used.

- PyTorch: Meta (Facebook) built PyTorch. It is known for its flexibility. It has a Python-friendly interface. It builds its computation graph dynamically as the code runs. This makes fixing errors easier. It is popular in research and increasingly used in industry.

- Keras: Keras is a high-level tool that is easy to use. It ships with TensorFlow, and newer versions can also run on other backends such as PyTorch and JAX. It aims for fast testing and ease of use. This makes it good for beginners.

Simple Projects to Start

The best way to learn is by doing. Start with simple, clear projects:

- MNIST Digit Classification: Train a neural network to recognize handwritten digits (0-9). It is a classic and a good first deep learning project.

- Image Grouping (e.g., CIFAR-10): Move to grouping small color images. These can be cars, planes, or birds.

- Text Grouping (e.g., Spam Finding): Build a network to group emails as spam or not spam.

- Guessing House Prices: A simple task using a small data set.

Start with data sets ready in the tools. Do not get stuck gathering data or doing complex data preparation for your first projects. Focus on building and training models.

The Future of Neural Networks

Neural networks change technology fast. What we see today is just the start.

Future Progress

- Bigger, More Capable Models: We will see even larger models. They will be more complex. This is true for Large Language Models (LLMs). This is also true for multi-modal AI (text, image, and sound combined).

- More Efficient Learning: Research continues. It aims to make neural networks learn more efficiently. This means less data, lower computing cost, and lower energy use.

- Better Use and Strength: People work to make networks less open to attacks. They also work to make them better at learning new situations.

- Neuro-Symbolic AI: This way forward looks promising. It mixes neural networks (pattern finding) with logic-based AI (reasoning). This creates stronger and clearer AI systems.

Ethics and Responsible AI

Neural networks get more common and stronger. Ethical thoughts will matter more:

- Bias and Fairness: Make sure AI models are not unfair to groups. This can happen from biased training data.

- Clarity and Explainability: Develop ways to know why AI models make choices. This is called Explainable AI (XAI).

- Privacy: Keep private data safe. This data is used in training and guessing.

- Security: Guard against bad uses and attacks.

- Rules: Governments and groups will focus more on setting rules for making and using AI responsibly.

More Industry Use

Expect neural networks to spread to more businesses. They will affect more of daily life. This goes from tailored education to art. It includes studying the environment and new materials. As new data sources appear, their ability to adapt will let neural networks find new ways to add value. They will lead change.

Conclusion

Neural networks are powerful computer models. They are loosely modeled on the human brain. They form the base of modern Artificial Intelligence. We looked at how single artificial neurons process inputs. We saw how they form layers for complex networks. These networks learn from data. They use forward passes, error measurement, and weight changes. This learning sets them apart. We also saw types like CNNs for images. RNNs and LSTMs work with sequences. Transformers greatly affect language. Neural networks have many uses. These include image work, language work, healthcare, money, and self-driving cars. They have great strengths in pattern finding and adapting. But they also have issues. These include needing much data, high computer costs, and the black box problem.

The world of neural networks is large and active. If this guide made you curious, keep going. The best way to understand them is to try them. Start today. Get a simple data set like MNIST. Pick an easy project. Code it using Python with tools like Keras or PyTorch. AI’s future builds on these ideas. Your path to understanding has just begun.
