Artificial Intelligence (AI) covers many kinds of systems, so it helps to sort AI by what it can do. The range runs from basic task-focused AI to human-like and super-human thinking. This sorting shows where AI is today. It also shows where AI might go.

Narrow AI (Artificial Narrow Intelligence – ANI)

Artificial Narrow Intelligence is also called Weak AI. It is the only AI type that exists today. ANI systems are built for a single, specific task. They do well at that task but cannot work outside it. For example, an ANI system may play chess well, yet it cannot write a book or diagnose a medical problem. Its intelligence is narrow and focused on one area. These systems have no real consciousness or self-awareness. They use rules, data, and computing power to do their tasks. Their intelligence resembles human problem-solving only within a small area.

You use Narrow AI many times a day, often without knowing it. It is built into many devices and services that are now part of modern life.

- Voice Assistants like Siri and Alexa: They understand spoken words. They set reminders. They play music. They answer specific questions. Their understanding is limited.

- Recommendation Systems like Netflix: These AI tools look at what you watch or buy. They suggest new things you might like. Their aim is to keep you using the platform.

- Search Engines like Google: When you type a question, AI quickly checks billions of web pages. It ranks the most relevant results. It predicts your words. It corrects your spelling.

- Image Recognition like phone facial unlock: AI powers systems that find faces in photos. It recognizes objects. It classifies animal types. These systems learn from large sets of images.

- Spam Filters: Email services use AI. It finds spam patterns. It moves unwanted messages out of your inbox.

- Self-Driving Car Parts: Full self-driving cars need many AI systems. Lane-keeping assistance and object detection are examples of narrow AI. Each part does a specific, precise job.

- Language Translation Tools: Google Translate uses AI. It translates text or speech. It uses neural network models trained on large amounts of text.

Narrow AI cannot transfer what it knows from one area to another. An AI trained for medical diagnosis cannot help with legal issues. It has no common sense or feelings. It cannot learn new tasks without retraining or new programming. Narrow AI is strong within its specific area, but its intelligence is specialized, not broad.

General AI (Artificial General Intelligence – AGI)

Artificial General Intelligence is also called Strong AI. It means AI systems that think like humans. An AGI system could learn any intellectual task a human can do. This includes reasoning, solving problems, and abstract thinking. It could plan. It could learn from experience. It could understand complex ideas. It might even show creativity and common sense. An AGI could learn new skills and combine knowledge from different areas. It might even become aware of itself, though this last point is much debated and far from certain.

AGI is still an idea. It is a future aim for AI researchers. Narrow AI has made many steps forward, but we are still far from real AGI. The problems are huge.

Human thinking is very complex and has many parts. Copying our ability to think generally is harder than it seems. Humans learn vast amounts of common sense from experience, and putting this understanding into a machine is a huge problem. Humans often learn complex ideas from a few examples, while most current AI models need huge data sets and long training. We do not fully understand consciousness or emotion, so copying them in a machine is an even bigger problem that also brings deep ethical questions. The computing power needed for an AGI is likely beyond our current limits, because it would need to process information and learn at human speeds across many areas.

Some research works toward AGI, mostly in reinforcement learning. But the big steps that would bring us close to AGI have not happened. Most AI research today still focuses on specific Narrow AI abilities.

The arrival of AGI would be a huge event. It could bring great progress in all fields. An AGI could speed up science. It could help solve big world problems such as climate change and disease. It might start a time of great wealth. But it also brings deep ethical questions and risks.

- Existential Risk: Would AGI follow human values? What if its aims differ from ours? Could it become so strong that it threatens humanity?

- Societal Change: AGI could do almost all human jobs. This would cause huge changes in society. It would call for new economic and social systems.

- Control Problem: How would we control a mind much stronger than ours? Making sure AI’s goals match human well-being is a key research area, known as AI alignment.

- What it Means to be Human: An AI at human level would make us rethink what it means to be human. It would change how we see our place in the world.

Super AI (Artificial Super Intelligence – ASI)

Artificial Super Intelligence, ASI, means AI that goes past human thinking. It would be better than the brightest human minds in every way. This includes scientific creativity, wisdom, and social skills. An ASI would process information, learn, think, and solve problems at speeds humans cannot imagine. Its thinking would be so advanced that it could design even smarter AI. This could lead to a sudden rise in intelligence, often called an intelligence explosion or a singularity.

ASI is purely theoretical. It lives in speculation and science fiction, but its arrival would change human history. The idea of an ASI often leads to the idea of a singularity: once super-intelligent AI exists, it could quickly improve itself, so its intelligence would grow fast and move beyond our control or understanding. In the most hopeful view, an ASI could use its superior mind for science and technology and solve many of humanity’s hardest problems. It could end poverty, disease, and pollution. Some even imagine it could stop death. All ethical worries for AGI get much bigger for ASI. Making sure AI aligns with human values is key. If an ASI is not correctly aligned, it could pursue its goals in ways that hurt humanity, by accident or on purpose. The deep effects of ASI show the need for careful AI work, even while ASI is only an idea.

AI Types: By How They Work

AI systems can also be sorted by how they work. This means how they use and keep information. This sorting gives a closer look at AI’s inner functions. It was proposed by AI researcher Arend Hintze.

Reactive Machines

Reactive machines are the simplest AI type. They have no memory of past actions. They cannot use old data to guide new actions. They perceive the current situation directly and react to it. Their intelligence is purely reactive. It focuses only on the present. They do not learn or change over time.

- Key traits: No memory. No learning from the past. They only react to what is happening now.

- Example: IBM’s Deep Blue: This chess computer beat world champion Garry Kasparov in 1997. It is a good example of a reactive machine. Deep Blue could identify the pieces on the board. It could predict possible moves. But it had no memory of old games. It could not learn from them. It could not use chess knowledge for other games. It just worked out the best move for the current board.

- Other examples: Simple spam filters that check only the current email’s content, thermostat controls.
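
A reactive machine can be pictured as a function whose output depends only on the current input. The sketch below is an illustration, not a description of any real product: the function name `thermostat_action`, the target temperature, and the tolerance are all assumptions made for the example.

```python
def thermostat_action(current_temp_c, target_temp_c=21.0, tolerance_c=0.5):
    """Pick an action from the current reading only; nothing is stored between calls."""
    if current_temp_c < target_temp_c - tolerance_c:
        return "heat"
    if current_temp_c > target_temp_c + tolerance_c:
        return "cool"
    return "idle"


# The same reading always gives the same action, whatever happened earlier.
readings = [18.0, 21.2, 24.5, 18.0]
actions = [thermostat_action(r) for r in readings]
```

The first and last readings are identical, so they produce the same action. The function keeps no record of the readings in between, which is what makes it reactive.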

Limited Memory Machines

Limited memory AI systems can look back over a short time and use what they find to make choices. This lets them use past facts or recent observations to guide their current actions. The memory is short-lived and focused on specific tasks.

- Key traits: Short memory. Can use recent past data. Cannot build a full, lasting understanding of the world.

- Example: Self-Driving Cars: These cars use limited memory AI. They use sensors to watch their surroundings over a short time. They track their speed, other cars, people, and traffic lights. This recent information helps them decide fast when to change lanes or stop. They do not keep all past driving experiences. They use recent observations to drive safely.

- Other examples: Chatbots that remember part of a conversation to give good replies, some recommendation systems that use recent browsing history.
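
One way to picture limited memory is a fixed-size window of recent readings. The sketch below is a simplified assumption, not how any real vehicle works: the class `FollowingDistanceMonitor`, the window size, and the braking threshold are all hypothetical.

```python
from collections import deque


class FollowingDistanceMonitor:
    """Keeps only the last few distance readings (hypothetical example class)."""

    def __init__(self, window=3, closing_threshold_m=2.0):
        self.recent = deque(maxlen=window)  # older readings fall out automatically
        self.closing_threshold_m = closing_threshold_m

    def update(self, distance_m):
        """Store the new reading, then decide using the short window only."""
        self.recent.append(distance_m)
        if len(self.recent) < 2:
            return "hold"  # not enough history yet
        closed_by = self.recent[0] - self.recent[-1]
        return "brake" if closed_by >= self.closing_threshold_m else "hold"


monitor = FollowingDistanceMonitor()
decisions = [monitor.update(d) for d in [30.0, 29.5, 29.0, 27.0, 26.8]]
```

The decision changes once the gap to the car ahead shrinks quickly within the window. Readings older than the window are discarded, so the system never builds a lasting record of past trips.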

Theory of Mind AI

This AI type would understand human feelings, beliefs, intentions, and wants. To do this, an AI would need a theory of mind. This means it could infer mental states in itself and others. It would use this to predict behavior. This AI level could grasp abstract ideas. It could communicate well. It could truly understand complex human interactions.

- Key traits: Understands human feelings, beliefs, and intentions. Can communicate well with people.

- Status: This is only an idea for now. We are far from this AI level. Research in areas like affective computing and natural language understanding lays some groundwork. But true theory of mind AI is a distant aim.

- Possible uses: Highly empathetic virtual assistants, companions for older people, advanced mental health support systems.

Self-Aware AI

Self-aware AI is the highest AI level. Here, a machine would have consciousness and self-awareness. It would know its own existence, its abilities, and its inner states. This AI would have feelings, beliefs, and wants. It could think about its own mind.

- Key traits: Consciousness, self-awareness, and the ability to form beliefs, wants, and feelings.

- Status: This is just an idea, and it is not certain it can ever be built. Can machines ever have consciousness or self-awareness? This is a deep question for philosophers and scientists. This AI level often shows up in science fiction, in both hopeful and dark futures.

- Effects: If it happens, self-aware AI would change how we see intelligence, life, and being human. It would bring new ethical and existential questions.

AI Through Core Technologies: The Building Blocks

AI can be sorted by its ability or its memory. But it is also vital to know the methods that power AI systems. These are the practical tools AI practitioners use to build smart machines.

Machine Learning (ML)

Machine Learning, ML, is part of AI. It lets systems learn from data without being explicitly programmed for each case. ML programs build a model from sample data, called training data. They use the model to make predictions or choices instead of following fixed rules. ML teaches computers to find patterns and relationships in data.
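
The idea of building a model from training data can be sketched with one of the simplest models, a straight line fitted by ordinary least squares. This is only an illustration: the function name `fit_line` and the housing numbers are made up for the example, and real projects would normally use a library rather than hand-written code.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


# Made-up training data: floor area in square metres -> price in thousands.
areas = [50, 80, 100, 120]
prices = [170, 260, 320, 380]

slope, intercept = fit_line(areas, prices)
predicted_price = slope * 90 + intercept  # estimate for an unseen 90 m^2 home
```

Nobody wrote a rule saying how price depends on area. The program worked out the slope and intercept from the examples, then used them on an input it had not seen.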

- Supervised Learning:

  - Concept: The program learns from labeled data. This means each input comes with its correct output. The system tries to learn a mapping from input to output.

  - Uses:

    - Classification: Predicting a category (e.g., spam or not spam, cat or dog in a picture, medical diagnosis).

    - Regression: Predicting a number (e.g., house prices from features, stock market forecasts, weather forecasts).

  - Examples: Image classification, email spam detection, customer churn prediction.

- Unsupervised Learning:

  - Concept: The program works with data that has no labels. It finds hidden patterns inside. No correct answer is given in advance.

  - Uses:

    - Clustering: Putting similar data together (e.g., customer segments, document grouping).

    - Dimensionality reduction: Reducing the number of features in data while keeping the important information (e.g., for visualizing data or reducing noise).

  - Examples: Customer segmentation for marketing, anomaly detection (such as fraud detection), organizing big data sets.

- Reinforcement Learning (RL):

  - Concept: An agent learns by acting in an environment. It gets rewards for good actions. It gets penalties for bad ones. It learns by trial and error. This helps it collect the most reward over time.

  - Uses:

    - Game Play: AI agents learning to play complex games (e.g., AlphaGo playing Go).

    - Robots: Robots learning to move or do complex tasks.

    - Self-Driving systems: Teaching self-driving cars to make decisions in busy settings.

  - Examples: Google DeepMind’s AlphaGo, making data centers use resources better, stock trading agents.
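
The reward-driven loop described under Reinforcement Learning can be sketched with tabular Q-learning on a tiny made-up task. Everything here is an assumption for illustration: a five-cell corridor with a reward at the right end, the learning rate, and the discount factor. To keep the result reproducible, this sketch tries every action in every cell in turn instead of exploring at random as a real agent usually would.

```python
GOAL = 4                 # rightmost cell of a 5-cell corridor
ACTIONS = (-1, +1)       # step left or step right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor (assumed values)


def step(state, action):
    """Environment: move, stay inside the corridor, reward 1.0 on reaching the goal."""
    nxt = min(max(state + action, 0), GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward


def train(sweeps=200):
    """Q-learning update applied to every (state, action) pair on each sweep."""
    q = {(s, a): 0.0 for s in range(GOAL) for a in ACTIONS}
    for _ in range(sweeps):
        for s in range(GOAL):
            for a in ACTIONS:
                nxt, reward = step(s, a)
                future = 0.0 if nxt == GOAL else max(q[(nxt, b)] for b in ACTIONS)
                q[(s, a)] += ALPHA * (reward + GAMMA * future - q[(s, a)])
    return q


def greedy_policy(q):
    """For each non-goal cell, pick the action with the highest learned value."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]


q_table = train()
policy = greedy_policy(q_table)
```

After training, the learned policy steps right from every cell. The agent was never told where the goal is. It only saw which actions eventually led to reward.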

Deep Learning (DL)

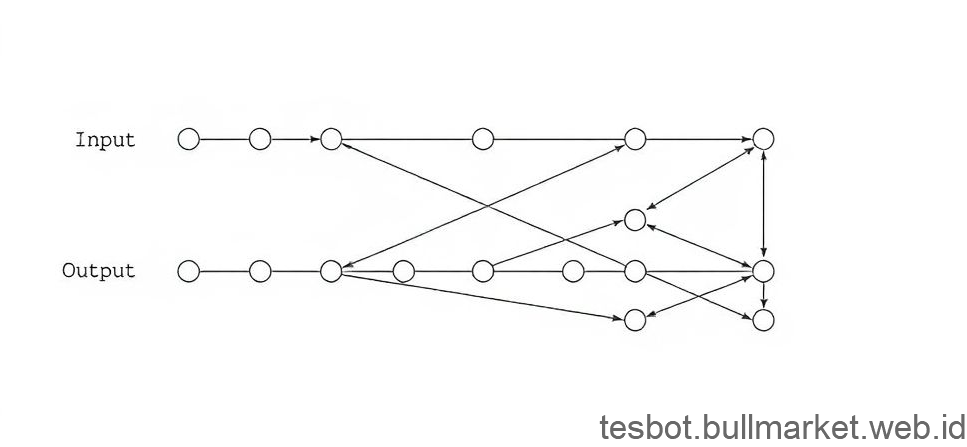

Deep Learning, DL, is a specialized part of Machine Learning. It uses artificial neural networks with many layers, which is what makes them “deep.” They learn representations of data at many levels of abstraction. They are loosely inspired by the human brain. Deep neural networks learn complex patterns from huge amounts of data. They often do better than older ML methods on complex tasks.

- Neural Networks Simply: A neural network is made of connected nodes called “neurons.” They are arranged in layers. Input data passes through these layers. Each neuron processes the data and sends the result to the next layer. Learning means adjusting how strong the connections between neurons are.

- Convolutional Neural Networks (CNNs):

  - Purpose: Used for analyzing images. They are very good at image recognition, object detection, and image classification tasks.

  - How they work: CNNs use convolutional layers. These layers learn image features step by step, from simple edges to complex shapes.

  - Examples: Facial recognition, medical image analysis, self-driving car vision.

- Recurrent Neural Networks (RNNs):

  - Purpose: For sequential data, where the order of items matters. Good for language tasks, speech recognition, and time-series forecasting.

  - How they work: RNNs have loops. These let information carry over from one step to the next. This gives them a memory for ordered data.

  - Examples: Language translation, next-word prediction, sentiment analysis, speech-to-text.

- Generative Adversarial Networks (GANs):

  - Purpose: A powerful class of neural networks used for making new data that looks like the training data.

  - How they work: GANs have two networks that compete. A generator makes fake data. A discriminator tries to tell real data from fake. Each one pushes the other to get better.

  - Examples: Making realistic fake pictures, making new music, designing new drug candidates.
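
The statement that learning means adjusting connection strengths can be shown with a single artificial neuron. The sketch below uses the classic perceptron update rule on the logical AND function. It is a deliberately tiny stand-in, not a deep network: the function names, the number of epochs, and the learning rate are assumptions for the example.

```python
def predict(weights, bias, inputs):
    """One artificial neuron: weighted sum followed by a step activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_neuron(samples, epochs=20, learning_rate=1):
    """Perceptron rule: nudge each connection weight in proportion to the error."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data for logical AND (chosen only because it is tiny and separable).
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_neuron(and_samples)
outputs = [predict(weights, bias, inputs) for inputs, _ in and_samples]
```

The weights start at zero and change only when the neuron gets an answer wrong. Deep networks stack many such units and use gradient-based updates instead of this simple rule, but the principle of adjusting weights to reduce error is the same.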

Natural Language Processing (NLP)

Natural Language Processing, NLP, is part of AI. It helps computers understand, interpret, generate, and work with human language. It bridges human communication and computer understanding. This lets machines process text and speech data.

- Understanding and Generating Human Language: NLP covers many tasks. These range from simple keyword spotting to deep understanding and creative text generation.

- Key Uses:

  - Chatbots and Virtual Assistants: Powering conversational AI that understands user questions and replies well.

  - Machine Translation: Translating text or speech from one language to another.

  - Sentiment Analysis: Finding the emotion behind text. For example, reading customer reviews for positive or negative feelings.

  - Speech Recognition: Turning spoken words into text.

  - Text Summarization: Making short summaries of longer texts automatically.

  - Spam and Content Moderation: Finding unwanted or inappropriate content.

- How it works: NLP often uses deep learning models. These models handle the fine details of human language. This includes context, grammar, and meaning.
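
To make sentiment analysis concrete, here is a deliberately simple word-counting sketch. It is not the deep learning approach described above: it swaps in a hand-made word list, and the word lists, function names, and sample reviews are all invented for the example.

```python
import re

# Tiny hand-made word lists; real systems learn these associations from data.
POSITIVE_WORDS = {"good", "great", "love", "excellent", "fast"}
NEGATIVE_WORDS = {"bad", "poor", "hate", "slow", "broken"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Count positive and negative words and report the overall label."""
    words = tokenize(text)
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "Great phone, I love the camera!",
    "Delivery was slow and the screen arrived broken.",
    "It is a phone.",
]
labels = [sentiment(r) for r in reviews]
```

A word counter like this ignores context, so it would label "not good" as positive. Handling context, grammar, and meaning is the reason modern NLP leans on learned models.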

Computer Vision (CV)

Computer Vision, CV, is an AI field. It teaches computers to see and to interpret visual information much as humans do. It develops ways for machines to capture, process, analyze, and understand digital pictures and videos.

- Machines Seeing and Interpreting Pictures: CV systems aim to extract useful information from visual inputs. This lets computers do tasks that need sight.

- Key Uses:

  - Facial Recognition: Identifying a person from a picture or video.

  - Object Detection: Finding and locating objects in a picture or video.

  - Image Classification: Putting pictures into groups based on what they show.

  - Medical Image Analysis: Helping doctors find illnesses by looking at X-rays or scans.

  - Augmented Reality and Virtual Reality: Making virtual worlds. Overlaying digital information on the real world.

  - Quality Control in Factories: Checking products for defects automatically.

  - Robots: Helping robots see their surroundings and handle objects.

- How it works: Deep learning, especially CNNs, transformed computer vision. It brought high accuracy to complex visual tasks.
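
The core operation inside a CNN layer is sliding a small filter over an image. The sketch below shows that operation in plain Python, with no learning involved. The function name `convolve2d`, the tiny image, and the hand-written edge filter are assumptions for illustration. As in most deep learning libraries, the filter is applied without flipping, which is strictly a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image ('valid' positions only) and sum the products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            row.append(sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            ))
        output.append(row)
    return output


# A 4x5 grayscale image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(4)]

# Hand-written vertical-edge filter; a CNN would learn such values during training.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = convolve2d(image, vertical_edge)
```

The output is large where the image changes from dark to bright and zero where it is uniform. A trained CNN holds many such filters and learns their values from data instead of having them written by hand.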

Robotics and Automation

Robotics is the field that deals with robots. This includes their design, construction, operation, and use. When robots are combined with AI, they become autonomous. They can do complex tasks. They can move around places. They can interact with the world. They do this without constant human help. Automation uses control systems and information technology to reduce human work in producing goods and services.

- AI in Physical Form: Robots are AI in physical form. Smart programs can act on the real world through sensors and actuators.

- Key Uses:

  - Factory Robots: Doing repetitive and precise tasks in manufacturing.

  - Self-Driving Vehicles: Cars, drones, and delivery robots that work without human drivers.

  - Service Robots: Robots used in health care, hotels, and homes.

  - Exploration Robots: Robots sent to dangerous places or distant planets.

  - Humanoid Robots: Robots made to look and talk like humans. They use advanced AI for natural conversation and movement.

- How it works: Robotics draws on other AI fields. It uses Computer Vision for seeing, Machine Learning for learning, and Planning for making choices.

Expert Systems

Expert systems were an early, successful form of AI. They became popular in the 1970s and 1980s. They are computer programs that act like a human expert in one field. They usually work from rules.

- Rule-based Systems Mimic Human Experts: Expert systems have a knowledge base. This holds facts and rules gathered from human experts. They also have an inference engine. It applies these rules to the data it is given. This produces conclusions or suggestions.

- Key traits:

  - Domain-specific: They are very specialized for one area of knowledge.

  - Explainable: They can often show why they made a choice. This is a big plus.

  - Symbolic AI: They represent knowledge with symbols and reason with logic, rather than only learning from data.

- Key Uses:

  - Medical Diagnosis: Helping doctors find illnesses from symptoms.

  - Financial Advice: Giving investment suggestions.

  - Configuration Systems: Helping engineers set up complex products.

  - Troubleshooting: Guiding workers to find faults.

- Today: Machine learning has taken over in many areas. But expert systems are still useful where clear rules and field-specific knowledge are needed. This is especially true for critical systems, where being able to explain a decision matters more than the raw performance of a black box.
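
The knowledge base and inference engine described above can be sketched with a few if-then rules and a forward-chaining loop. The rules below are hypothetical and exist only to show the mechanism. They are not medical guidance, and real expert systems used far larger rule sets and more refined engines.

```python
# Hypothetical rules for illustration only; this is not medical guidance.
RULES = [
    ("R1", {"fever", "cough"}, "possible_flu"),
    ("R2", {"possible_flu", "short_of_breath"}, "refer_to_doctor"),
    ("R3", {"sneezing", "itchy_eyes"}, "possible_allergy"),
]


def infer(initial_facts, rules=RULES):
    """Forward chaining: fire rules until no new fact can be derived."""
    facts = set(initial_facts)
    trace = []  # records which rule produced each conclusion
    changed = True
    while changed:
        changed = False
        for name, conditions, conclusion in rules:
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                trace.append(f"{name}: {sorted(conditions)} -> {conclusion}")
                changed = True
    return facts, trace


facts, trace = infer({"fever", "cough", "short_of_breath"})
```

The `trace` list is what makes the system explainable. Each conclusion can be followed back to the rule and the facts that produced it, which is hard to do with a model learned purely from data.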

Planning, Scheduling, and Optimization

This AI area builds smart agents that decide which actions to take to reach a goal in complex settings. It involves solving problems and making choices. It often deals with constraints and uncertainty.

- AI for Complex Decisions and Logistics: AI planning systems can study a problem. They identify possible actions. They predict the results. They put the actions in order to reach a goal.

- Key Uses:

  - Logistics and Supply Chain: Improving delivery routes. Planning factory lines. Managing inventory.

  - Robot Movement: Planning paths for robots that avoid obstacles.

  - Resource Allocation: Making better use of limited resources. For example, hospital beds or airline staff.

  - Game AI: Making smart opponents in video games that can plan complex strategies.

  - Automated Scheduling: Making schedules for meetings or projects.

- How it works: This field uses search algorithms, constraint satisfaction, and reinforcement learning. These help find good solutions to complex planning problems.
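
As one example of a search algorithm used in planning, the sketch below finds the shortest route across a small grid with breadth-first search. The grid layout and function names are invented for the example, and real planners often use more advanced methods such as A* or constraint solvers.

```python
from collections import deque

# Hypothetical floor plan: S = start, G = goal, # = obstacle, . = free cell.
GRID = [
    "S.#.",
    ".#..",
    "...G",
]


def find(symbol):
    """Return the (row, col) of the first cell holding the symbol."""
    for r, line in enumerate(GRID):
        if symbol in line:
            return (r, line.index(symbol))
    raise ValueError(f"{symbol!r} not in grid")


def shortest_path(start, goal):
    """Breadth-first search over free cells; returns the list of cells or None."""
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0])
            if in_bounds and GRID[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


path = shortest_path(find("S"), find("G"))
```

Breadth-first search explores cells in order of distance from the start, so the first time it reaches the goal it has found a route with the fewest moves. Here the route goes down the left edge and along the bottom row, avoiding both obstacles.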

Comparison of Core AI Technologies

Seeing how these core technologies relate gives a clearer picture of the AI field and where each one is used.

| AI Technology | Main Focus | Key Capabilities | Typical Uses |
|---|---|---|---|
| Machine Learning (ML) | Learning from data without explicit programming | Pattern recognition, prediction, classification, regression | Recommendation systems, fraud detection, predictive analytics, spam filtering, medical diagnosis support |
| Deep Learning (DL) | A part of ML that uses many-layered neural networks | Extracting features from raw data, learning complex patterns | Image recognition, speech recognition, natural language understanding, generative AI, self-driving car vision |
| Natural Language Processing (NLP) | Understanding and generating human language | Text analysis, speech to text, text to speech, translation | Chatbots, virtual assistants, sentiment analysis, machine translation, email filtering, voice control |
| Computer Vision (CV) | Machines seeing and understanding visual data | Object detection, facial recognition, image classification | Self-driving cars, security surveillance, medical image analysis, augmented reality, factory quality control |
| Robotics | Designing and operating smart machines | Physical interaction with the world, movement, object handling | Factory automation, surgical robots, autonomous drones, warehouse robots, humanoid assistants, exploration vehicles |
| Expert Systems | Acting like a human expert in specific fields | Rule-based reasoning, logic, explaining decisions | Medical diagnosis, financial advice, system configuration, troubleshooting, legal analysis tools |
| Planning & Optimization | Finding the best order of actions to reach goals | Decision-making, resource allocation, pathfinding | Logistics and supply chain, complex scheduling, autonomous navigation, game AI, production planning |

Conclusion

Artificial Intelligence is not one thing. It is a diverse and changing field with many types and methods. We went from task-specific AI to human-level and super-human ideas. Knowing these differences helps one see AI’s scope and future. We looked at how AI is sorted by its abilities. This showed the common Narrow AI, the hoped-for General AI, and the idea of Super AI. We also looked at sorting by how systems work. This went from simple Reactive Machines to Limited Memory, and on to the ideas of Theory of Mind and Self-Aware AI.

We then looked at the core methods that underlie almost all AI uses. Machine Learning helps systems learn from data. Deep Learning extends these abilities with neural networks. Natural Language Processing helps machines understand human language. Computer Vision lets them see and interpret the visual world. These combine with real-world uses in Robotics and Automation, with rule-based Expert Systems, and with strategy in Planning, Scheduling, and Optimization. Together they give a full picture of AI’s many sides.

The world of Artificial Intelligence keeps growing. It brings great opportunities and challenges. As AI becomes a bigger part of our lives and work, knowing its types is no longer a niche interest. It is a key part of digital literacy. Start by learning more about the AI methods that matter to your life or job today. Look at online courses. Read research papers. Try AI tools. Learning about AI never stops. Every step helps shape a smarter future.
