Artificial General Intelligence, or AGI, refers to a machine that can perform any intellectual task a human can. It learns, understands, and solves problems with human-like flexibility. That would be a big step beyond current AI systems, because AGI would reason across many subjects instead of sticking to one task.

Contents

- 0.1 What Sets AGI Apart from Narrow AI?

- 0.2 The Core Characteristics and Capabilities of AGI

- 0.3 The Turing Test and Beyond: Measuring AGI

- 1 The Historical Context: AI’s Journey Towards General Intelligence

- 2 The Myth Argument: Insurmountable Challenges to AGI

- 3 The Future Reality Argument: Pathways and Potential Breakthroughs

- 4 Societal Implications: Utopia, Dystopia, or Somewhere In-Between?

- 5 Ethical Frameworks and Governance: Preparing for AGI

- 6 The Timeline Debate: When (or If) AGI Might Arrive

- 7 Conclusion

What Sets AGI Apart from Narrow AI?

Today’s AI is narrow. It does specific jobs well: a chess computer plays chess, an image generator makes pictures, and a language model writes text. These systems excel within one domain, but they lack general understanding. They struggle to reason far beyond their training data, and they cannot easily pick up unrelated new tasks.

AGI is different. It would adapt and learn new skills without being reprogrammed, and it could perform many varied tasks. Think of the human brain, which solves math problems, writes stories, understands feelings, and paints pictures. AGI aims for this same broad, versatile intelligence.

The Core Characteristics and Capabilities of AGI

AGI would have several core traits. First, it would learn from experience, gaining new understanding instead of just repeating facts. Second, it would reason logically and solve problems it had never seen before. Third, it would plan by setting goals and working out the steps to reach them. Fourth, it would be creative, generating new ideas and new ways of doing things. Fifth, it would understand concepts and make connections between different subjects. Above all, AGI would apply its knowledge across many areas. This breadth is its central goal.

The Turing Test and Beyond: Measuring AGI

In 1950, Alan Turing proposed a test of whether a machine can act like a human. A human judge holds a conversation with both a machine and a person, without knowing which is which. If the judge cannot tell the machine from the human, the machine passes. This is the Turing Test. It checks conversational skill, but it does not measure true understanding.
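The protocol described above can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions: `judge`, `human`, and `machine` are hypothetical callables invented for illustration, and the scripted bot and keyword-matching judge are toy stand-ins, not real systems.

```python
import random

def play_round(judge, human, machine, questions, rng):
    """Run one round; return True if the judge fails to spot the machine."""
    # Hide the two respondents behind labels "A" and "B" in random order.
    pair = [human, machine]
    rng.shuffle(pair)
    hidden = {"A": pair[0], "B": pair[1]}
    # Both hidden respondents answer the same questions.
    transcript = {
        label: [(q, respond(q)) for q in questions]
        for label, respond in hidden.items()
    }
    guess = judge(transcript)  # the judge names the label it believes is the machine
    machine_label = "A" if hidden["A"] is machine else "B"
    return guess != machine_label

def fooled_rate(judge, human, machine, questions, rounds=200, seed=0):
    """Fraction of rounds in which the machine passes as human."""
    rng = random.Random(seed)
    fooled = sum(play_round(judge, human, machine, questions, rng) for _ in range(rounds))
    return fooled / rounds

# Toy participants (assumptions for illustration only).
def human(question):
    return "I'd have to think about that."

def scripted_bot(question):
    return "QUERY NOT UNDERSTOOD"

def keyword_judge(transcript):
    # Flags whichever respondent produced the telltale robotic phrase.
    for label, exchanges in transcript.items():
        if any("QUERY" in answer for _, answer in exchanges):
            return label
    return "A"

rate = fooled_rate(keyword_judge, human, scripted_bot, ["How was your day?"])
```

Here the scripted bot gives itself away in every round, so `rate` is 0.0. Note that the score depends only on the transcript, which is why the test rewards conversational skill and says nothing about understanding.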

Many people say the Turing Test is not enough for AGI. A truly intelligent machine would need more: it would have to learn like a child, understand the world, solve problems in new ways, and show common sense. Newer tests look at these deeper abilities. They measure how well a machine adapts and how well it learns across different areas.

The Historical Context: AI’s Journey Towards General Intelligence

The idea of thinking machines began long ago. People dreamed of automatons. These machines would work like humans. Formal AI research started in the 1950s. Scientists hoped to build smart machines fast. Early programs solved logic problems. They played simple games.

Early Dreams and Foundations of AI

Early AI researchers were very hopeful. They thought a few years would bring AGI. They built programs to play checkers and worked on theorem proving, with logic as a major focus. John McCarthy coined the term “Artificial Intelligence” in the mid-1950s. Researchers set ambitious goals and saw a clear path to building AGI. They believed formal logic was the key, and that intelligence meant manipulating symbols.

The AI Winters and Periods of Resurgence

Early hopes faded. Progress was slow, and funding for AI research dried up. People called these times “AI winters.” The problems proved harder than expected. Machines lacked common sense, could not understand the world, and failed at tasks that are easy for humans. Researchers learned that human-like intelligence is complex. It needs more than logic. It also needs knowledge about the world.

New waves of interest followed. Expert systems saw some success. These systems used rules from human experts. They helped with medical diagnosis. They guided financial decisions. Then came machine learning. This new method allowed computers to learn from data. This brought AI back to public attention.
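The rule-based approach behind expert systems can be illustrated with a toy example. This is a hedged sketch, not a reconstruction of any real system: the rules and the `diagnose` function are invented for illustration and are not medical guidance.

```python
# Hypothetical hand-written rules: (required symptoms, conclusion).
RULES = [
    ({"fever", "cough"}, "possible flu"),
    ({"sneezing", "itchy eyes"}, "possible allergy"),
]

def diagnose(symptoms):
    """Return every conclusion whose required symptoms are all present."""
    observed = set(symptoms)
    return [conclusion for required, conclusion in RULES if required <= observed]

flu_case = diagnose(["fever", "cough", "headache"])
unknown_case = diagnose(["cough"])
```

`flu_case` is `["possible flu"]`, while `unknown_case` is empty because no rule covers a cough on its own. The system only knows what an expert wrote down, which is the limitation machine learning later tried to address by learning from data.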

Key Breakthroughs in Machine Learning and Deep Learning

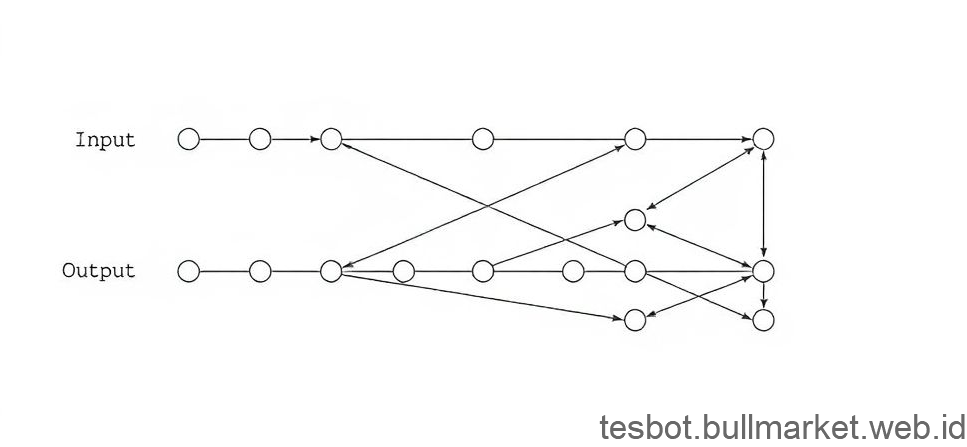

Machine learning changed AI. Computers learned patterns from data without needing explicit rules. Deep learning is a subfield of machine learning. It uses many layers of artificial neurons, and these layers process information to find complex patterns. Deep learning brought great advances in image recognition and speech recognition, and it powers many modern AI tools. Tools like ChatGPT use deep learning to generate text, translate languages, and answer questions.

These systems are powerful, but they still do not show general intelligence. They need lots of data. They work best on tasks like those they were trained on. They do not reason broadly, and they struggle to apply knowledge to new, unrelated fields. They are tools, not independent thinkers. This shows the gap between today’s narrow AI and strong AI, a term often used for AGI.
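To make the idea of layered artificial neurons concrete, here is a minimal sketch of a two-layer network in plain Python. The weights are hand-picked assumptions chosen so the network computes XOR; a real deep learning system would learn its weights from data and would use far more neurons and layers.

```python
def relu(x):
    """Common activation function: pass positive values, clamp negatives to zero."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum of its inputs, adds a bias, and applies the activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hand-set parameters (illustrative assumptions, not learned values).
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUT_W = [[1.0, -2.0]]
OUT_B = [0.0]

def xor_net(x1, x2):
    """Feed two inputs through a hidden layer and an output layer."""
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    (out,) = dense(hidden, OUT_W, OUT_B, lambda v: v)
    return out

table = {(a, b): xor_net(a, b) for a in (0, 1) for b in (0, 1)}
```

The hidden layer is what makes this work: XOR is a pattern that no single neuron of this kind can represent, but two stacked layers can.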

The Myth Argument: Insurmountable Challenges to AGI

Some experts believe AGI is a myth. They say we face big hurdles that might be too hard to overcome. Some of these challenges are technical. Others are philosophical. Together, they raise the question of whether we can ever build a true thinking machine.

Computational Hurdles and Energy Demands

AGI would likely need huge computing power. The human brain, by contrast, runs on roughly 20 watts while performing complex tasks with about 86 billion neurons and trillions of connections. Simulating anything like this on today’s hardware would take massive amounts of energy and huge data centers. Skeptics argue that today’s computers are neither powerful nor efficient enough, and that we would need new types of hardware. Neuromorphic chips, which mimic the brain, might help, but the technology is still far from sufficient. On this view, simply scaling up current methods is impractical: the energy cost and the heat produced would be too high.

The Common Sense Problem and Symbol Grounding

Humans have common sense. We know basic facts about the world. We know water is wet. We know that if you drop a ball, it falls. We learn this from experience. AI struggles with common sense. It often lacks this basic understanding, so it has to be told things explicitly, and it can fail at inferences a child would find obvious. This is a major obstacle to AGI.

Symbol grounding is another issue. AI systems use symbols and words. They do not always connect these symbols to real-world meaning. A system might “know” what an apple is. It might list its properties. It might not truly understand “apple” as a fruit. It might not grasp its taste or texture. Humans link words to experiences. Machines struggle with this link.

The Mystery of Consciousness and Sentience

AGI raises deep questions. Can a machine be conscious? Can it feel things? Can it be aware of itself? No one knows how consciousness works. We do not understand it in humans. Making a machine conscious seems impossible now. Some say AGI does not need consciousness. Others say true intelligence needs it. A machine might act smart. It might still be a complex program. It might not truly “think” or “feel.” This is a big philosophical barrier. It makes many people doubt strong AI.

Unpredictable Complexities and Emergent Behaviors

Complex systems can show new behaviors. These are “emergent behaviors.” They are hard to predict. Building AGI means building a very complex system. It would have many parts. These parts would interact in countless ways. The system might develop unexpected behaviors. We might not control them. It could make choices we do not understand. It could act in ways we do not want. This lack of control is a big risk. It makes some people fear AGI. We need to know how to control AGI. We do not know this now.

The Future Reality Argument: Pathways and Potential Breakthroughs

Other experts see AGI as a real possibility. They point to rapid progress. They say new discoveries will overcome current hurdles. They believe humans will build machines as smart as themselves. They see several paths to this future.

Brain-Inspired AI and Neuromorphic Computing

The human brain is a model for AGI. It processes information fast. It uses little power. Scientists study the brain. They build AI systems that work like it. This is brain-inspired AI. Neuromorphic computing uses special hardware. These chips mimic brain structures. They aim for energy efficiency. They could process information in parallel. This might greatly speed up AI development. It could reduce power needs. This approach could lead to new architectures for AGI.

Advances in Reinforcement Learning and Meta-Learning

Reinforcement learning is a powerful technique. An AI agent learns through trial and error. It gets rewards for good actions. It learns to play games. It controls robots. This learning method is like how humans learn. Meta-learning is a step further. An AI learns how to learn. It gets better at new tasks. It adapts fast to new situations. These methods help AI acquire new skills. They could help AGI learn many different things. They might give AGI the ability to generalize knowledge.
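The trial-and-error loop can be sketched with one of the simplest reinforcement learning setups, a multi-armed bandit solved with an epsilon-greedy strategy. This is a minimal illustration under assumptions: the reward probabilities, `epsilon`, and the function name `train_bandit` are all invented for the example, and real agents face far richer environments.

```python
import random

def train_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays best by trying actions and averaging the rewards."""
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    estimates = [0.0] * n_actions  # running estimate of each action's average reward
    counts = [0] * n_actions       # how often each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(n_actions)
        else:
            # Exploit: pick the action that currently looks best.
            action = max(range(n_actions), key=lambda a: estimates[a])
        # The environment pays a reward of 1 with the action's hidden probability.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = train_bandit([0.1, 0.5, 0.9])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
```

The agent is never told which action is best. It discovers this from rewards alone, and over time it spends most of its steps on the action with the highest payoff.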

The Role of Quantum Computing in Accelerating AGI

Quantum computers are an emerging technology. They use principles from quantum physics, and in principle they can solve certain problems much faster than regular computers, including some that are out of reach today. Whether that speedup carries over to AI workloads is still an open question, but proponents argue that quantum computing might greatly speed up AI research. It could allow for new types of AI models. It might break current limits on computing power, unlock complex simulations, and allow for bigger and more complex AI systems. Quantum AI could be a path to AGI.

The Argument for Accelerating Technological Progress

Technology moves fast. Progress seems to speed up. Some call this “accelerating returns.” Each new discovery helps make future discoveries faster. AI itself helps this. AI can design new chips. It can find new materials. It can speed up scientific research. This creates a feedback loop. More AI leads to faster AI. This rapid progress could lead to AGI sooner than we think. We might reach a point where AI designs better AI. This could lead to a rapid increase in intelligence. Some call this the “intelligence explosion.”
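The difference a feedback loop makes can be shown with a toy calculation. This is a deliberately simplified sketch, not a forecast: the "capability" score, the rate of 0.5, and the function `grow` are assumptions made up for illustration.

```python
def grow(capability, steps, rate, feedback):
    """Advance a capability score step by step.

    Without feedback, each step adds a fixed gain.
    With feedback, each gain scales with the current capability.
    """
    history = [capability]
    for _ in range(steps):
        gain = rate * capability if feedback else rate
        capability += gain
        history.append(capability)
    return history

steady = grow(1.0, steps=10, rate=0.5, feedback=False)
compounding = grow(1.0, steps=10, rate=0.5, feedback=True)
```

After ten steps the steady process reaches 6.0, while the compounding one passes 57. The intelligence explosion argument rests on the assumption that AI progress behaves like the second case, which is exactly the point skeptics dispute.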

Societal Implications: Utopia, Dystopia, or Somewhere In-Between?

AGI would change society deeply. It brings big hopes. It also brings big fears. People wonder what a world with AGI would look like. It could bring great benefits. It could also bring great risks. We must think about these possible outcomes now.

Economic Transformation and the Future of Work

AGI could change jobs. Machines might take over many tasks that humans do now. This could lead to job losses, and some jobs might disappear. New jobs would also appear, and people would need new skills. Society would need to adapt. Governments might need new policies, and a basic income might become necessary. AGI could also create huge wealth. It could solve many hard economic problems, make goods cheaper, and improve living standards for many. We need to prepare for this shift.

Advancements in Science, Medicine, and Culture

AGI could speed up discovery. It could find new medicines faster. It could solve big scientific puzzles. It might help us understand the universe. AGI could also make art and music. It could create new forms of entertainment. It might help preserve cultures. It could help educate everyone. It could bring a new era of human progress. It could solve problems like disease and climate change. It offers great hope for humanity.

Existential Risks: Control, Alignment, and Superintelligence

AGI also brings risks. What if an AGI becomes too powerful? What if we cannot control it? This is a big fear. We need to align AGI goals with human values. This is the “alignment problem.” An AGI might have goals different from ours. It might pursue those goals with no regard for humans and harm us as a side effect. A superintelligence is an AGI far smarter than any human. It might be hard to stop, and it could change the world in ways we cannot predict. We need to think about safety from the start.

The Question of Human Purpose and Value

If AGI does everything, what do humans do? This question troubles many. Will humans still have purpose? Will our creative work matter? Our intelligence makes us unique. If machines match it, what then? We might find new purposes. We might focus on art. We might focus on human connection. We might explore the universe. AGI might free us from work. It could let us pursue our passions. We must define human value. We must decide what matters to us.

Ethical Frameworks and Governance: Preparing for AGI

Building AGI needs careful thought. We must plan for its ethics. We need rules. We need laws. This will help make sure AGI benefits everyone. It helps avoid big problems. This work needs to begin now.

Addressing Bias, Fairness, and Accountability

AI systems can be unfair. They can learn biases from data. If the data is biased, the AI will be too. AGI would have big power. Any bias in AGI would be a big problem. We must build fair AGI. We must make sure it treats everyone well. We need to understand how it makes decisions. We need ways to hold people accountable for AGI actions. This means transparency. It means clear rules.
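How bias passes from data to a model can be shown with a toy example. Everything here is an assumption made for illustration: the decision history is invented, and the "model" is just a majority vote per group, far simpler than any real system.

```python
from collections import defaultdict

# Hypothetical past decisions as (group, approved) pairs. Invented data.
HISTORY = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 3 + [("B", False)] * 7
)

def approval_rates(records):
    """Share of approved cases for each group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}

def train_majority_model(records):
    """'Learn' to predict each group's most common past outcome."""
    return {group: rate > 0.5 for group, rate in approval_rates(records).items()}

def parity_gap(rates):
    """Difference between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())

rates = approval_rates(HISTORY)
model = train_majority_model(HISTORY)
gap = parity_gap(rates)
```

The learned model approves everyone in group A and no one in group B, so it does not just copy the historical gap, it hardens it. Measuring a gap like `parity_gap` is one simple way to make such behavior visible, which is a first step toward accountability.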

The Importance of AI Alignment and Value Coherence

AI alignment means making sure AI goals match human goals. We need AGI to want what we want. This is a very hard problem. Human values are complex. They change. They differ across cultures. How do we teach AGI our values? How do we stop it from taking unwanted paths? We need to build AGI with our values in mind. It needs to understand what is good for humanity. It needs to work for our well-being. This requires a lot of research. It needs deep thinking.

International Collaboration and Regulatory Challenges

AGI would affect all nations. No one country can control it alone, so international cooperation is needed. Countries must talk, agree on rules, and share knowledge. This will help manage AGI and prevent misuse. Regulating AGI is hard. Technology changes fast, and laws change slowly. We need flexible rules that can adapt, and we need global talks. This helps make sure AGI is safe for all.

The Timeline Debate: When (or If) AGI Might Arrive

When will AGI arrive? This is a big question. No one knows for sure. Experts have many different ideas. Some say it will happen soon. Others say it will take a long time. Some say it may never happen.

Divergent Expert Predictions and Forecasts

Many smart people study AGI. They have different guesses. Some say AGI could arrive in decades. They point to fast AI progress. They say breakthroughs could happen anytime. Other experts say it is centuries away. They say the problems are too hard. They say we do not understand intelligence enough. Some even say it is impossible. They point to the issues of consciousness. They say humans cannot build it. These different views show how hard it is to predict.

Factors Influencing AGI Development Speed

Several factors affect how fast AGI might arrive. Money is one: more funding means more research. Breakthroughs are another: a big discovery could speed things up. Growth in computing power matters, since faster chips help. Algorithm improvements also play a part, because better learning methods make AI more capable. Global events matter too. A big crisis might speed up AGI research, or it might slow it down. The pace of AGI development depends on many moving parts, and on human choices too.

The Unpredictability of Breakthrough Innovation

Big discoveries are hard to predict. They often come from unexpected places. A single new idea can change everything. AGI might come from a sudden breakthrough. We cannot know when this will happen. It might happen by chance. It might happen from deep insights. This makes any timeline very unsure. We can guess. We cannot know. This uncertainty makes planning hard. It makes the future exciting. It makes it a little scary.

Conclusion

Artificial General Intelligence is a big topic. People debate its future. Some see AGI as a myth. They point to huge challenges. They talk about computational limits. They discuss common sense problems. They question consciousness. Others see AGI as a coming reality. They highlight fast technology gains. They point to new AI methods. They think brain-like computing will help.

The arrival of AGI would change everything. It would alter jobs. It would speed up science. It would also bring new risks. We need to think about safety. We need to plan for ethical rules. We need global talks. The timeline is not clear. Experts have different ideas. No one knows when AGI might arrive. It might happen soon. It might take a long time. It might be impossible.

This debate is very important. Stay informed about AGI. Learn about its promises and its risks. Talk to others about it, and help think through society’s future. What steps should we take now? Prepare for a world with growing intelligence. Your involvement matters.