Long before computers existed, people imagined intelligent machines. The idea of AI draws on philosophy, math, and old stories. This early period set the stage for AI as a science.

Ancient Ideas and Machines

People have long wanted to build artificial beings, and old myths record this desire. In Greek stories, Hephaestus built automatons. Ancient Chinese accounts describe mechanical birds. Cultures worldwide dreamed of building smart machines. These early ideas were not science, but they made people wonder about intelligence and whether machines could copy it. Working automata came much later. Jacques de Vaucanson built a mechanical duck in the 1700s that seemed to eat and digest. It was simple clockwork, not intelligence. Yet it showed people’s wish to make machines act like living things.

Logic and Early Ideas

AI’s formal foundations lie in logic and math. George Boole created Boolean logic in the mid-1800s. It expresses reasoning with true and false values, and it later became the basis for digital circuits. This step turned parts of thought into math. Charles Babbage designed the Analytical Engine, an early design for a general-purpose computer with parts for arithmetic, memory, and conditional choices. Ada Lovelace wrote what is often called its first program. She saw that such a machine might one day work with music and art, a vision that resembles today’s generative AI. The 1900s brought more ideas. Karel Čapek’s 1920 play *R.U.R.* made the word ‘robot’ popular. Alan Turing linked logic to machine intelligence. In 1936, he described a universal machine that could carry out any computation, which laid the theoretical base for computers and AI. During WWII, he helped break the Enigma code, and that work showed machines could process information on a large scale. In his 1950 paper “Computing Machinery and Intelligence,” he proposed the Imitation Game, now known as the Turing Test, as a way to judge machine intelligence.

AI Begins: The Dartmouth Workshop (1950s-1960s)

The 1950s marked AI’s start as a science. It moved from ideas to tests. This time had great hope. It brought important early successes.

Dartmouth AI Project (1956)

The Dartmouth College summer workshop of 1956 launched AI as a field. John McCarthy, then a math professor at Dartmouth, organized it together with Marvin Minsky, Nathaniel Rochester, and Claude Shannon. Their proposal rested on a bold conjecture: every aspect of learning, and any other feature of intelligence, can in principle be described so precisely that a machine can simulate it. McCarthy coined the name Artificial Intelligence for this proposal. The organizers wanted machines to use language, form concepts, solve problems then reserved for humans, and improve themselves. The workshop did not produce a big discovery. But it brought people together, set a research plan, and gave AI purpose and hope.

Early Programs and Gains

After Dartmouth, the 1950s and 1960s saw new AI programs. These programs showed skills once only for humans:

- Logic Theorist (1956): Allen Newell and Herbert Simon, working with programmer Cliff Shaw, built this at what is now Carnegie Mellon University. It proved logic theorems from *Principia Mathematica*, and at least one of its proofs was more elegant than the original. It is often called the first program to imitate human problem solving.

- General Problem Solver (GPS, 1957): Newell and Simon also made GPS. It tried to solve many kinds of problems with one method, called means-ends analysis: compare the current state with the goal, then pick an action that reduces the difference. GPS handled well-defined puzzles such as the Tower of Hanoi. It did not reach general intelligence. But it advanced search methods.

- ELIZA (1966): Joseph Weizenbaum built ELIZA at MIT. This early language program acted like a therapist. It talked with users by spotting keywords and replying with simple scripted answers. Many users thought it was smart, despite its simple design. This is called the ELIZA effect: people tend to credit a program with more understanding than it has.

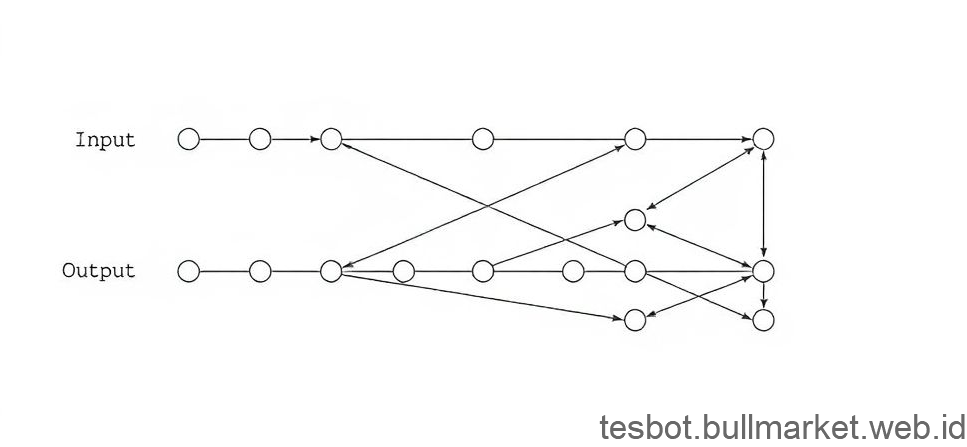

- Perceptrons (1957): Frank Rosenblatt built the Perceptron. This neural network could learn patterns from examples. It had limits: a single-layer perceptron can only learn patterns that a straight line (or plane) can separate. But it set the base for future neural network research.
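To make the Perceptron item above concrete, here is a minimal sketch of a single perceptron trained with the classic error-correction rule. It is an illustration only, not Rosenblatt’s original implementation; the function names, the learning rate, and the toy AND and XOR data sets are assumptions chosen for this example.

```python
def train_perceptron(samples, epochs=10, lr=1.0):
    """Train one perceptron with the error-correction rule (illustrative sketch)."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction  # -1, 0, or +1
            # Nudge the weights toward the correct answer only when wrong.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum crosses the threshold, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


# Toy data (assumed for illustration): AND is linearly separable, XOR is not.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias = train_perceptron(AND_DATA)
and_predictions = [predict(and_weights, and_bias, x) for x, _ in AND_DATA]

xor_weights, xor_bias = train_perceptron(XOR_DATA)
xor_predictions = [predict(xor_weights, xor_bias, x) for x, _ in XOR_DATA]
```

The perceptron learns AND within a few passes. It can never get all four XOR cases right, because no single straight line separates them. This is the kind of limit the item above refers to.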

This time had great hope. Early wins made people think human-level AI was close.

Golden Age and First AI Winter (1960s-1980s)

Early AI successes brought much hope. Funding grew. This time was called the Golden Age. But early methods had limits. Soon, people saw these problems. This led to a time of low spirits. Funding for AI fell. This was the first AI Winter.

Hope and Early Limits

AI research continued with big hopes in the 1960s and 1970s. Shakey the Robot showed new ideas. It combined sight, thought, and action. SRI International built Shakey from 1966 to 1972. Shakey could read orders. It planned actions. Then it did them in the real world. It used a camera and sensors. This was a big step for robot intelligence. But problems grew. Early AI systems had trouble with:

- Little Computer Power: Computers then were slow. They had little memory. Hard problems needed huge computer power. This power did not exist.

- Little Data: Early AI used programmed rules. They did not have large data sets. Data would power machine learning later.

- Fragile Systems: Programs often broke easily. They worked only in narrow settings. They failed with small changes to rules. Making them bigger for real-world use was hard. For example, a program might play chess. But it could not know a cat from a dog. Humans know common things easily. Machines could not learn these things easily.

First AI Winter (1970s)

Big promises for AI did not match real results, and doubt grew. In 1973, the British mathematician James Lighthill wrote a report that was critical of AI research in the UK. The Lighthill Report argued that AI had failed to meet its goals and was unlikely to deliver on its claims. This report, plus similar doubts in the US, led to funding cuts. The US Defense Advanced Research Projects Agency (DARPA) also reduced its support. This time of less money and less public interest was the first AI Winter. Many researchers left the field, and those who stayed were discouraged. Research moved to smaller, practical problems. It no longer aimed for human-level AI. This paved the way for a more practical approach in the next ten years.

AI’s Comeback: Expert Systems (1980s-1990s)

The first AI Winter was cold. But a new idea appeared in the 1980s. Expert Systems brought AI back. This time focused on real-world uses. Then came another slowdown. After that, modern machine learning began to grow.

Expert Systems Grow

Expert systems copied how human experts made decisions. They stored knowledge in a rule base, often as ‘if-then’ statements. An inference engine applied these rules to the facts of a problem. These systems did not aim for general intelligence. They focused on narrow areas that normally required human experts. Key expert systems included:

- MYCIN (1970s, Stanford): MYCIN diagnosed blood infections. It suggested antibiotics. It showed good accuracy. It was often as good as human doctors.

- XCON / R1 (1978, Digital Equipment Corporation): DEC used XCON to set up computers. This task was complex. It had thousands of parts and rules. XCON saved DEC millions of dollars each year. It was a first business win for AI.

Expert systems succeeded commercially, and XCON was the showcase. Interest in AI and money for it grew again. Japan started the Fifth Generation Computer Project (1982-1992). It aimed to build computers that could handle logic, language, and knowledge. This spurred AI funding and research around the world.
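The rule base and inference engine described in this section can be sketched in a few lines. The example below uses forward chaining, one common inference strategy: keep firing any rule whose conditions are all known until nothing new can be derived. The rules and fact names are hypothetical, loosely inspired by a configuration task, and are not taken from MYCIN or XCON.

```python
def forward_chain(rules, facts):
    """Apply if-then rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rule base: each entry is (conditions, conclusion).
RULES = [
    (("order_includes_disk",), "needs_disk_controller"),
    (("needs_disk_controller", "cabinet_has_free_slot"), "install_disk_controller"),
    (("install_disk_controller",), "add_controller_cable"),
]

full_result = forward_chain(RULES, {"order_includes_disk", "cabinet_has_free_slot"})
partial_result = forward_chain(RULES, {"order_includes_disk"})
```

With both starting facts, the engine chains through all three rules. With only the first fact, it stops after one rule, which hints at how such systems fail once a case falls outside the knowledge written into the rule base.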

Problems and Second AI Winter (Late 1980s/Early 1990s)

The expert systems boom ended fast. By the late 1980s, their limits became clear. This led to a second AI Winter:

- Fragile and Hard to Fix: Expert systems were hard to build. They needed much work to put human knowledge into rules. They also broke easily. They failed when problems were outside their small area. They failed with small changes to rules. Fixing and updating large rule bases cost too much. It took too long.

- Hard to Grow: The number of rules grew huge with complex areas. This made systems hard to manage. They also ran slowly.

- High Cost: Building and keeping these systems cost much money. Often, the cost was more than the gain for businesses.

The market for expert systems fell. AI funding dropped again. Many AI companies closed. Some moved to other fields.

Machine Learning Grows

Symbolic AI slowed down. But a big change quietly began. This was the move to statistical AI, or Machine Learning (ML). Researchers looked at ways for computers to learn from data. They did not use fixed rules. Key new ideas in this change included:

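- Backpropagation: This method existed earlier, but it came into wide use in the 1980s, especially after a 1986 paper by Rumelhart, Hinton, and Williams. It trains multi-layer neural networks by passing the output error backward to adjust the weights in each layer. This let networks learn hard patterns.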

- Decision Trees: Algorithms like ID3 (1986) and C4.5 (1993) grew popular. They helped with classification tasks. They offered models people could read and understand.

- Support Vector Machines (SVMs): SVMs became popular in the 1990s. They worked well for classification and regression. They had a strong theoretical basis and good results.

- Hidden Markov Models (HMMs): These models became common. They helped with speech recognition and biology data. They could model data in a sequence.
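As one illustration of learning from data instead of fixed rules, the sketch below computes information gain, the measure ID3 uses to choose which feature to split on. It is a simplified example under stated assumptions: the feature names, the four-row data set, and the function names are made up for this illustration, and a full ID3 implementation would apply this step recursively to build a tree.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, feature):
    """Entropy reduction obtained by splitting the data on one feature."""
    total = len(labels)
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Hypothetical toy data: which feature best separates spam from ham?
ROWS = [
    {"has_link": True, "all_caps": True},
    {"has_link": True, "all_caps": False},
    {"has_link": False, "all_caps": True},
    {"has_link": False, "all_caps": False},
]
LABELS = ["spam", "spam", "ham", "ham"]

gains = {f: information_gain(ROWS, LABELS, f) for f in ("has_link", "all_caps")}
best_feature = max(gains, key=gains.get)
```

Here `has_link` splits the labels perfectly, so it has the highest gain and would become the root of the tree. No one wrote that rule by hand; it falls out of the data.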

This change deeply altered how AI worked. Researchers no longer put human knowledge directly in. They focused on making algorithms. These found patterns and predicted things from data. This data-based way seemed less grand. But it set the base for huge gains in the 2000s.

Data and Machine Learning (2000s-2010s)

The 2000s began a new era for AI. The amount of available data grew enormously, and computing power increased sharply. Together, these let statistical machine learning, which had been developing quietly, move to the front.

More Power and Data

More computer power and data helped AI’s return. Machine learning gained much.

- Moore’s Law: The number of transistors on a chip kept doubling roughly every two years. Processors became faster and cheaper. They ran more demanding programs. They processed bigger data sets.

- Internet Growth: More people used the internet. This made huge amounts of digital data. Websites, social media, and devices added to this data. This large data gave machine learning programs what they needed to learn.

- GPU Gains: Graphics Processing Units (GPUs) were first built for game graphics. They also proved well suited to neural networks, because they run many calculations in parallel. This sped up training and made larger models practical.

- Better Algorithms: Many ML programs improved. They became stronger. They could handle larger data sets.

Everyday Machine Learning

With much data and strong computers, ML moved from labs. It went into everyday uses. People often did not know they used AI. Here are some examples:

- Spam Filters: ML programs became good at finding junk mail. They saved users from many unwanted emails.

- Recommendation Systems: Amazon and Netflix used ML. They looked at user habits. They suggested products or shows. This helped business.

- Search Engine Order: Google’s search got better. It used ML to grasp what people searched for. It ranked web pages by how helpful they were. This changed how people found facts.

- Voice Recognition: Voice systems improved with ML. Accuracy grew. Siri and Alexa became good helpers.

Open-source ML tools also grew. Libraries like Scikit-learn and platforms like Kaggle made ML available to more people. This helped new ideas spread and build on each other.

Main Points in Machine Learning

This time showed steady gains, not one big event. Here are main moments and tools:

| Year/Period | Event/Technology | Significance |
|---|---|---|
| Early 2000s | Spam Filters & Fraud Detection | Early, useful ML uses for daily digital safety. |
| Mid 2000s | Netflix Prize (2006-2009) | A contest that made recommendation systems common. |
| 2000s | Google Search Algorithms | ML constantly improved search order. This changed how people found facts. |
| 2000s | Probabilistic Graphical Models | These became key for showing complex links in data. This helped language tasks. |
| 2000s | Ensemble Methods (Random Forests, Boosting) | These showed strong accuracy and strength in ML tasks. |
| 2006 | Geoffrey Hinton’s Work on Deep Belief Networks | This started deep learning. It showed how to train deep neural networks well. |
| 2007 | ImageNet Dataset Initiative | This created a huge, labeled data set. It was needed to train large image recognition models. |
| 2011 | IBM Watson on Jeopardy! | It showed strong language skills and reasoning. It beat human champions. |
| 2010s | Open-Source ML Libraries Appear | Scikit-learn, TensorFlow, PyTorch made ML building open to more people. |

This table shows how data, computer power, and better programs brought machine learning into common use. This set the stage for AI’s next big change.

Deep Learning and Modern AI (2010s – Today)

AI skills grew fast in the 2010s. Deep Learning caused much of this. This big change came from better neural networks. It also came from huge data sets. These moved AI from small uses. AI became a leading technology.

AlexNet and ImageNet (2012)

Neural networks were an old idea, but their real power appeared in the 2010s. Two things made this possible. First, huge amounts of labeled data became available; the ImageNet dataset alone had millions of images. Second, GPUs supplied the needed computing power. The key moment came in 2012 with AlexNet, a network built by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton. AlexNet did much better than earlier models in the ImageNet image classification challenge and greatly cut the error rate. This showed deep learning’s power: deep networks could learn hard patterns directly from data, without hand-designed features. The ImageNet moment led to wide use of deep learning in many AI areas.

Growth in Many Fields

After AlexNet’s win, deep learning quickly grew. It reached top results in hard areas:

- Natural Language Processing (NLP): Deep learning changed how machines read and write human language. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks let models handle words in order. This improved speech recognition and machine translation. Google researchers introduced the Transformer architecture in 2017, and it changed NLP. It led to strong models like BERT and GPT. These models learned from very large amounts of text. They showed new skills in language understanding, and they could also generate, summarize, and translate text.

- Reinforcement Learning: Here, AI learns by trial and error. It acts in an environment and learns from the rewards it receives. This area made big progress. DeepMind’s AlphaGo beat Go champion Lee Sedol in 2016. This was a huge challenge for AI, because Go is complex and seems to need intuition. The win showed the power of deep reinforcement learning backed by massive computing power.

- Computer Vision: Deep learning helped with more than image classification:

  - Object Detection: It found and located many objects in a picture.

  - Face Recognition: It identified people with near-human accuracy.

  - Self-Driving Cars: Deep learning runs the perception systems of self-driving cars. It helps them read sensor data and move through complex places.

- Generative AI: This is a newer and fast-growing area. Generative AI makes new content. Generative Adversarial Networks (GANs, 2014) made realistic images and other data. Diffusion models, combined with language models that interpret the prompt, led to tools such as DALL-E, Midjourney, and Stable Diffusion. These make striking pictures from simple text. Chatbots built on large language models, such as ChatGPT, showed strong conversation and creative writing skills. This brought AI to everyone.
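The trial-and-error learning described in the Reinforcement Learning item above can be sketched with tabular Q-learning on a toy problem. This is far simpler than the deep reinforcement learning behind AlphaGo and is meant only to show the core idea. The five-state corridor, the reward scheme, and all names and parameter values are assumptions made for this example.

```python
import random

N_STATES = 5      # states 0..4 in a corridor; state 4 is the goal
ACTIONS = (0, 1)  # 0 = step left, 1 = step right


def step(state, action):
    """Deterministic toy environment: reward 1.0 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=300, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values from random exploration (Q-learning is off-policy)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.choice(ACTIONS)  # try things at random
            next_state, reward, done = step(state, action)
            # Move the estimate toward reward plus discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = q_learning()
# Best known action in each non-goal state after training.
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

The agent is never told that moving right is correct. It wanders at random, and the reward at the goal gradually propagates back through the table until the greedy policy steps right in every state.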

Ethics and Society

Modern AI grew fast. It brought many opportunities, but it also brought big problems. As AI gets stronger and more woven into society, worries about its ethics have grown:

- Bias: AI learns from biased data. It can spread and make biases worse. This happens in hiring, loans, or justice.

- Privacy: AI needs much data. Collecting and checking this data raises privacy worries.

- Job Change: AI-powered automation changes jobs. People fear many jobs will go away.

- Autonomous Weapons: AI-powered weapons are being developed. These raise hard ethical questions.

- False Info: Generative AI makes very real fake content. This causes worries about false facts. It also causes trust to fall.

Facing these ethical problems is key. Building good AI rules is a big task for researchers, leaders, and all of society. Today’s AI is not just about technical limits. It is also about its deep effects on society.

AI’s Future: Chances and Problems

AI’s next chapter is near. New ideas keep coming fast. The future promises more big changes. It also means we must face hard ethical and social problems.

More Growth Ahead

AI’s path points to new growth areas:

- Artificial General Intelligence (AGI) and Superintelligence: AGI means AI that thinks like a human in many tasks. This remains a key research goal. Beyond AGI is superintelligence. This means AI smarter than humans in almost every area. Many think AGI is decades away. But fast growth in narrow AI makes people discuss this more.

- Multimodal AI and Foundation Models: Today’s research focuses on AI that handles many types of data. This includes text, images, sound, and video. Foundation Models are huge, pre-trained models that can be adapted to many tasks. GPT-4 is an example. These models lead this trend. They aim for broader use and greater efficiency.

- AI in Science and Health: AI helps speed up science. It helps find new materials and design better drugs. AlphaFold predicts how proteins fold. AI also helps with personal health care. It supports diagnosis. It helps with robot surgery. AI will change health care and science.

- Edge AI and Decentralized AI: As AI models get more efficient, they move from cloud servers to devices. These devices include phones, sensors, and self-driving cars. This means faster responses, more privacy, and less need for a constant internet connection. Decentralized AI, like federated learning, lets models learn from data on many devices. It does not gather private data in one place.

- Human and AI Teamwork: AI will work with people, not replace them. AI will be a strong helper. It will add to human skills. AI helpers, smart design tools, and robots will work with people. They will make work better. They will boost ideas and problem solving in all fields.
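The federated learning idea mentioned in the Edge AI item above can be illustrated with its central aggregation step. In this sketch, each device trains locally and sends back only model weights, and a server averages them in proportion to how much data each device used. The local training is omitted, and the function name, weight vectors, and sample counts are hypothetical values chosen for illustration.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighting each by its local sample count."""
    total_samples = sum(n for _, n in client_updates)
    n_params = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(n_params)
    ]


# Hypothetical updates: (locally trained weights, number of local samples).
# Only these summaries leave the device; the raw data stays where it is.
CLIENT_UPDATES = [
    ([1.0, 2.0], 10),
    ([3.0, 4.0], 30),
]

global_weights = federated_average(CLIENT_UPDATES)
```

The second client has three times as much data, so the result sits closer to its weights. A real system would repeat this round many times and would usually add protections such as secure aggregation.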

AI’s path continues. Its future will come from new tech. Society also shapes it. How society leads and uses AI matters.

- Rules and Control: Governments globally work to set AI rules. They want safety and fairness. They want to avoid stopping new ideas. This includes talks on data privacy. It also covers how AI makes choices. It covers who owns generative AI ideas. It covers using strong AI systems well.

- Public Trust and Knowledge: AI will become more common. Building public trust is key. People need to know AI’s strengths and limits. Facing fears helps. Fighting false facts helps. Teaching about AI helps. These are important.

- Fair Access and Gains: A risk exists. Strong AI’s gains might go to only a few. This could make unfairness worse. We must ensure fair access to AI tech. We must also ensure its gains are shared widely. This is a main problem.

- Responsible AI Development: We must keep focusing on sound AI principles. These include fairness, transparency, and privacy. They also include human oversight. Research into explainable AI (XAI) is key. Robust AI systems will also be key. These will build trust and lower risks.

AI’s history shows human skill and drive. It moved from ideas to reality. This path had times of big hopes. It had times of doubt. It also had big gains. AI’s future is not set. People will shape it together. They will balance big chances with the deep duty AI brings.

Conclusion

We have seen AI’s history. It started with old ideas. Alan Turing laid its early logical foundations. Then it officially began at Dartmouth. We saw AI’s high points and its slow times. These periods tested AI. But they pushed it to use more data and stronger methods. Machine Learning then grew. The Deep Learning Revolution carried it further. Huge data sets and computer power moved AI forward. Now AI is everywhere. It powers our daily recommendations and voice helpers. It makes big strides in science and art. AI is no longer a dream. It is a part of today’s world. AI’s growth shows human skill. It shows constant learning. It also shows a steady search for intelligence. AI’s future looks bright. It may bring Artificial General Intelligence. It also promises stronger uses. But this brings deep duty. We must build AI ethically. We must share its gains widely. We must fit it well into society. Learn about AI today. Look into the parts that interest you. Think about the ethics of autonomous weapons. Learn about generative AI’s art. Study machine learning’s basics. Grasp AI’s future. Use its present. Appreciate AI’s history. It brought us here. Stay curious. Stay informed. Help shape this future.
