The idea of artificial beings with intelligence is old. Ancient Greek myths told of automatons such as Talos, a giant bronze man who protected Crete, and the golden maidens who assisted the smith god Hephaestus. These stories show a long-standing human interest in creating life-like entities that could think or act on their own.

These were only stories. The real roots of AI came much later, in philosophy, logic, and mathematics. In the 1600s, René Descartes asked whether machines could replicate intelligence, and argued that they could not truly reason or use language. The 1700s and 1800s brought progress in logic and mathematics. In the mid-1800s, George Boole created Boolean algebra, a symbolic way to represent logical thought that became a foundation of computer science.

Ada Lovelace was a key figure of this period. In the mid-1800s she worked with Charles Babbage on his proposed Analytical Engine. Lovelace saw that the machine could do more than arithmetic: it could operate on symbols other than numbers, and she even suggested it might compose music. This insight came roughly a century before electronic computers. It is why she is often credited as the first person to recognize the general-purpose potential of computing, an idea that AI would later build on.

The early 1900s brought more theoretical advances. Kurt Gödel’s incompleteness theorems and Alonzo Church’s lambda calculus deepened the understanding of computation and its limits. These ideas did not directly create AI, but they built the intellectual foundation for the entire field.

AI’s Birth (1950s)

Artificial intelligence as a field began in the mid-1900s, alongside the first electronic computers and the optimism surrounding them. Alan Turing, a British mathematician, proposed a central idea. In his 1950 paper, ‘Computing Machinery and Intelligence’, Turing asked whether machines could think. He then proposed a test, which he called the imitation game, to make the question answerable. It is now called the Turing Test.

The test involves a human questioner who converses in text with two unseen participants: one human and one machine. If the questioner cannot reliably tell the machine from the human, the machine passes. Turing’s paper gave AI research a clear goal. It also advanced the idea that intelligence could be a matter of information processing, not only a biological phenomenon.

John McCarthy, a computer scientist, coined the term ‘Artificial Intelligence’ in the 1955 proposal for the Dartmouth Summer Research Project on Artificial Intelligence, a workshop held at Dartmouth College in the summer of 1956. McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon organized it and brought together leading researchers. Their proposal stated the conjecture that every aspect of learning or any other feature of intelligence could, in principle, be described so precisely that a machine could be made to simulate it. This declaration set the agenda for years of research.

The Dartmouth conference is widely regarded as AI’s official birth. It gave the field its formal name, gathered key people, and laid out a research plan focused on problem solving, symbolic reasoning, and natural language processing.

Early Enthusiasm (1950s-1970s)

After the Dartmouth workshop, AI research flourished through the late 1950s and 1960s. Funding was readily available, and researchers made progress in many areas.

One of the earliest AI programs was the Logic Theorist (LT), developed by Allen Newell, Herbert A. Simon, and J.C. Shaw in 1956. LT imitated human problem-solving strategies to prove theorems from Principia Mathematica, Whitehead and Russell’s work on mathematical logic. It showed that computers could do more than calculate: they could manipulate symbols and reason with them. Newell and Simon then built the General Problem Solver (GPS), which aimed to be a universal problem-solving method applicable to many tasks.

Joseph Weizenbaum at MIT created ELIZA in 1966. ELIZA was a natural language program that, in its best-known script, imitated a psychotherapist. It spotted keywords in the user’s input and turned them back into questions, which made its conversations surprisingly convincing. ELIZA was simple, but it showed that conversation between humans and computers was possible, and it prompted people to think about intelligent machines.

Frank Rosenblatt’s Perceptron (1957) was an early machine learning model. The Perceptron algorithm learned from labeled examples to perform simple pattern classification. It was a step toward modern neural networks.
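The learning rule behind the Perceptron can be sketched in a few lines. The Python below is a minimal illustration, not Rosenblatt’s original formulation or hardware: a single threshold unit whose weights change only when it misclassifies an example. The function names `predict` and `train_perceptron`, the learning rate, and the toy AND dataset are assumptions chosen for illustration.

```python
# Minimal perceptron sketch: one linear threshold unit trained with
# error-driven updates. Names, data, and learning rate are illustrative.


def predict(weights, bias, x):
    """Return 1 if the weighted sum of inputs plus bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Adjust weights and bias only when a prediction is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Toy data: logical AND, which is linearly separable.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])  # [0, 0, 0, 1]
```

Because logical AND is linearly separable, these updates settle on weights that classify all four inputs correctly. A single unit like this cannot learn a function whose classes no straight line can separate.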

This period was full of optimism. Researchers believed human-level AI was near. Funding agencies such as ARPA (later renamed DARPA) provided generous support, driven by the Cold War and the space race.

| Year | Event/Milestone | Significance |
|---|---|---|
| 1843 | Ada Lovelace’s notes on the Analytical Engine | She saw that machines could do more than calculate with numbers, laying a foundation for general-purpose computing. |
| 1950 | Turing Test proposed | Alan Turing defined a test for machine intelligence based on what machines do. |
| 1956 | Dartmouth Conference | It named artificial intelligence, established AI as a formal field, and set its research goals. |
| 1956 | Logic Theorist (LT) | Often called the first AI program, it imitated human problem-solving through symbolic reasoning. |
| 1957 | Perceptron developed | Frank Rosenblatt built a basic neural network that learned to recognize patterns. |
| 1966 | ELIZA created | This early natural language program imitated conversation. |

The First AI Winter (1970s-1980s)

By the mid-1970s, the limits of early AI research had become clear. This led to a period known as the AI winter, in which funding and interest in AI research dropped sharply. Several factors caused this.

One major problem was that early AI systems were brittle. Programs like the General Problem Solver worked well in controlled settings but failed on real-world problems. They lacked common sense and could not apply what they knew to new situations. A language-understanding program, for example, might fail on slang, metaphors, or ambiguous phrases.

Another limit was computing power. Early computers were slow and had little memory, while tasks such as recognizing objects in images or understanding spoken language required far more computation than existed at the time. Researchers found that their prototypes could not scale beyond small problems because of these hardware limits.

The Lighthill Report (1973) did much damage in the UK. Commissioned by the British Science Research Council, Sir James Lighthill’s report reviewed AI research and concluded that its discoveries had not produced the major impact that had been predicted. This report, along with similar skepticism in the U.S., led to cuts in government funding for basic AI research. The field entered a difficult period, and many researchers shifted to more practical, narrower problems.

Expert Systems and Second Wave (1980s)

AI saw renewed interest after the first AI winter. In the 1980s, attention and funding turned to expert systems: programs that imitated the decision-making of human experts in specific domains, such as medical diagnosis or financial analysis.

Unlike earlier attempts at general AI, expert systems targeted small, well-defined problems. They worked by encoding large amounts of domain-specific knowledge as rules, often obtained from human experts. This knowledge base was paired with an inference engine that applied the rules to the facts of a case. Expert systems could then give advice, explain their reasoning, and perform tasks that once required human specialists.
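A rule base plus an inference engine can be sketched as forward chaining: repeatedly fire any rule whose conditions are all satisfied until no new conclusions appear. This is one common inference strategy, shown here as a minimal Python illustration; real expert systems differed in their rule languages and engines. The `forward_chain` function and the toy rules are hypothetical and are not drawn from any real system.

```python
# Minimal forward-chaining inference engine (illustrative sketch).
# Rules are (premises, conclusion) pairs; the rules below are hypothetical
# toy knowledge, not taken from any real expert system.


def forward_chain(facts, rules):
    """Fire rules whose premises are all known until nothing new is derived.

    Returns the final set of facts and a trace explaining each derivation.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                trace.append(f"{conclusion} because {' and '.join(premises)}")
                changed = True
    return known, trace


rules = [
    (("fever", "cough"), "possible_flu"),
    (("possible_flu", "short_of_breath"), "refer_to_doctor"),
]
known, trace = forward_chain({"fever", "cough", "short_of_breath"}, rules)
for line in trace:
    print(line)
# possible_flu because fever and cough
# refer_to_doctor because possible_flu and short_of_breath
```

Recording which premises produced each conclusion is what lets a system like this explain its reasoning, as the printed trace shows.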

R1 (later called XCON) was the most famous example. John McDermott at Carnegie Mellon University developed it for Digital Equipment Corporation (DEC), which began using it around 1980. R1 configured VAX computer systems, a hard task that involved selecting components and checking that they fit together. R1 succeeded, saving DEC millions of dollars each year and demonstrating AI’s commercial value.

R1/XCON’s success led to a commercial boom. Companies invested heavily in expert systems for many fields, including manufacturing and finance. New AI companies appeared, and AI became a popular topic again. But the boom also carried the seeds of its own decline.

The Second AI Winter (Late 1980s-1990s)

The expert systems boom ended quickly, leading to a second, less severe AI winter in the late 1980s and 1990s. Several factors drove this decline.

First, building and maintaining expert systems was costly. Extracting knowledge from human experts was slow and difficult, and as domains changed, updating the rules became a huge task. Expert systems worked well in narrow areas, but they were inflexible and could not handle new situations. Critics described them as elaborate lookup tables rather than truly intelligent systems.

Second, many companies overinvested in AI, expecting quick and large returns that did not come. Promises often exceeded actual capabilities, which led to disappointment as projects failed to deliver or to scale. Meanwhile, the specialized hardware built for these systems (LISP machines) became obsolete as general-purpose computers grew more powerful and cheaper.

This period saw reduced funding. University AI programs shrank, and doubt about the field was widespread. Many AI researchers moved into related areas such as database management and statistics, often avoiding the AI label because of its poor reputation.

Machine Learning’s Rise (1990s-2000s)

While public interest and funding cooled during the AI winters, a quieter shift took place. Researchers moved away from rule-based AI toward a data-driven approach: machine learning (ML). This shift laid the foundation for today’s AI.

Machine learning algorithms learn patterns from data and use them to make predictions or decisions, rather than following rules programmed by hand. Several factors fueled this shift.

- Data availability: The internet produced vast amounts of digital information, which supplied fuel for ML algorithms.

- More computing power: Faster, cheaper processors made it possible to train complex models on larger datasets.

- Better algorithms: Researchers created new ways to learn from data.

Key advances included:

- Support Vector Machines (SVMs): These worked well for classification.

- Decision Trees and Ensemble Methods: These handled complex predictions well. Examples include Random Forests and Gradient Boosting.

- Probabilistic Graphical Models: These helped represent and reason about uncertainty. Bayesian Networks are an example.

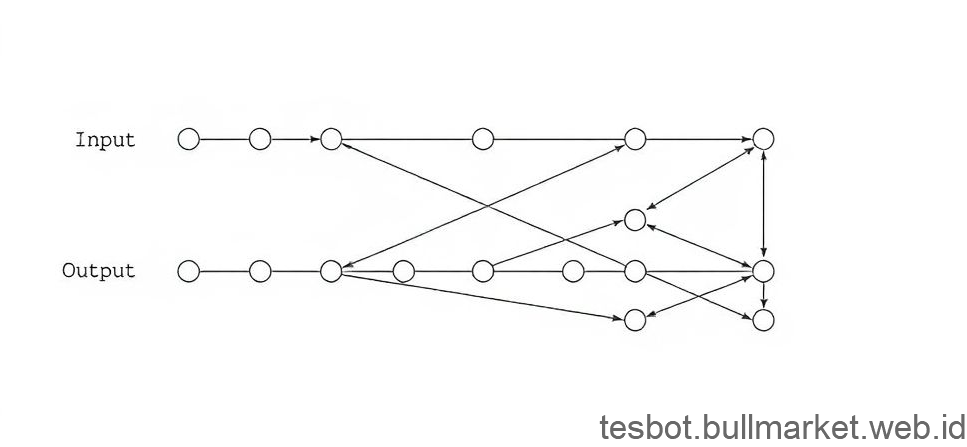

Neural networks also saw renewed interest. Marvin Minsky and Seymour Papert’s 1969 book, ‘Perceptrons’, highlighted the limits of single-layer networks, and afterward neural networks were largely dismissed. In the 1980s, however, researchers such as Geoffrey Hinton helped show how to train multi-layer neural networks using methods like backpropagation. Limited computing power still held these networks back.

The late 1990s and early 2000s saw important public events.

- Deep Blue vs. Garry Kasparov (1997): IBM’s Deep Blue chess computer defeated world champion Garry Kasparov in a match. It relied on massive search, specialized hardware, and a large opening book rather than modern learning algorithms. Even so, it showcased growing computing power and AI engineering, and it drew global attention.

- Data Mining: Using ML to find patterns in large datasets became popular in business and science, often without the AI label.

This period took a more practical approach to AI research. It focused on building systems that used statistical methods to solve specific problems, rather than trying to replicate human reasoning.

Deep Learning and Modern AI (2010s-Present)

The 2010s marked a major shift: the deep learning revolution. AI capabilities advanced faster than ever, driven by three factors:

- Massive Data: Data from the internet, social media, and sensors grew rapidly, providing abundant training material for models.

- Cheap Computing Power (GPUs): Graphics Processing Units (GPUs), originally designed for games, proved well suited to the parallel math needed to train large neural networks.

- Algorithm Improvements: New neural network designs and training methods made it possible to train much deeper networks. Rectified Linear Units (ReLUs) eased the vanishing-gradient problem, and dropout reduced overfitting.

Deep learning is a subset of machine learning that uses neural networks with many layers. Each layer learns features of the data at a different level of abstraction. In image recognition, for example, early layers tend to detect edges, middle layers detect shapes, and later layers detect whole objects.
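The layered computation described above, together with the ReLU activation and dropout mentioned earlier, can be sketched in plain Python. This is a toy illustration under stated assumptions: the weights are hand-picked and hypothetical rather than learned, the layer sizes are arbitrary, and training (backpropagation) is omitted. Real deep learning systems use libraries that run these operations on GPUs.

```python
import random


def relu(values):
    """Rectified Linear Unit: keep positive values, replace negatives with 0."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def dropout(values, rate, rng):
    """Inverted dropout (training only): zero each unit with probability
    `rate` and scale survivors by 1 / (1 - rate) to keep the expected value."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


def forward(x, layers, training=False, rate=0.5, rng=None):
    """Pass x through a stack of (weights, biases) layers.

    Hidden layers apply ReLU (and dropout when training); the last is linear.
    """
    rng = rng or random.Random(0)
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
            if training:
                x = dropout(x, rate, rng)
    return x


# Hypothetical hand-picked weights for a 2 -> 3 -> 2 -> 1 network.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0]),
    ([[1.0, 1.0, 1.0], [-1.0, 0.0, 1.0]], [0.0, 0.5]),
    ([[2.0, -1.0]], [0.1]),
]
print(forward([1.0, 2.0], layers))
```

Dropout is applied only during training; at inference time (`training=False`) every unit is kept, which is why the surviving units are rescaled during training.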

Key events from this time include:

- ImageNet Challenge (2012): AlexNet, a deep convolutional neural network, cut image classification error far below that of previous methods. Many consider this the start of deep learning’s rapid rise.

- AlphaGo (2016): Google DeepMind’s AlphaGo defeated Lee Sedol, one of the world’s top Go players. Go has a vastly larger space of possible positions than chess and had long been considered a grand challenge for AI. AlphaGo’s win showed that AI could master tasks requiring intuition and long-term strategy.

- Natural Language Processing (NLP) Advances: The transformer architecture reshaped NLP. Google’s BERT and OpenAI’s GPT series are examples. These models process and generate human language with remarkable fluency.

- Generative AI (2020s): Models such as DALL-E and Stable Diffusion generate images from text, and large language models (LLMs) such as GPT-3, GPT-4, and Gemini brought AI to a mass audience. Together, these models produce text, images, and code that often resemble human work, opening many new applications.

Modern AI affects almost every field. It powers shopping and streaming recommendations, self-driving cars, medical diagnosis, and scientific discovery. AI is no longer a future idea; it is part of daily life.

AI’s Future: Opportunities and Challenges

AI is entering a new chapter, and the future holds major opportunities and challenges.

Opportunities

- Transforming Industries: AI continues to transform health care (drug discovery and personalized medicine), finance (fraud detection), transportation (self-driving cars), education (personalized learning), and many other fields.

- Solving Big Problems: AI could speed up progress on global issues such as climate change, disease, and poverty.

- Augmenting Human Abilities: AI often works as a powerful assistant that boosts human intelligence and productivity. Tools that summarize information or generate content are already common.

- Scientific Discovery: AI is accelerating scientific research, from protein structure prediction to materials science and space studies, enabling findings that would be impractical with traditional methods.

Challenges

- Ethical Issues: AI algorithms can reflect bias, data use raises privacy concerns, and it is often unclear who is responsible for AI decisions. Misuse of AI (e.g., deepfakes, autonomous weapons) requires careful thought. Strong governance is needed.

- Job Displacement and Inequality: As AI automates tasks, people worry about jobs and about whether AI’s benefits will be shared fairly.

- Safety and Control: Powerful AI systems raise hard questions. How do we keep them safe, prevent harmful outcomes, and maintain human control? Artificial General Intelligence (AGI) raises deeper questions about existential risk.

- Responsible Development: AI must be built and deployed with care, transparently and in line with human values. This requires collaboration among researchers, lawmakers, and the public.

AI’s path has been remarkable, with periods of optimism, disappointment, and success. From its early ideas to today’s deep learning, AI has continually pushed the limits of what machines can do. The road ahead is complex, but understanding AI’s past helps us face its future, making the most of its benefits while reducing its risks.

Conclusion

We have traced AI’s history from ancient dreams of intelligent machines to today’s data-driven systems. Early philosophy and mathematics set the stage, and the Dartmouth Conference launched the field. Early optimism gave way to AI winters; machine learning then grew quietly, and deep learning grew rapidly, bringing AI to its current state. Figures such as Ada Lovelace and Alan Turing, and events such as Deep Blue’s victory and the ImageNet challenge, shaped this path. Today, AI is part of daily life and drives many fields, and its future promises powerful tools for solving human problems. Learn about AI’s potential, see how it affects your field, and understand how these systems work. Knowing AI’s past and present helps us shape its future with care and sound values.